In general, to get the worldspace axes, you'll need to chase up the hierarchy to the top, applying each parent's transformation along the way.

It's kind of a pain..
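A minimal sketch of that chase up the hierarchy, in plain Ruby rather than the SketchUp API. `Node`, `world_matrix` and `world_axes` are hypothetical names, and only the 3x3 rotation part is tracked, on the assumption that translation does not change axis directions. Inside SketchUp the same product would presumably be built from each group or component instance's `transformation`.

```ruby
# Hypothetical stand-in for a nested group/component (not the SketchUp API):
# a local 3x3 rotation stored as rows, plus a reference to the parent node.
Node = Struct.new(:local, :parent)

IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]].freeze

# 90 degree rotation about Z: local X maps to Y, local Y maps to -X.
ROT_Z_90 = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]].freeze

# Multiply two 3x3 matrices stored as arrays of rows.
def mat_mul(a, b)
  (0...3).map { |i| (0...3).map { |j| (0...3).sum { |k| a[i][k] * b[k][j] } } }
end

# Walk from the node up to the root, pre-multiplying each parent's transform,
# so the result is root * ... * parent * node.
def world_matrix(node)
  m = IDENTITY
  while node
    m = mat_mul(node.local, m)
    node = node.parent
  end
  m
end

# The columns of the world matrix are the node's X, Y and Z axes in worldspace.
def world_axes(node)
  m = world_matrix(node)
  (0...3).map { |c| (0...3).map { |r| m[r][c] } }
end
```

For example, a child rotated 90 degrees about Z inside a parent that is also rotated 90 degrees about Z ends up with its X axis pointing along world -X.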

Posts

-

RE: How to get current active axis?

-

RE: PointLight lumen power

@andybot said:

what are your camera settings for vray? The default setting in vray is for a sunlit exterior scene. A light bulb in a box is very dim compared to sunlight, and the camera exposure will reflect that.

Yes, making the shutter speed 1/4 second brightens it up a lot (and for Thea too), so it looks like that's the problem: the ISO and/or shutter speed multipliers need to be larger.

I guess I'm surprised it would take a quarter second exposure to capture a light bulb.. live and learn.

-

RE: Video Materials

@jiminy-billy-bob said:

Whow, that's awesome. Good job!

Maybe a quick summary on how you did that? (Broadly)

It's a streaming video decoder that plays a video of your choice into a SU Material of your choice.

The difference is that it is true streaming so, unlike gif or 'film strips', it does not need to be loaded into memory all at once.

Simple in principle but turned out to be tricky to get working.

-

Video Materials

While at Basecamp 2014 I rashly claimed that it couldn't be that hard to add video streaming materials to SketchUp.

I believe fermented beverages may have been consumed.

So here's a quick peek of where I'm up to - it runs entirely in the background so does not affect any SketchUp usage.

-

RE: PointLight lumen power

Hi Frederik (and anyone else), please don't take this thread the wrong way. This is not about showing LightUp to be great / scoring points or whatever. I hope we can have an adult technical discussion here.

I was just surprised - as I'm sure others would be - as to why when entering what I thought were reasonable values for f-stop and shutter speed - as in the kind I would use with a real camera - I get such a dark image.

And yes, I realized the Maxwell sphere was making the surface a little (but only a little) closer to the box surfaces.

I'm looking to be educated here. I haven't built a real 2m box and placed a 75 Watt bulb in there - but finger-in-the-air, I would expect it to be quite bright in there.. No? So I was expecting the renderers with physical camera controls to give a result of a brightly lit box.

I see I am mistaken because they're very dark. But why? Can you explain?

Adam

-

RE: PointLight lumen power

@oxer said:

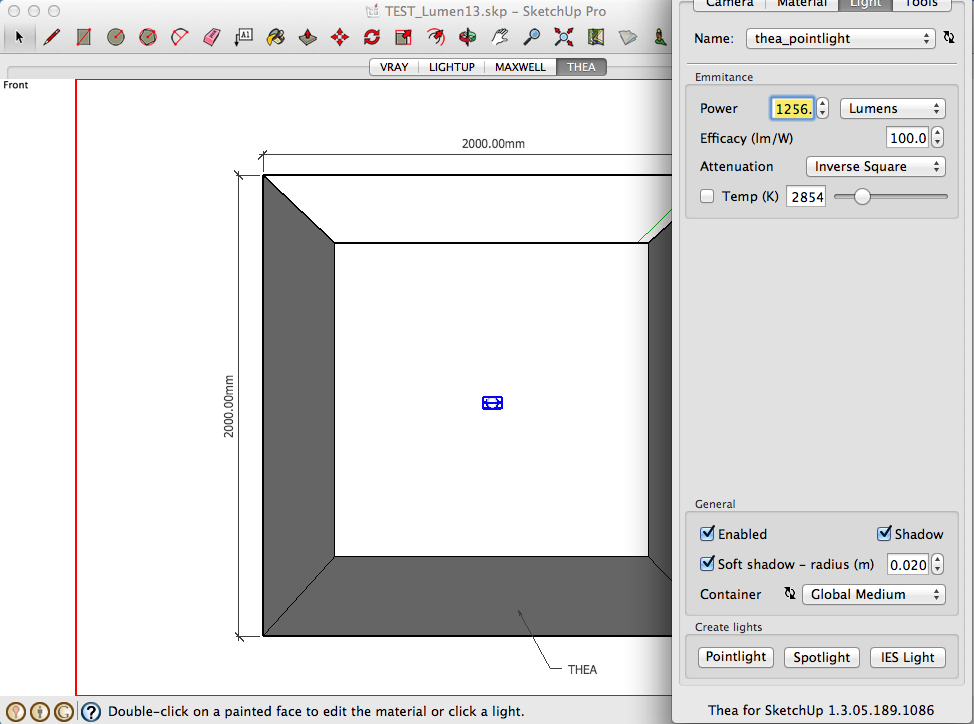

Giannis (Development Core Thea) wrote in Thea Forum this:

@unknownuser said:

I am writing here the requirements for a correct testing and comparison:

- Scene scale and geometry must be the same. Light model the same (point light always).

- Material reflectivity and model must be identical. Selected material model should be Diffuse (everything else varies from renderer to renderer), RGB color set to white (to avoid reverse gamma correction; even better use spectral color for reflectivity).

- Display settings that matter. Assuming no CRF with brightness=1, gamma=2.2, the following settings must be the same:

- ISO

- Shutter Speed (found in Thea display panel)

- f-Number (found in Thea display panel)

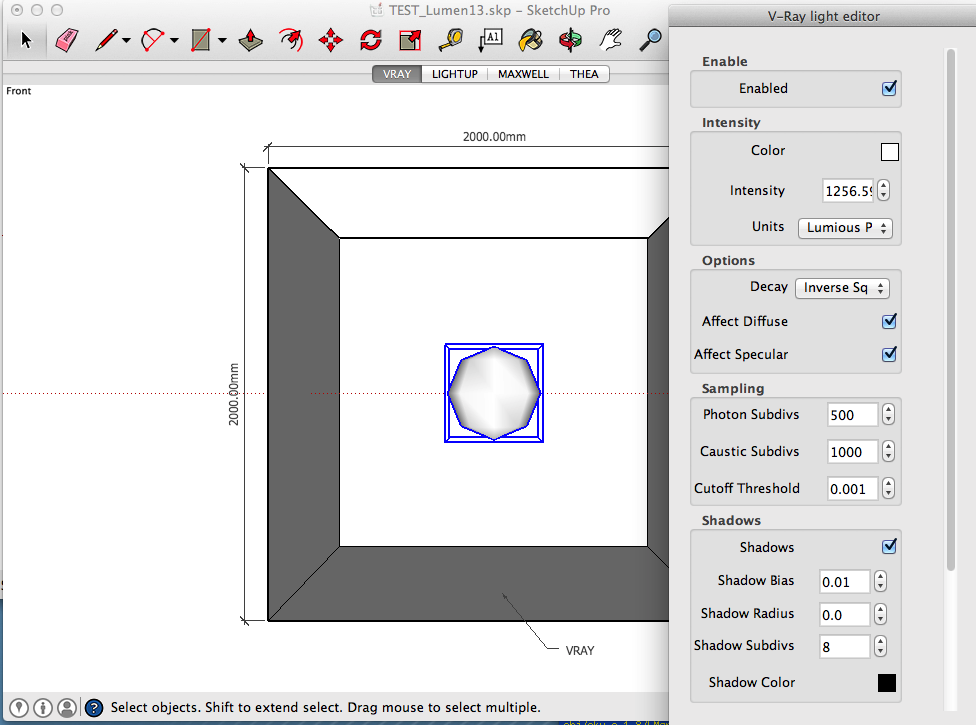

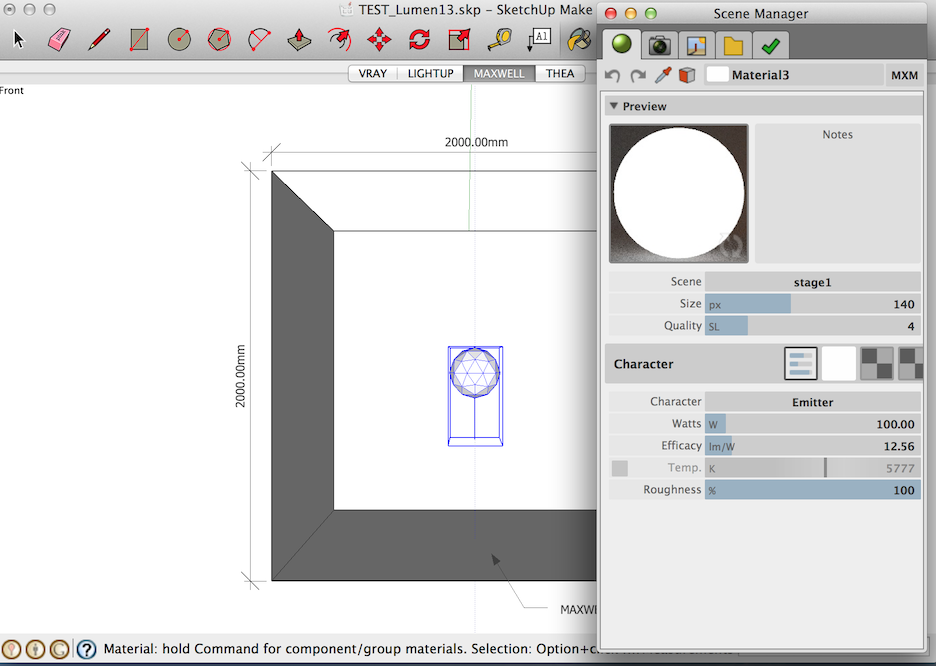

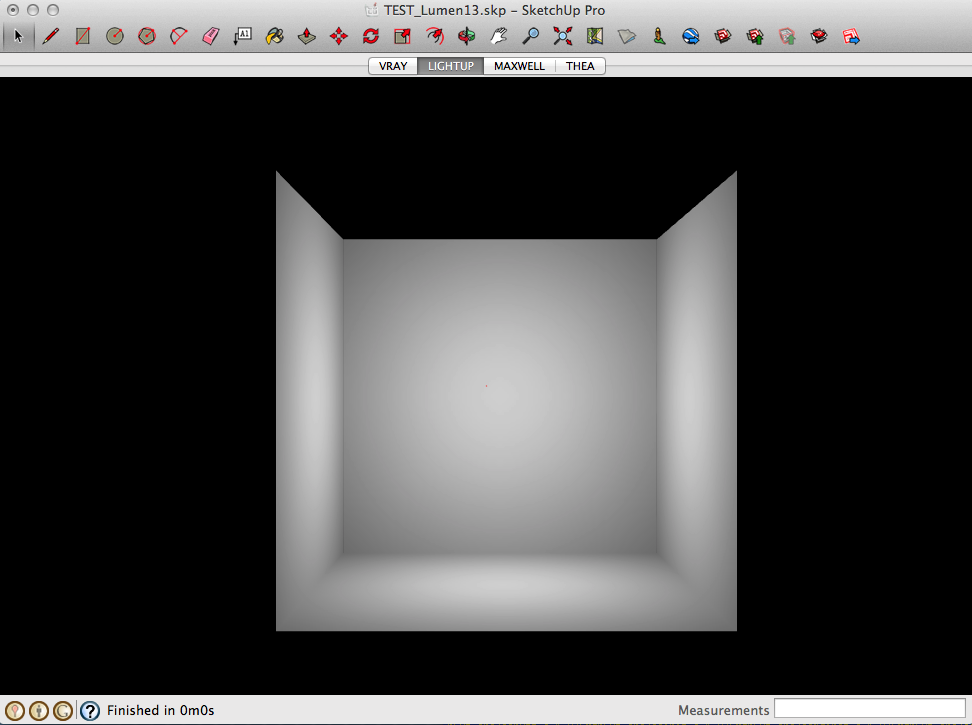

Sounds good. This is the model I'm using:

TEST_Lumen13.skp

A simple empirical test would be to stick a bulb in a 2m box and take a real photo (with known film, f-stop and shutter speed) and compare to the emulation.

-

RE: PointLight lumen power

Yes, I understand film speed is just one element. However with ISO 100 and some 'regular/default' camera settings of say, f8, 1/16s, I'd expect to see something..

I get if you keep the shutter open for a second, you're going to get a bright result.

I think your link is broken..
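A rough way to sanity-check the f8, 1/16s, ISO 100 settings mentioned above is to compare them with what a reflected-light meter would suggest for a white diffuse wall receiving 100 lx. This is only a sketch under stated assumptions: direct light only (no bounces inside the box), a perfectly diffuse wall with reflectance 1.0, and a meter calibration constant of 12.5, which is a common value but not something taken from any of the renderers discussed. The function names are made up for illustration.

```ruby
# Reflected-light meter calibration constant (assumed; 12.5 is a common value).
METER_K = 12.5

# Luminance (cd/m^2) of an ideal diffuse surface under the given illuminance.
def lambert_luminance(illuminance_lx, reflectance)
  illuminance_lx * reflectance / Math::PI
end

# Exposure value the meter would suggest: N^2 / t = L * S / K.
def metered_ev(luminance, iso)
  Math.log2(luminance * iso / METER_K)
end

# Exposure value implied by the chosen f-number and shutter time (seconds).
def settings_ev(f_number, shutter_s)
  Math.log2(f_number**2 / shutter_s)
end

wall = lambert_luminance(100.0, 1.0)
stops_under = settings_ev(8.0, 1.0 / 16) - metered_ev(wall, 100)
puts format("about %.1f stops less exposure than the meter suggests", stops_under)
```

Under those assumptions f8 at 1/16s lets in roughly two stops less light than the metered exposure, and opening the shutter to 1/4s closes that gap. Interreflection in a white box would raise the real light level, so treat this as a ballpark figure only.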

-

RE: PointLight lumen power

@pixero said:

Thanks for bringing this up Adam. Very interesting.

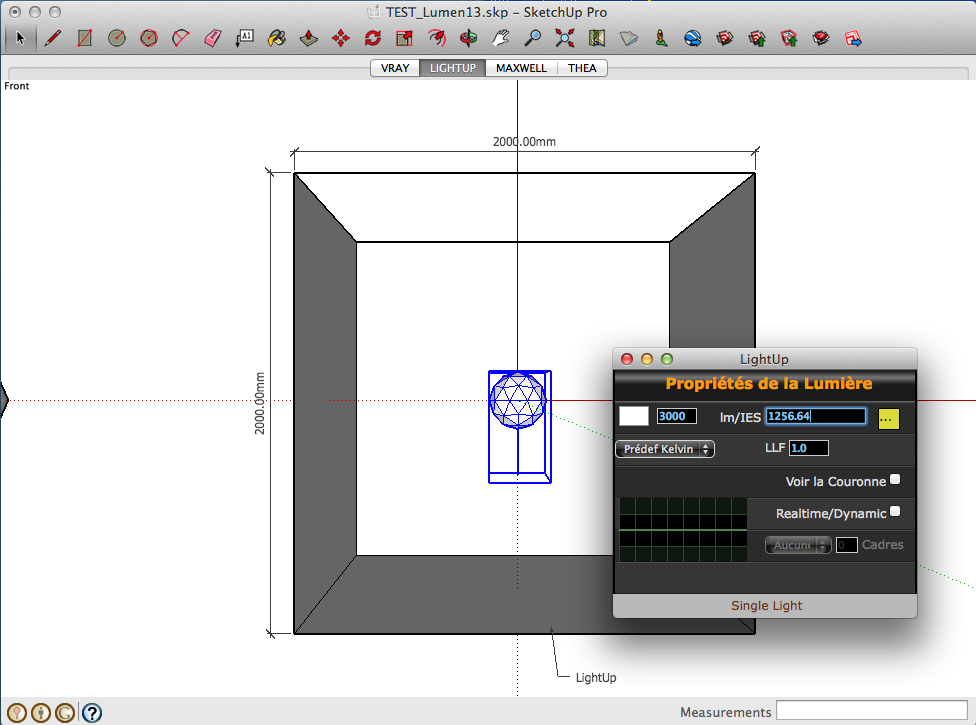

Does anyone know any good reference values for different lights?

IES files are definitive because they are real measurements produced in a lab.

The reason for choosing 1256.64 Lumen is this results in precisely 100 lx at 1m away - not my opinion or preference, it's just how Physics works.
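The arithmetic behind that figure, as a small sketch: an isotropic point source spreads its flux over 4π steradians, so intensity is flux / 4π, and illuminance on a surface facing the source falls off with the square of the distance. The function names are just for illustration.

```ruby
# Luminous intensity (candela) of an isotropic point source emitting flux_lm lumens.
def intensity_cd(flux_lm)
  flux_lm / (4.0 * Math::PI)
end

# Illuminance (lux) on a surface facing the source at distance_m metres.
def illuminance_lx(flux_lm, distance_m)
  intensity_cd(flux_lm) / distance_m**2
end

puts illuminance_lx(1256.64, 1.0).round(2)
puts illuminance_lx(1256.64, 2.0).round(2)
```

So 1256.64 lm works out to 100 cd, giving 100 lx at 1m and a quarter of that at 2m.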

@oxer said:

Like Thea is a physical based engine, the camera settings are identical to the real camera and this is very important in the final result of the render, so to compare the different render applications, you should use the same settings in all, not only the same ISO.

Surely a 75 Watt bulb in a small box photographed with a real camera loaded with ISO 100 is going to be brightly lit.

So if Thea is a physically-based engine then this is what we should see. Whereas this test appears to give a very dark box which does not match "physically-based cameras" in any way.

As I commented, I may well have overlooked something here. But surely "physically-based" rendering engines should give results that are a bit like a physically-based world?

Adam

-

RE: PointLight lumen power

Thanks for the tip.

So I don't doubt you can multiply up the render to get a brighter output.

My point was that with the standard ISO 100 "film" setting, a 2m wide box lit by a 1256 lumen PointLight (roughly equivalent to an old 75 Watt bulb) should be pretty well illuminated. Yet it comes out dark..

So the question is why does a 1256 lumen light not illuminate the box as expected?

Adam

-

PointLight lumen power

Bit of Background: There was a thread on a SketchUp FB page about having to artificially multiply/boost IES lights to get them to show in vray. Now editing an IES file really doesn't make any sense as it's real measurements of a real light source. If you're not getting enough light in your scene, you've got the wrong luminaire!

In my book, the only valid multiplier to be applied to IES files is LLF (Light Loss Factor) - but let's leave that for another time...

OK. It got me thinking so I looked at 4 popular renderers' handling of physically-based units of light.

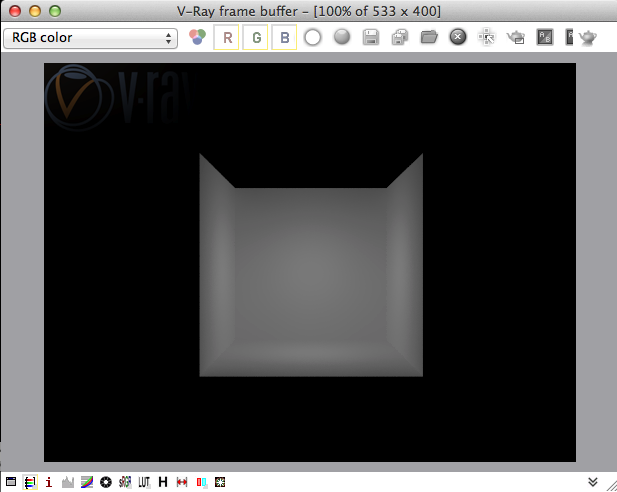

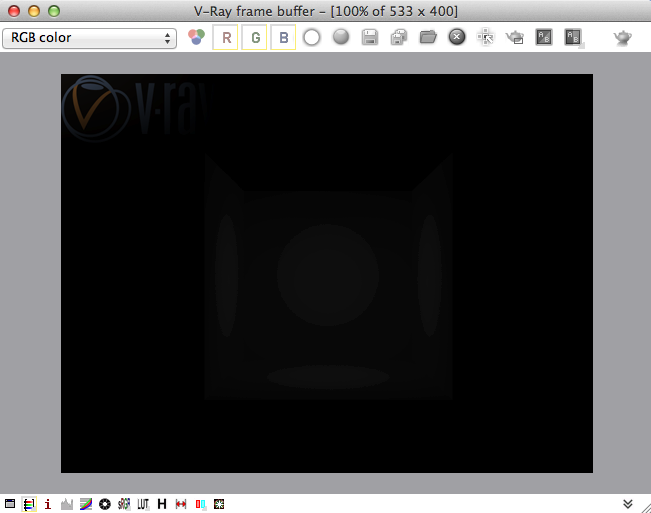

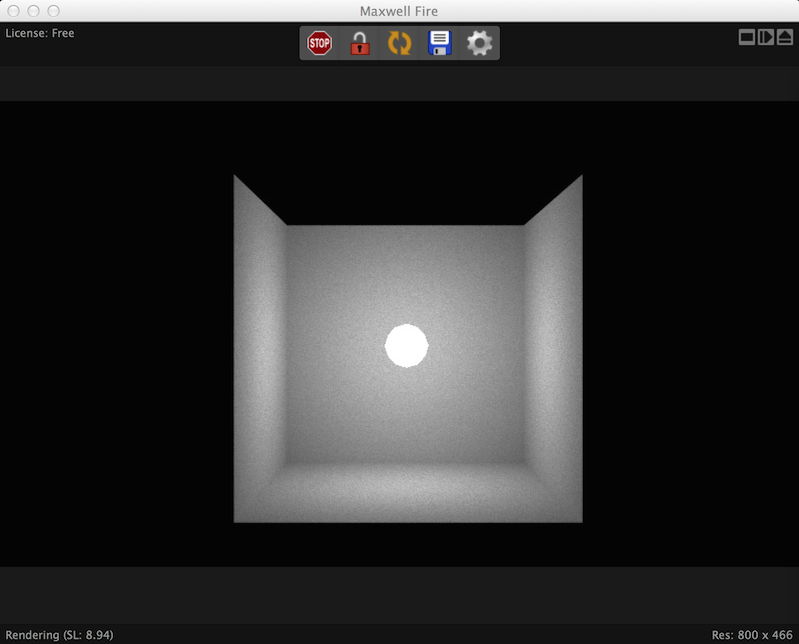

I tested: Maxwell, vray, LightUp and Thea

The test was a PointLight (aka Omnilight) source of 1256 Lumen at the center of a 2m box. Each surface is thus 1m away from the source at its nearest point.

The surprising results are vray and Thea seem way off on this - and I guess that would explain why the IES files users are having to manually boost to get them bright enough. Maxwell looks correct as does LightUp (which I knew already because it passes the CIE tests on this).

It's entirely possible I've simply missed a critical setup in vray or Thea as I'm no expert - but I can't see anything obvious. So is this known about?

Adam

-

RE: [Code] Raytest Util

Another way of thinking about it is that Arrays are actually Hash tables using a really simple hashing algorithm that is:

hash(N) => N

Have a good weekend!

-

RE: [Code] Raytest Util

@aerilius said:

@anton_s said:

I never thought Hashes were faster than Arrays.

Ruby saves developers from using primitive data types how data is stored in RAM, but it has more abstract, optimized data types.

Arrays are likely implemented as a double linked list (correct me if I'm wrong) and hashes as some sort of trees. You can see in Wikipedia what each of them are good at (see indexing).

Ruby Arrays are simply contiguous sequences of VALUES - no linked lists here.

Hashes are just Arrays - just not using an integer index to access but the digest of an arbitrary "Key".

So a bit like:

array["my key string".hash] = a_value

array[123.hash] = a_value

Hash tables take care of the generated key being larger than the array (aka Table) and also collisions (2 keys generating the same hash) - but essentially it's just an array.

Hash tables have no "ordering" - it's a Set or more properly a Collection since Values are not unique.

Aerilius, you may be thinking of Ordered-collections which can be implemented using a tree (red-black trees being a common example).
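To make the "it's just an array" point concrete, here is a toy hash table in plain Ruby. This is an illustrative sketch only, not how Ruby's built-in Hash is actually implemented: `ToyHashTable` is a made-up class, the digest is folded into the table size with a modulo, and collisions are handled by keeping a small list of key/value pairs in each slot.

```ruby
# Toy hash table: an Array of buckets indexed by the key's digest.
class ToyHashTable
  def initialize(size = 8)
    @buckets = Array.new(size) { [] }
  end

  # Store a value, overwriting any existing entry for the same key.
  def []=(key, value)
    bucket = bucket_for(key)
    pair = bucket.find { |k, _| k.eql?(key) }
    if pair
      pair[1] = value
    else
      bucket << [key, value]
    end
  end

  # Look a value up; returns nil when the key is absent.
  def [](key)
    pair = bucket_for(key).find { |k, _| k.eql?(key) }
    pair && pair[1]
  end

  private

  # The digest can be larger than the table (or negative), so wrap it
  # into a valid array index.
  def bucket_for(key)
    @buckets[key.hash % @buckets.size]
  end
end

table = ToyHashTable.new
table["my key string"] = :a_value
table[123] = :another_value
```

With a table size of 1 every key lands in the same slot, which is an easy way to see the collision handling at work.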

-

RE: [Code] Raytest Util

@oricatmos said:

@aerilius said:

I really see no advantage of having an imperfect built-in (or Ruby plugin) raytracer, while there are already so many dedicated renderers with better illumination models, more speed and more realistic output.

Yeah, I guess the raytest function would need to be able to take an array of rays and do multithreaded casting to be comparable to an external raytracer. By the way, we're doing room acoustics simulation, not visual rendering, with our plugin.

The wavelength of light is small so mostly you can ignore diffraction effects and treat light as moving in straight lines. Audio wavelengths are much longer and have significant diffraction around corners (hence you can hear round corners!), so how does using (straight line) raytracing help here?

Just interested..

Adam

-

RE: A Mobile Viewer That is Actually Useful

Doing CAD-like operations on a tablet poses some challenges. Hence the SU Ruler tool interaction style just wouldn't work on a tablet.

Looking at the use-cases for LightUp iPad Player, I decided to implement a "Laser measurement" style interaction: holding your finger over a surface sends out a perpendicular ray until it hits another surface. It works, it's simple and it covers common use-cases.
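The idea can be sketched in a few lines of plain Ruby. This is not LightUp's code: `Plane`, `ray_plane_distance` and `laser_measure` are hypothetical names, and surfaces are simplified to infinite planes rather than bounded faces.

```ruby
# Simplified surface: a point on the plane and its unit normal.
Plane = Struct.new(:point, :normal)

def dot(a, b)
  a.zip(b).sum { |x, y| x * y }
end

# Distance along the ray to the plane, or nil when the ray is parallel to
# the plane or the plane is at or behind the ray origin.
def ray_plane_distance(origin, dir, plane)
  denom = dot(dir, plane.normal).to_f
  return nil if denom.abs < 1e-9
  diff = plane.point.zip(origin).map { |p, o| p - o }
  t = dot(diff, plane.normal) / denom
  t > 1e-9 ? t : nil
end

# Cast a ray from the touched point along the surface normal and report
# the nearest hit, or nil if nothing is hit.
def laser_measure(origin, normal, planes)
  planes.map { |pl| ray_plane_distance(origin, normal, pl) }.compact.min
end

floor   = Plane.new([0.0, 0.0, 0.0], [0.0, 0.0, 1.0])
ceiling = Plane.new([0.0, 0.0, 2.5], [0.0, 0.0, -1.0])
wall    = Plane.new([3.0, 0.0, 0.0], [-1.0, 0.0, 0.0])
```

Touching the floor at the origin and casting along its normal skips the floor itself and the parallel wall, and reports the distance to the ceiling.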

Having a general measurement tool would be more difficult - not sure it could be justified..

Adam

-

RE: A Mobile Viewer That is Actually Useful

LightUp iPad Player supports the Dynamic Components LightUp supports - so opening doors should work fine.

It also supports Scenes and you can toggle face outlining too.

Adam

-

RE: The size of things.

On a related note (on ruddy enormous objects), this is an amazing factoid:

-

RE: [Code] Raytest Util

Just did a very quick'n'dirty test between Ruby raytest and LightUp raytest and it's not terrible under SU2014.

Raycasting a simple scene:

Ruby: ~250,000 rays/sec

LightUp: ~2,000,000 rays/sec (but in real usage it's multi-threaded so more like 10,000,000 rays/sec)

-

RE: LightUp Analytics wins competition

Yes, LightUp Analytics is geared toward compliance and analysis and includes all the regular LightUp functionality along with:

- Clear Sky (15 CIE standard skies)

- Daylight

- Sky Factor

- Vertical Sky

- Daily Insolation

- Daily Sunlight

- Monthly Insolation

- Monthly Sunlight

- Winter Insolation

- Winter Sunlight

- Annual Insolation

- Annual Sunlight (APSH)

-

RE: A Mobile Viewer That is Actually Useful

As you know it's not possible to screen capture an iPad, so it's going to be a faff to film it.

You could just download the free iPad Player and have a go. Just hold your finger over a surface to activate the measuring to an opposite facing surface.

Adam

-

RE: [Code] Raytest Util

It's fast enough for what it was designed for - which is casting some rays into your model to orient/locate GUI elements.

No, it is not suitable for raytracing etc where you'll be casting millions of rays.

Yes, it uses a spatial structure to accelerate queries.