Kemp's Outpost

-

Great!!!!!

-

@unknownuser said:

As the comparison images from SketchUp and Thea Render show, a lot of detail gets lost. When the geometry at the scene’s center is farther off, Diffusion seems to get a bit confused, as if it were nearsighted: it works great on what is directly in front of it, but distance seems to confuse the AI. So simple objects that are centered and close produce fairly reliable representations, while distant objects produce scattered or blurry results. But even with something as simple as a round porthole window, Diffusion translates the geometry into something out of a Salvador Dalí painting.

A very accurate description of the diffusion model as it currently stands. It has a bad-dream type of detail, in my opinion.

I think it's good for concept work but not suitable as final output. It can only get better, to the detriment of those who spent years perfecting their skills.

-

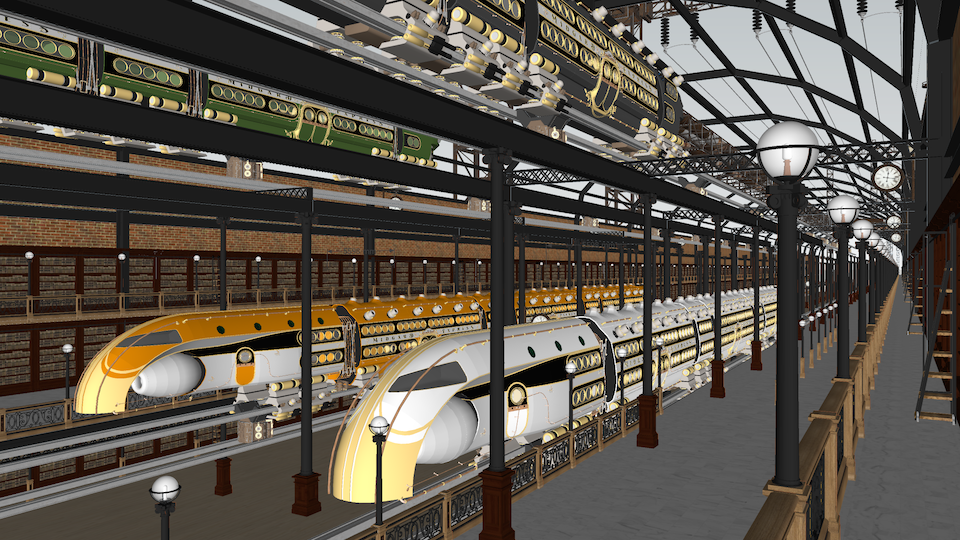

Steampunk Station Scene 4

Reference: Thea Render and SketchUp Screenshot

Diffusion:

-

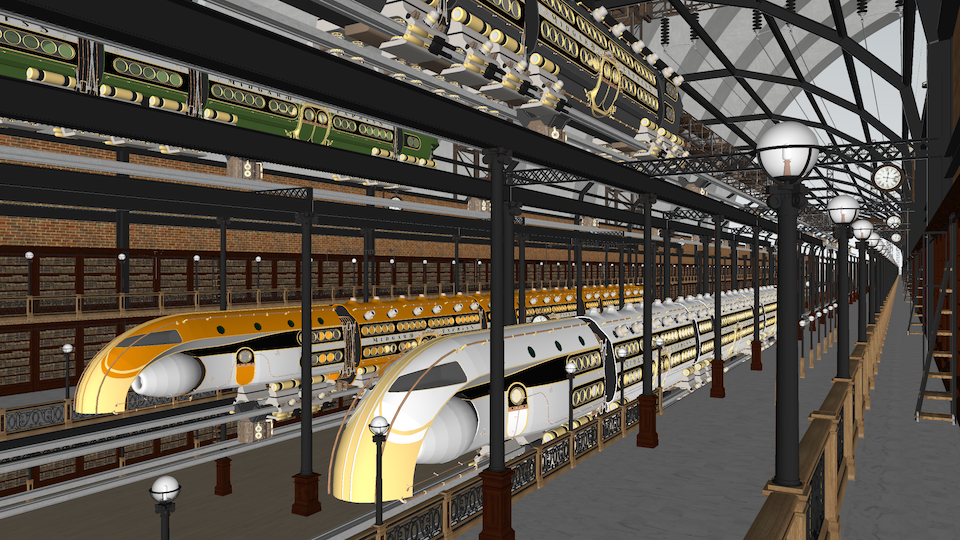

Steampunk Station Scene 8

Reference: Thea Render and SketchUp Screenshot

Diffusion:

Scene 8 B

Reference: Thea Render and SketchUp Screenshot

Diffusion:

-

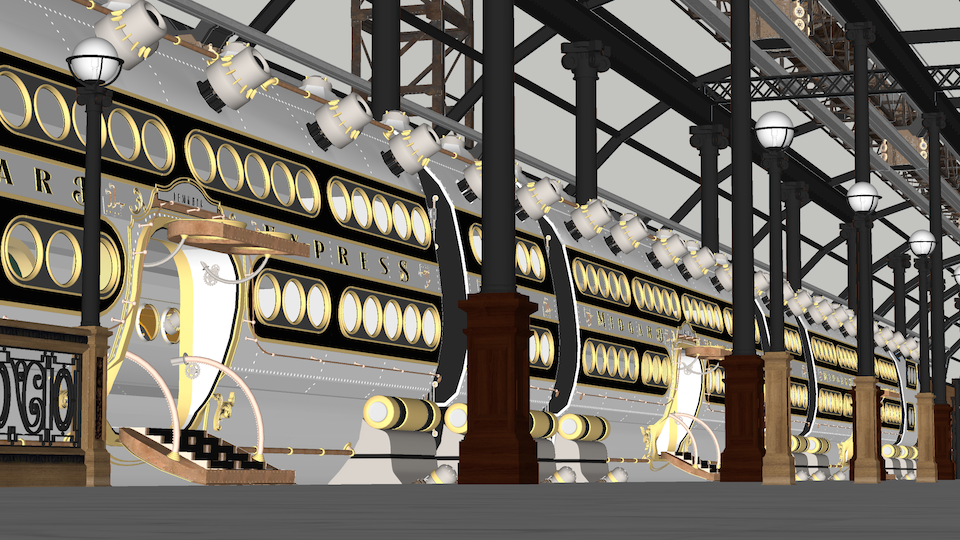

Steampunk Station Scene 14

Scene 14 A

Reference: Thea Render and SketchUp Screenshot

Diffusion:

Scene 14 B

Reference: Thea Render and SketchUp Screenshot

Diffusion:

-

Steampunk Station Scene 18

Scene 18 B

Reference: Thea Render and SketchUp Screenshot

Diffusion:

Scene 18 A

Reference: Thea Render and SketchUp Screenshot

Diffusion:

-

Thank you for kudos. Much appreciated.

-

Now, that's a massive model

-

@rich o brien said:

@unknownuser said:

But even with something as simple as a round porthole window, Diffusion translates the geometry into something out of a Salvador Dalí painting.

A very accurate description of the diffusion model as it currently stands. It has a bad-dream type of detail, in my opinion.

I think it's good for concept work but not suitable as final output. It can only get better, to the detriment of those who spent years perfecting their skills.

@Rich, I agree!

I spent three days looking at thousands of variations and selected a hundred-plus that I thought were reasonable, as the others were just NUTS! Then my family spent a day helping to pick their favorites, which I weeded down to those the family mutually agreed on plus a few of my own favs.

But even though this is not my best Thea Render effort, which wasn't the point of this exercise, preparing the materials alone for rendering took three days, with edits and tests, plus two days just to make 10 quick renders. Part of me is screaming, "not fair," while the other part is crying that the world doesn't care one way or the other and that this is the future as this tech improves. For myself, I see this as the DJ that took bands out of bars, concerts, weddings and parties, because owners could have a night of music at 1/8th the price or less!

However, before I ranted too much in public on the issue, I knew I needed to immerse myself in some practical tests to wrap my head around the subject enough to have an opinion, thus these tests. Alas, the future looks bleak, exciting, fun and cheap. I guess those who are celebrating are those who can work faster and cheaper for clients who are less discerning about quality, talent and experience... with the exception, as you mention, of concept work. If I can vomit out an idea to get an agreement for work to be done, then I have no moral issue with using this tool, as it does that very well. But with this tech in the hands of newbies who have no understanding of the challenges of getting realistic results, clients' expectations will change as well, and they will accept the one-click solution as equal to the sweat, experience and passion of the rendering they used to "pay" for. I may be a bit slanted or prejudiced, but I think I'm being rather realistic in my assessment as well.

As we saw digital music replace musicians, the more this tech advances, I believe we will see (or are already seeing) the mortality of the technical expertise of the artistry and profession we've known. The day that AI produces "controlled" work in perfection under direction (or automated) is the day that the masters' and gurus' end has come in this game.

LOL! And all that after all this "fun" becoming familiar with the tool. I believe you and others who share this perspective are spot on.

-

I think that no matter how good it gets it will always lack imagination.

People still draw and paint despite disruption with digital media.

I'm optimistic about it. Rock'n'roll is here to stay

-

I sure hope you're right!!!!

Please be right.

-

There are areas in which AI is much better than normal render engines, such as textiles! I've decided to render architecture classically, render textiles in SketchUp Diffusion, and then mix the two together in Photoshop.

In your case it's about architecture, where rendering is better than Diffusion.

-

Thanks for doing these comparisons.. very cool to see.

-

@jo-ke said:

The light is often better in diffusion.

Yeah, in those few cases in which the sun isn't casting shadows in 4 different directions, maybe..

-

@marked001 said:

Thanks for doing these comparisons.. very cool to see.

Glad you appreciate them for that reason. Thanks.

-

This would be a great article on the site here...

https://sketchucation.com/forums/viewtopic.php?p=695131#p695131

It's useful, informative and honest feedback from a power user's viewpoint.

It reminded me of another one I read last month on the topic of 3D rendering and AI.

-

Just read the article. Yes, he sums up rather well the advantages and weaknesses, especially that of control.

Good for initial concept ideas, but... not a solution for "on purpose" projects.

-

I have just spent several minutes smiling like a loon. Thanks mate.

-

@mike amos said:

I have just spent several minutes smiling like a loon. Thanks mate.

Loon: North American waterfowl, primary character from "On Golden Pond" that forebodes doom and gloom.

Loon: a relative of Daffy Duck and/or Woody Woodpecker, characters that laugh at doom and gloom.

In either case, I assume that is a positive thing.

-

@duanekemp said:

not a solution for "on purpose" projects.

A couple of weeks ago, while I was modeling that compost turning machine you probably saw on the SketchUp Facebook page, a friend came out with one of those "magic" AI modeling websites..

He prompted the robot to produce "A square sofa pillow with white and red stripes" to put it in a rendering.

The robot proposed 4 different (half decent) pillows and he was like "You see? You can use this stuff for actual work".

So I was curious to test it for my "actual work" and typed in more or less the following (which were the exact requirements for my animated model) just to see to what extent it could be "useful":

I need a self-propelled compost heap turning machine, about 3 meters tall and 4 meters wide.

It should consist of 3 draw calls, the first one for the main body, the second one for the animated tracks and the third one for the animated roller.

The main material should be orange paint with compost splats coming from below and the "AMIU Puglia S.p.a" logo on the back of the cabin.

The textures should be packed for Unity HDRP metallic-smoothness PBR shader.

I need the UV chart to be split in 2 different UDIM tiles, a 4k set for the main body and a 2k set for the animated parts.

It should be rigged to follow a spline and I need constraints on the tracks and the roller in order to follow accordingly whenever the model animates along the spline.

The robot proposed 4 different machines similar to a coffee grinder (static models, with only a base color map, about 1m x 1m x 1m in size, with no tracks or roller whatsoever).

Two were blue and two were green.

None of them was even orange.

So yeah.. that's it
