@TIG: That assumes that the cameras in ACT use only the existing ruby classes. Is that the case, or did they create new classes with extended functionality?

(I'm very familiar with the API docs.)

Is there a ruby API for plugins to access the camera information?

Most of what I would add has been said already.

I'm a proponent of C++. Being object oriented, it's a much more natural programming structure for me than C, but you should probably pick whichever you are more comfortable with. If you use a lot of modules and classes in Ruby, I think you'll find the transition to C++ much more logical, with its namespaces, classes, etc.

Visual Studio Express 2008 is great for Windows machines. I've had years of experience with VS so I can't really say how easy it is to just jump into, but I think it's as easy as most other IDEs or build environments.

As far as cross-platform goes, if you are planning on any kind of GUI, I strongly suggest a good cross-platform framework, like wxWidgets. Not only does it add tons of GUI support, but it also covers a lot of things you may not think of as platform-specific: file I/O, networking, images, etc.

But even with a cross-platform framework, switching from Win to Mac is rarely straightforward. Be prepared to write some platform-specific code.

Last, don't underestimate the garbage-collection issue. If you have a single C/C++ method in which you make many ruby calls:

    void my_method(VALUE something)
    {
        VALUE val_a = rb_funcall(something, rb_intern("blah_blah"), 0);
        VALUE val_b = rb_funcall(something, rb_intern("blah_blah2"), 0);
        VALUE val_c = rb_funcall(something, rb_intern("blah_blah3"), 0);
        /* ... work with val_a, val_b and val_c ... */
    }

At any point, Ruby can "collect" and delete the Ruby objects, regardless of whether you are still in your C method. Ruby doesn't know that you are still in the function you were in when you asked for the object. So if these are transforms, and you go to multiply val_c by val_a, you may very well find that val_a has been deleted before you even get to val_c. I suggest understanding how Ruby does its mark-and-sweep garbage collection and working with it.

It would be great if the MaterialObserver 'onMaterialChange' would trigger in the event of any change to the material (color, alpha, texture scale, etc). If that's not possible, an additional method that does it would be nice.

@adamb said:

No, it means SU sends 3-component texture coordinates to OpenGL and allows OpenGL to interpolate and divide at each pixel.

Just as Adam says, OpenGL provides methods not just for 2 texture coordinates (UV), but for 3 and even 4: glTexCoord3f and glTexCoord4f.
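
To make the "interpolate and divide at each pixel" part concrete, here is a minimal plain-Ruby sketch (no SketchUp or OpenGL involved). The helper names `lerp`, `projective_uv` and `affine_uv` and the sample coordinates are made up for illustration. It only shows why carrying the third component through the interpolation gives a different result from dividing first; it is not a description of what SU does internally.

```ruby
# Linearly interpolate between two arrays of equal length.
def lerp(a, b, t)
  a.zip(b).map { |x, y| x + (y - x) * t }
end

# Projective: interpolate (u, v, q) first, then divide by q.
def projective_uv(uvq_a, uvq_b, t)
  u, v, q = lerp(uvq_a, uvq_b, t)
  [u / q, v / q]
end

# Affine: divide at the end points first, then interpolate (u, v).
def affine_uv(uvq_a, uvq_b, t)
  a = [uvq_a[0] / uvq_a[2], uvq_a[1] / uvq_a[2]]
  b = [uvq_b[0] / uvq_b[2], uvq_b[1] / uvq_b[2]]
  lerp(a, b, t)
end

# Hypothetical end points. After the divide, both methods agree at the
# end points themselves: (0, 0) and (1, 0).
uvq_a = [0.0, 0.0, 1.0]
uvq_b = [2.0, 0.0, 2.0]

p projective_uv(uvq_a, uvq_b, 0.5) # halfway point, divide last
p affine_uv(uvq_a, uvq_b, 0.5)     # halfway point, divide first
```

The two halfway results differ even though the end points match, which is the kind of mismatch you would expect when an application drops the third coordinate.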

@unknownuser said:

I have never received false negatives by checking the 1.0 with enough precision.

Really? That's good to know. That's a much faster test.

As far as why some projected textures aren't distorted, I think Al and thomthom are both right, depending on the circumstances. It could be that the texture is not truly 'distorted', as Al suggests; it could be that the level of subdivision is high enough that you just don't see the distortion, like thomthom said.

And Tomasz hit it right on the head as far as using tw and uvhelper; you have to make sure you are using a tw 'loaded' with the face in question, before using it in the uvhelper.

thomthom, you asked about how unique textures are made. I don't have the exact code, but here are a couple of places to start:

TextureWriter.write( face, [true - front face / false - back face], filename)

Face.get_UVHelper( front_face_coords, back_face_coords, texture_writer)

UVHelper.get_front_UVQ / get_back_UVQ

I think you can actually use this on every single face, even non-distorted, but you'll likely overwrite the same texture over and over and over, which is slow to say the least. So you'll want some management to track when to write a new image.
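
As a rough idea of the kind of management I mean, here is a minimal plain-Ruby sketch. The class `TextureExportTracker`, its method names and the file naming scheme are all hypothetical, and the face id is just whatever unique number you have for the face. It only decides *whether* an image needs writing; the actual texture writer call would go where the `puts` is.

```ruby
require 'set'

# Hypothetical helper: remembers which image files were already written.
class TextureExportTracker
  def initialize
    @written = Set.new
  end

  # Undistorted faces share one image per material; distorted faces get
  # their own image, keyed by a caller-supplied face id.
  def filename_for(material_name, face_id, distorted)
    distorted ? "#{material_name}_face#{face_id}.png" : "#{material_name}.png"
  end

  # True only the first time a filename is seen, i.e. when the caller
  # should actually write the image.
  def needs_write?(filename)
    !@written.add?(filename).nil?
  end
end

tracker = TextureExportTracker.new
# Made-up sample data: [material name, face id, distorted?]
faces = [
  ['brick', 1, false],
  ['brick', 2, false],
  ['brick', 3, true]
]
faces.each do |material, id, distorted|
  name = tracker.filename_for(material, id, distorted)
  # Inside SketchUp, the texture writer call would replace this puts.
  puts "write #{name}" if tracker.needs_write?(name)
end
```

With this, the two undistorted brick faces cause a single write, and only the distorted face gets an image of its own.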

@thomthom said:

I'm still wondering if this is the only way though. Or maybe the SU way simply isn't compatible with the UV co-ordinates of most other apps. How does other 3D applications map textures? Generally.

My suspicion is that it is the only way. I think most applications use the barycentric-on-a-triangle method because it's very fast. You can precalculate almost all the information, so for any point within the triangle, it's a simple and fast function to translate a 3D point into a UV image coordinate. Is there an app out there that actually uses UVQ instead of UV, potentially supporting distorted textures? No idea.
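
For reference, this is roughly what the barycentric lookup looks like, as a minimal plain-Ruby sketch. The function names and sample triangle are mine, and I've assumed the point and the triangle are already expressed in 2D (in the triangle's own plane) to keep it short; a real renderer would work from 3D points and cache the per-triangle terms.

```ruby
# Barycentric weights of 2D point p inside triangle (a, b, c).
def barycentric_weights(p, a, b, c)
  # det depends only on the triangle, so it can be precalculated once.
  det = ((b[1] - c[1]) * (a[0] - c[0]) + (c[0] - b[0]) * (a[1] - c[1])).to_f
  w_a = ((b[1] - c[1]) * (p[0] - c[0]) + (c[0] - b[0]) * (p[1] - c[1])) / det
  w_b = ((c[1] - a[1]) * (p[0] - c[0]) + (a[0] - c[0]) * (p[1] - c[1])) / det
  [w_a, w_b, 1.0 - w_a - w_b]
end

# Blend the three corner UVs with the weights of point p.
def interpolate_uv(p, triangle, uvs)
  weights = barycentric_weights(p, *triangle)
  u = 0.0
  v = 0.0
  weights.each_with_index do |w, i|
    u += w * uvs[i][0]
    v += w * uvs[i][1]
  end
  [u, v]
end

# Made-up triangle and corner UVs.
triangle = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
uvs      = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
p interpolate_uv([0.25, 0.25], triangle, uvs)
```

Note that only three anchor points ever contribute, which is why this approach can't reproduce a mapping that needs a fourth.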

In my opinion, Whaat makes a pretty good explanation. The uv_helper / texture_writer combo is the key. The texture writer is used to produce the pre-distorted texture and the uv_helper returns UV coordinates that are specific to mapping that texture to the face. These are (potentially) different coordinates from the ones you receive directly from the PolygonMesh. I don't know the code off hand, but I do know that Tomasz has been doing this for quite some time; he may have been the first.

As for detecting when textures are distorted... I agree that you will very often see 'z' or 'q' (UVQ) values that are not 1.0, but I think you can get false negatives that way. Meaning, you can have distorted textures where every Q is exactly 1. (This is speculation only.)
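
The Q test itself is tiny. Here is a minimal plain-Ruby sketch; the function name, the tolerance and the sample triples are all assumptions of mine. In SketchUp the triples would come from the UVQ values you get back for each vertex. A `true` here means distortion is likely; going by the speculation above, a `false` may not prove the opposite.

```ruby
# Returns true when any (u, v, q) triple has a Q that differs from 1.0
# by more than the tolerance. The default tolerance is an arbitrary pick.
def q_suggests_distortion?(uvqs, tolerance = 1.0e-6)
  uvqs.any? { |_u, _v, q| (q - 1.0).abs > tolerance }
end

# Made-up sample data, one (u, v, q) triple per vertex.
uvqs_plain  = [[0.0, 0.0, 1.0], [1.0, 0.0, 1.0], [1.0, 1.0, 1.0]]
uvqs_warped = [[0.0, 0.0, 1.0], [1.5, 0.0, 1.5], [1.0, 1.0, 1.0]]

puts q_suggests_distortion?(uvqs_plain)
puts q_suggests_distortion?(uvqs_warped)
```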

@thomthom said:

If you write out unique textures for every face, then don't you have to discard the UV data from SU? Because the unique texture would come out regular, but the one SU refers to with its UV data is distorted. Or am I misunderstanding something.

I think you've got it dead on. The uv_helper and texture_writer work together; one produces the new UV, the other produces the image. This doesn't affect the existing scene in any way. The uv_helper produces UV values that match the pre-distorted image, but they don't change the existing SU model. So the resulting image is unusable in the original model; the image no longer matches up with the original UV. You could replace the existing UV and the existing texture, but I think what you've basically done then is recreate the "Make Unique" function.

I didn't see an actual answer in Al's post. Maybe I just didn't understand it?

@Jeff: Maybe because everything is already triangles, the UV coordinates that SU generates are 'compatible' with the traditional triangular UV techniques employed by other applications. So what you see in SU, is what you get in the render. Of course, it could just be how UVTools does the sphere mapping that makes it more compatible?

@richcat said:

IRendernxt straight render in sketchup, you'll have to ask Al how?

You are more than welcome to ask. I won't be surprised if they use the same "pre-distorted" image technique that the SU exporters use.

http://en.wikipedia.org/wiki/Barycentric_coordinates_%28mathematics%29

Some thoughts, maybe. Barycentric coordinates on a triangle are a very common application. But check out barycentric coordinates on a tetrahedron (barycentric in 3D). Maybe that's how SU uses it?

@thomthom said:

So question is: how can SU's data be converted into a format that most renderer's and external program uses?

Given that all of SU's built-in export methods use the "pre-distorted", unique texture method, my guess is that it can't be done (unless the renderer itself supports the same interpolation technique).

@thomthom said:

It only creates a unique texture for the distorted? Not for the others?

An export to Collada for the entire scene produces 1 undistorted texture and 1 distorted texture.

@frv said:

Maxwell renders distorted textures just fine.

Francois

Maxwell can render SU files directly? Or does it import .obj, .3ds, .dae? Because if it just imports, it's as was discussed above: SU produces "pre-distorted" images. The renderer is just using the pre-distorted image, which any renderer can do.

I just tried an export with Collada and was amazed that, despite the fact that the UV coordinates were very simple, it somehow was distorting it correctly.

Well, turns out, the export produces an output texture that is "pre-distorted"

Is that what the .3ds export does? That may be the only way to do it, to distort the texture image rather than have the render app try to reproduce the mapping technique...

Huh. The .3ds format only supports triangles (I think), so there is a way to represent the texture interpolation used by SU using only 3 UV coordinates (and the .3ds export knows it!)

This is definitely a curious problem. Here are some thoughts on it.

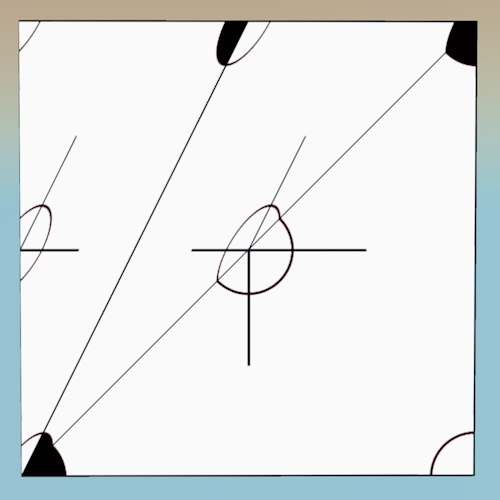

This is an image from Twilight with the edge line drawn where Twilight triangulates the quad. It's quite obvious that the distortion moves in different directions on either side of the split. But what's important to notice is that the exact corners are not 'wrong'. As I'm sure you know, texture is mapped via UV, and those UV coordinates exist only where there are geometric vertices. They are basically anchor points for the texture, and here, the anchor points are correct. What is wrong is how the texture is interpolated between anchor points.

I think the difference is that SU uses a kind of 'quad' interpolation for textures, using four anchor points (even when the face is 3 or 5 or whatever sides), whereas Twilight, and probably many other applications, interpolates textures using only 3 anchor points because the math is fast (which makes for faster renders!). If so, this makes it a fairly fundamental difference that will be difficult to correct for.
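
A minimal plain-Ruby sketch of that idea, with made-up corner UVs and helper names. I'm using plain bilinear interpolation as a stand-in for "some scheme that uses all four anchor points"; I'm not claiming that is what SU actually does. The center of the quad lies on either diagonal, so after triangulation only the two anchors on the shared edge contribute there.

```ruby
# Linear interpolation between two UV pairs.
def lerp2(a, b, t)
  [a[0] + (b[0] - a[0]) * t, a[1] + (b[1] - a[1]) * t]
end

# Four-anchor (bilinear) interpolation across the quad.
# Corners are bottom-left, bottom-right, top-right, top-left.
def bilinear_uv(bl, br, tr, tl, s, t)
  lerp2(lerp2(bl, br, s), lerp2(tl, tr, s), t)
end

# Center of the quad after splitting it along one diagonal: the point
# sits on the shared edge, so only that edge's two anchors matter.
def split_center_uv(diag_start, diag_end)
  lerp2(diag_start, diag_end, 0.5)
end

# Hypothetical distorted mapping: the texture narrows toward the top.
bl = [0.0, 0.0]
br = [1.0, 0.0]
tr = [0.75, 1.0]
tl = [0.25, 1.0]

p bilinear_uv(bl, br, tr, tl, 0.5, 0.5) # all four anchors
p split_center_uv(bl, tr)               # split along bl-tr
p split_center_uv(br, tl)               # split along br-tl
```

All three agree at the corners, but at the center each gives a different UV, and the triangulated result even depends on which diagonal is used for the split.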

What surprises me the most is that SU exports, at least to .3ds, in such a way that it's interpreted correctly in Vue. Is it Vue, or is it the export process? I'd be curious to see an export to .3ds rendered in another app, like Kerkythea.

Anyway, I could be mistaken about the whole thing, but it seems a possible scenario.

Is there a way to embed youtube videos?

http://www.youtube.com/watch/v/bpaZjdIZEW4

Fixed by solo

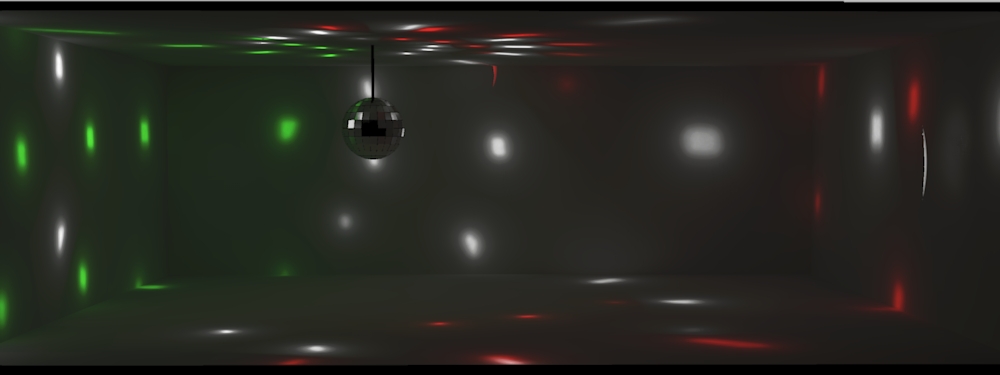

Well, I'll throw out the first image.

I used four lights, and didn't target the center of the ball, but somewhat off-axis. PM-FG rendering in Twilight (High+).

A tip for Twilight users: In order to capture the light bouncing off the ball, you need to have Caustics enabled. The Low, Low+, Medium, and Medium+ settings use pseudo-caustics, an approximation of caustics. That won't work for this scene. You'll need to use High or High+, or the Interior + progressive renders (the Exterior progressive doesn't use full caustics, and Interior (no plus) doesn't produce caustics from spotlights or pointlights).

That's a really neat concept. I hope to see what you make of it. Definitely a lot of vegetation, some water, mixed with some ultra-modern black and chrome, that would be cool.