Any SU render engines that render distorted textures?

-

@unknownuser said:

why are these working?

i was under the impression (and i'm almost positive i read this from whaat) that projected textures won't render properly..

are these not distorted?

there's only one texture in the model and no exporting of individual faces happened

It is quite possible that these images are not actually "distorted". There are four handles which can be used to position textures (these are explained at the beginning of this thread). Three of them move, stretch, rotate and skew the image, and most normal UV mappings consist of only these four operations. Many strange-looking mappings are only stretched and skewed. Only the upper-right handle "distorts" the image. A distorted image cannot be easily defined by four UVQ values at the corners of the face. ("Distort" is a term we use to mean "even worse than skewed", so many images which seem distorted to us are only stretched and skewed.) (Remember that normal UVQ mapping can be used to display most of the projections of images onto spheres and curved surfaces that we see in most modeling and rendering packages.)

Instead, SketchUp performs a more complicated mapping of the image, saves an already distorted image to disk for us, and we can then map that image to the face with default UVQ values.
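To make the move/stretch/rotate/skew versus "distort" distinction concrete, here is a minimal sketch that models texture placement as a 3x3 matrix acting on (u, v, 1). This is an illustrative model, not SketchUp's internal code: the `map_uvq` helper and both matrices are hypothetical. Move, stretch, rotate and skew keep the bottom row at [0, 0, 1], so Q stays 1.0 everywhere; a perspective-style distortion changes the bottom row, and Q then varies across the face.

```ruby
# Hypothetical helper: map a texture coordinate (u, v) through a 3x3 matrix h,
# returning the homogeneous triple [u', v', q].
def map_uvq(h, u, v)
  row = ->(r) { h[r][0] * u + h[r][1] * v + h[r][2] }
  [row.(0), row.(1), row.(2)]
end

# Stretch + skew + move: an affine placement, bottom row [0, 0, 1].
affine = [
  [2.0, 0.5, 0.25],
  [0.0, 1.5, 0.10],
  [0.0, 0.0, 1.00]
]

# The same placement plus a perspective term in the bottom row: a "distort".
distort = [
  [2.0, 0.5, 0.25],
  [0.0, 1.5, 0.10],
  [0.3, 0.0, 1.00]
]

corners = [[0, 0], [1, 0], [1, 1], [0, 1]]
corners.each do |u, v|
  qa = map_uvq(affine, u, v)[2]
  qd = map_uvq(distort, u, v)[2]
  puts format("corner (%d,%d): affine q=%.2f, distorted q=%.2f", u, v, qa, qd)
end
```

In this model the affine matrix prints q=1.00 at every corner, while the distorted one gives 1.30 on the right-hand edge, which is the kind of Q value that only the "distort" handle produces.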

-

why are these working?

i was under the impression (and i'm almost positive i read this from whaat) that projected textures won't render properly..

are these not distorted?

there's only one texture in the model and no exporting of individual faces happened

[i'm pretty sure i projected these in the normal sense of projecting a SU texture:

import the file as an image, line it up with the object, explode the image, then with the Paint Bucket, hold the Cmd key (mac) to sample the imported texture and paint it on the object i want the image projected onto]

[EDIT] nvm about what i thought whaat said.. i was thinking about this thread i read a while ago

http://www.indigorenderer.com/forum/viewtopic.php?f=17&t=7036

the dude called it a projected texture, but i downloaded the model and it was in fact distorted (as dale said)

[edit2] well, i dunno.. he did say skewed via projection in there

-

Al... I really don't get this or any of your explanations...

What I can see is that your application is also making the texture unique...

Your application and also Hypershot does the same as everyone else...

They just export a separate texture for each distorted surface...

-

@al hart said:

It is quite possible that these images are not actually "distorted".

yeah Al, i think you're right.

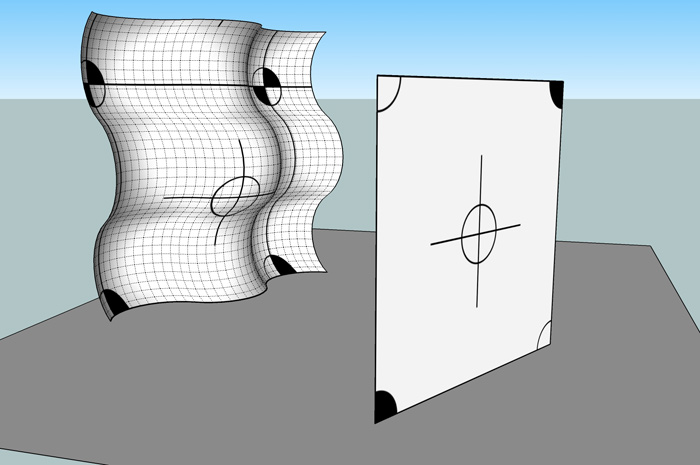

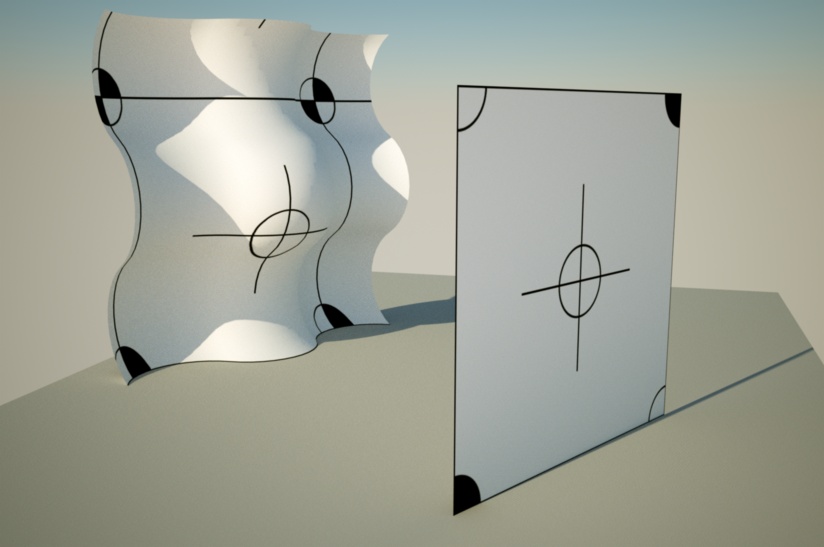

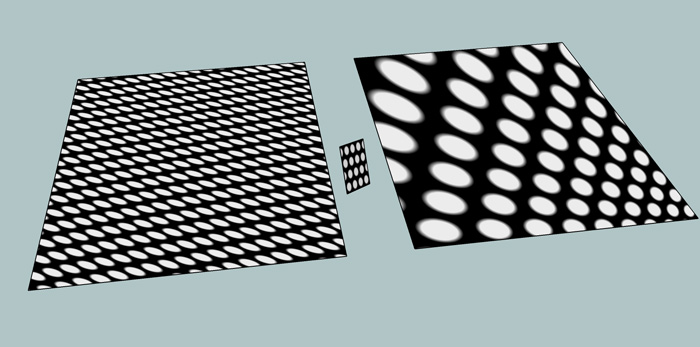

i tried setting up a texture to be projected onto a large flat plane coming in from a weird angle.. the resulting projection should be distorted, but instead it hits, then tiles itself...

for the attached picture, i projected onto the left surface from the little square in the middle, assuming it would give results similar to the square on the right (which is distorted), but it doesn't work out that way..

-

Thea Exporter deals with the sample well.

-

Tomasz - are you sure that the distorted texture isn't being exported as a separate (unique) texture...??

-

@chris_at_twilight said:

As for detecting when textures are distorted... I agree that you will very often see 'z' or 'q' (UVQ) values that are not 1.0, but I think you can get false negatives that way, meaning you can have distorted textures where Q is still exactly 1. (This is speculation only)

I have never received false negatives by checking against 1.0 with enough precision. The posted TheaExporter sample uses TextureWriter and UVHelper, which work in tandem. The TextureWriter will create an additional ('virtual' unique) texture only when necessary. Sometimes the TW can create just a single 'virtual' unique texture for two faces that are parallel to each other and share the same distorted texture.

I have spent many hours trying to understand how the TW works. There is no point in writing every single face to the TW, because it takes a lot of time. I first check whether a texture is distorted (Q != 1.0):

`if ((uvq.z.to_f)*100000).round != 100000`

If it is, the face goes to the TW, which usually leads to the creation of a unique texture. If it doesn't, one has to read the 'handle' returned by the TW, which points to the position of the texture in the TW 'stack'.
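Tomasz's one-line test can be wrapped in a small predicate. This is a minimal sketch, assuming the UVQ triple is passed as a plain `[x, y, z]` array so it runs outside SketchUp; inside SketchUp it would be the `Point3d` returned by `UVHelper#get_front_UVQ`, and you would read `uvq.z`. The names `distorted_uvq?` and `samples` are hypothetical, and `samples` holds one UVQ per face only for brevity.

```ruby
# Returns true when Q differs from 1.0, compared to five decimal places as in
# the original test, so floating-point noise such as 0.9999999 is not flagged.
def distorted_uvq?(uvq)
  z = uvq[2].to_f
  (z * 100_000).round != 100_000
end

puts distorted_uvq?([0.25, 0.75, 1.0])        # => false (plain UV mapping)
puts distorted_uvq?([0.25, 0.75, 0.9999999])  # => false (rounding noise)
puts distorted_uvq?([0.31, 0.92, 1.2])        # => true  (distorted)

# Hypothetical per-face samples: only faces whose Q differs from 1.0
# would be sent to the TextureWriter.
samples = { "wall" => [0.2, 0.4, 1.0], "floor" => [0.5, 0.1, 1.35] }
needs_tw = samples.select { |_name, uvq| distorted_uvq?(uvq) }.keys
p needs_tw  # => ["floor"]
```

The rounding step is what gives the "enough precision" Tomasz mentions: a plain `!= 1.0` comparison would also flag faces whose Q is 1.0 up to floating-point error.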

It would be interesting to learn how the SU developers use the additional Q coordinate to add perspective distortion to a texture. I have done a search and haven't found any other software using this type of projection. I hope Google staff will share the trick with authors of rendering engines, so that using the TW, which creates additional textures (== materials in a renderer), won't be necessary.

I have also implemented a method without UVHelper using `uv=[uvq.x/uvq.z,uvq.y/uvq.z]`, but the key is actually to learn how SU applies the projection. As far as I am aware, no rendering software uses UVQ.
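The `uvq.x/uvq.z, uvq.y/uvq.z` expression is a perspective divide from homogeneous UVQ to ordinary UV. A minimal sketch, with a plain array standing in for SketchUp's `Point3d`; the `uvq_to_uv` name is hypothetical, and the zero check is an added safeguard, not part of Tomasz's code.

```ruby
# Convert a homogeneous [x, y, z] (UVQ) triple to a plain [u, v] pair.
def uvq_to_uv(uvq)
  x, y, z = uvq.map(&:to_f)
  raise ArgumentError, "Q must be non-zero" if z.zero?
  [x / z, y / z]
end

p uvq_to_uv([0.5, 0.25, 1.0])  # => [0.5, 0.25]  (Q = 1: UV unchanged)
p uvq_to_uv([1.0, 0.5, 2.0])   # => [0.5, 0.25]
```

Note that the divide only gives correct values at the vertices. A renderer that interpolates these per-vertex UVs linearly across the face reproduces SketchUp's mapping exactly only when Q is the same at every vertex of that face.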

@frederik said:

Tomasz - are you sure that the distorted texture isn't being exported as a separate *(unique)* texture...??

The method uses the TW, which creates 'a second texture' == an additional 'Thea material'. I have not found a model so far that gives me a wrong projection. Unfortunately, the method forces the creation of multiple Thea materials.

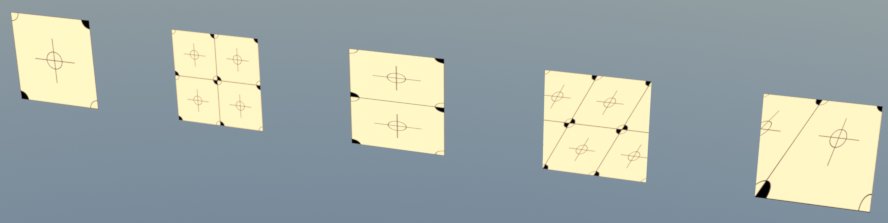

EDIT: I modified Thomas' test model. I added two things: a group with a face painted with the default material, and a second face with a distorted texture which will have the same handle in the TextureWriter as the one right below.

-

@unknownuser said:

why are these working?

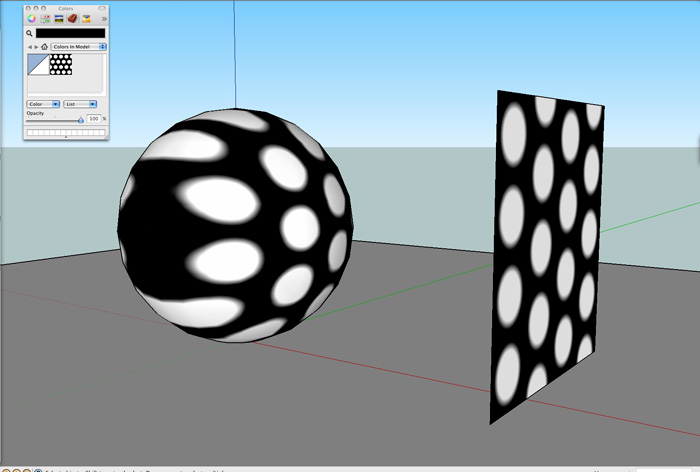

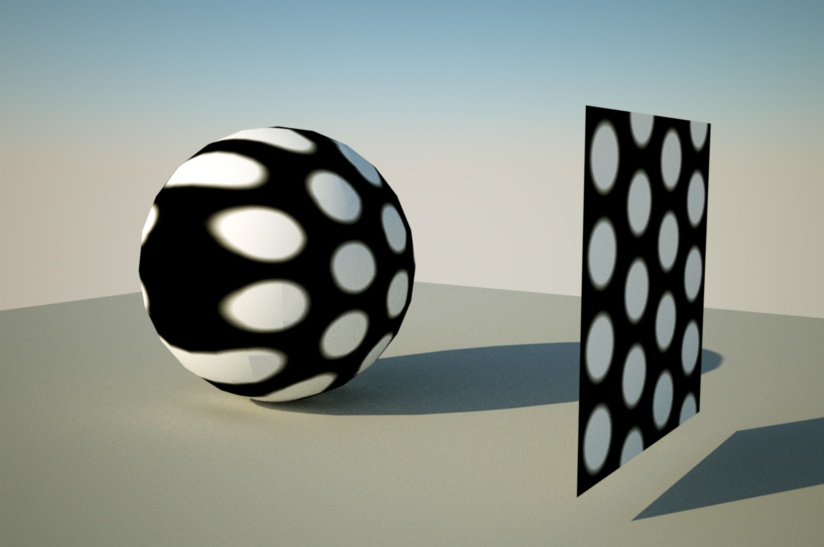

Maybe your curved surface is subdivided into so many smaller faces that the deviation isn't visually noticeable?
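This guess can be checked with a small one-dimensional model. A minimal sketch, assuming a renderer that only receives plain per-vertex UV (u divided by q) and interpolates it linearly; `exact_u` and `max_error` are hypothetical helpers, and a single edge stands in for a whole face. Splitting the edge into more segments puts exact values at more vertices, and the worst-case error shrinks quickly.

```ruby
# Exact texture coordinate at parameter t along an edge whose endpoints carry
# homogeneous coordinates (u0, q0) and (u1, q1): interpolate u and q, then divide.
def exact_u(u0, q0, u1, q1, t)
  ((1 - t) * u0 + t * u1) / ((1 - t) * q0 + t * q1)
end

# Worst deviation when the edge is split into `segments` equal pieces, each new
# vertex gets the exact U, and U is interpolated linearly between vertices.
def max_error(u0, q0, u1, q1, segments, samples_per_segment = 50)
  worst = 0.0
  segments.times do |k|
    ta = k.to_f / segments
    tb = (k + 1).to_f / segments
    ua = exact_u(u0, q0, u1, q1, ta)
    ub = exact_u(u0, q0, u1, q1, tb)
    (0..samples_per_segment).each do |i|
      s = i.to_f / samples_per_segment
      t = ta + s * (tb - ta)
      linear = (1 - s) * ua + s * ub
      worst = [worst, (exact_u(u0, q0, u1, q1, t) - linear).abs].max
    end
  end
  worst
end

# Q goes from 1.0 to 2.0 along the edge (a distorted mapping).
[1, 4, 16].each do |n|
  puts format("%2d segment(s): max U error %.5f", n, max_error(0.0, 1.0, 1.0, 2.0, n))
end
```

When Q is equal at both ends the linear result is already exact, so the error only appears for distorted mappings, and dense subdivision can hide it.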

-

Tomasz: When you discover a distorted texture (from the Q value) and use the TW to write out a unique material:

- What UV values do you send to your renderer?

- If the render material has a bumpmap applied to it - how is that handled? Won't you end up with mismatching bump?

-

@unknownuser said:

The method uses the TW, which creates 'a second texture' == an additional 'Thea material'.

Yeah - that's what I thought...

This is - again - how everyone else is able to handle it...

However, I believe thomthom is trying to find out if this can be handled differently...

@thomthom said:

Yea, Make Unique generates a new texture and gives the face new UV co-ordinates.

What I'm trying to find out, though, is whether there are any renderers that actually handle this. More importantly, I'm interested in *how* they handle it.

IMHO, I hardly ever see distorted textures used, so it has never been a great issue, certainly not a dealbreaker...

-

@thomthom said:

- What UV values do you send to your renderer?

When all textures have been loaded into the TW, I use:

`uvHelper=face.get_UVHelper(true, false, tw)`

`point_pos=mesh.point_at(p).transform!(trans.inverse)` (trans is the 'nested transformation' of the parent; thanks to Stefan Jaensch for figuring out that this trans has to be inverted and applied here)

`uv=uvHelper.get_front_UVQ(point_pos)`

@thomthom said:

- If the render material has a bumpmap applied to it - how is that handled? Won't you end up with mismatching bump?

I use the same UVs. I assume that the bumpmap is the same size as the main texture.
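Pulling the calls from this post together, a per-face UV export might look roughly like the sketch below. It is a hedged reconstruction, not Tomasz's exporter: `front_uvs` and its `mesh_flags` default are hypothetical, and `PolygonMesh#count_points` is an API call not mentioned in the thread, so check it against your SketchUp version. It assumes any distorted face has already been loaded into `tw` (the UVs only become relative to the TW's unique texture after that), and it only does real work inside SketchUp; elsewhere you need stand-in objects.

```ruby
# Hypothetical helper assembling the calls quoted above.
# face:  a Sketchup::Face, already loaded into tw if its texture is distorted
# tw:    the TextureWriter passed to Face#get_UVHelper
# trans: the parent's nested transformation, as in the post (optional here)
def front_uvs(face, tw, trans = nil, mesh_flags = 0)
  # Assumption: flag 0 asks Face#mesh for points only, which is all the
  # UVHelper needs here; the post does not say which flags were used.
  mesh = face.mesh(mesh_flags)
  helper = face.get_UVHelper(true, false, tw)  # front side only, TW-aware
  (1..mesh.count_points).map do |p|            # PolygonMesh indices start at 1
    point = mesh.point_at(p)
    point = point.transform!(trans.inverse) if trans
    uvq = helper.get_front_UVQ(point)
    [uvq.x / uvq.z, uvq.y / uvq.z]             # perspective divide by Q
  end
end
```

The same UV list would then be used for both the diffuse texture and, per Tomasz's assumption, a same-sized bump map.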

-

@unknownuser said:

@thomthom said:

- What UV values do you send to your renderer?

When all textures have been loaded into the TW, I use:

`uvHelper=face.get_UVHelper(true, false, tw)`

`point_pos=mesh.point_at(p).transform!(trans.inverse)` (trans is the 'nested transformation' of the parent; thanks to Stefan Jaensch for figuring out that this trans has to be inverted and applied here)

`uv=uvHelper.get_front_UVQ(point_pos)`

@thomthom said:

- If the render material has a bumpmap applied to it - how is that handled? Won't you end up with mismatching bump?

I use the same UVs. I assume that the bumpmap is the same size as the main texture.

Where does the `p` variable come from?

-

@thomthom said:

Where does the `p` variable come from?

It is an index (starting from 1) of a point in a PolygonMesh. You get the PolygonMesh from `face.mesh #`. You can probably get the point position in another way.

-

Also, why don't you use `uv = mesh.uv_at(p, true)`? It appears it would give the same result.

-

If you use my Probes plugin: http://forums.sketchucation.com/viewtopic.php?f=323&t=21472#p180592

Press Tab to see raw UVQ data.

By default it will use `UVHelper` to get the UV data.

Press F2 to make it sample the data from the `PolygonMesh`. From my testing, the data never differs.

`UVHelper` seems to be made to sample UV data from a `Face`.

But if you have a `PolygonMesh` of the `Face`, it includes the UV data (provided you asked for that when you used `face.mesh`).

-

@thomthom said:

Also, why don't you use `uv = mesh.uv_at(p, true)`? It appears it would give the same result.

I simply use UVHelper because it seems to be designed for this task. What would be its purpose otherwise?

I am not so sure it would give the same result for two distorted faces sharing the same distorted texture (the last pair in the modified skp test scene).

BTW, I have found that there is some memory leak when using UVHelper; at least those objects are not being cleaned up properly by the garbage collector. In my exporter I stay away from UVHelper as long as I can. In all other scenarios I read UV coordinates using `uv_at`.

-

@thomthom said:

If you use my Probes plugin: http://forums.sketchucation.com/viewtopic.php?f=323&t=21472#p180592

Press Tab to see raw UVQ data.

By default it will use `UVHelper` to get the UV data.

Press F2 to make it sample the data from the `PolygonMesh`. From my testing, the data never differs.

`UVHelper` seems to be made to sample UV data from a `Face`.

But if you have a `PolygonMesh` of the `Face`, it includes the UV data (provided you asked for that when you used `face.mesh`).

Ok. Try this: just load a face with a distorted texture into the TW, and you will see the difference if you use the same TW to get the UVs from the UVHelper.

What you have written is true as long as you use a blank TW. It gives coordinates relative to the original 'picture', but when you load a face into the TW, it 'makes it unique inside the TW', so the UVs change.

It looks like this thread would fit better in the new developers section.

-

@unknownuser said:

I have never received false negatives by checking the 1.0 with enough precision.

Really? That's good to know. That's a much faster test.

As for why some projected textures aren't distorted, I think Al and thomthom are both right, given the respective circumstances. It could be that the texture is not truly 'distorted', as Al suggests; or it could be that the level of subdivision is high enough that you just don't see the distortion, as thomthom said.

And Tomasz hit it right on the head as far as using the TW and UVHelper goes: you have to make sure you are using a TW 'loaded' with the face in question before using it in the UVHelper.

-

@unknownuser said:

if you use the same TW to get UVs from the UVHelper.

Use a TextureWriter to get UV data?

-

@thomthom said:

@unknownuser said:

if you use the same TW to get UVs from the UVHelper.

Use a TextureWriter to get UV data?

It is the required parameter:

uvHelper=face.get_UVHelper(true, false, **tw**)
