Any SU render engines that render distorted textures?

-

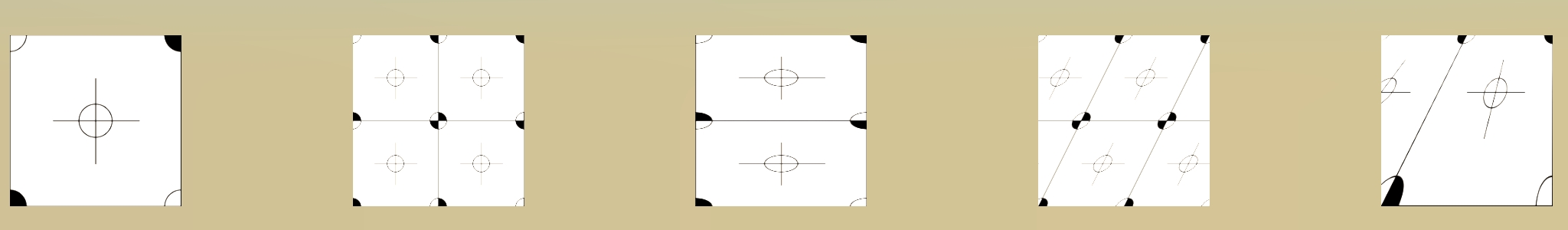

I just tried an export with Collada and was amazed that, despite the very simple UV coordinates, the texture somehow came out distorted correctly.

Well, it turns out the export produces an output texture that is "pre-distorted".

Is that what the .3ds export does? That may be the only way to do it, to distort the texture image rather than have the render app try to reproduce the mapping technique...

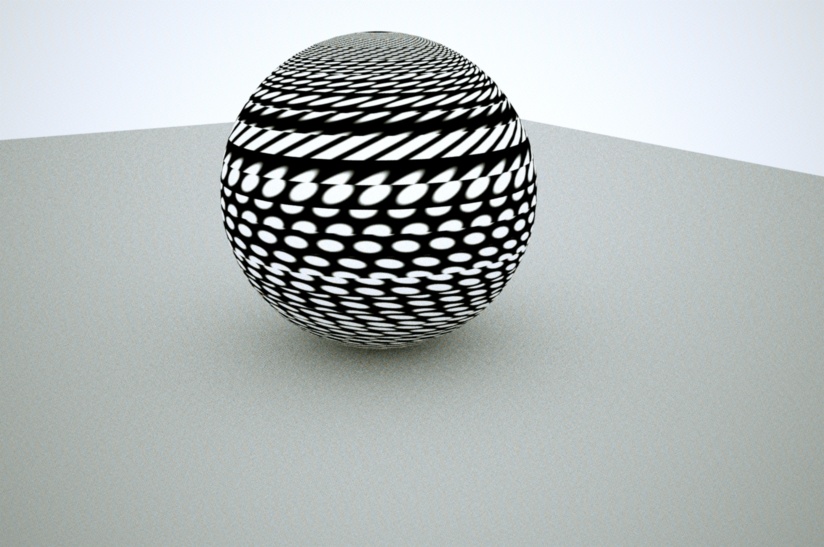

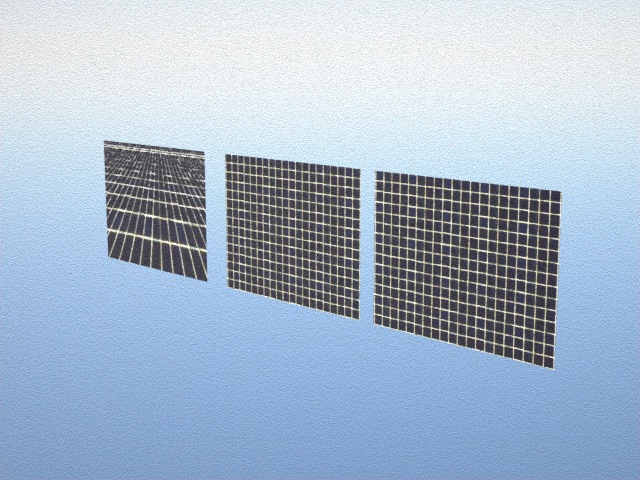

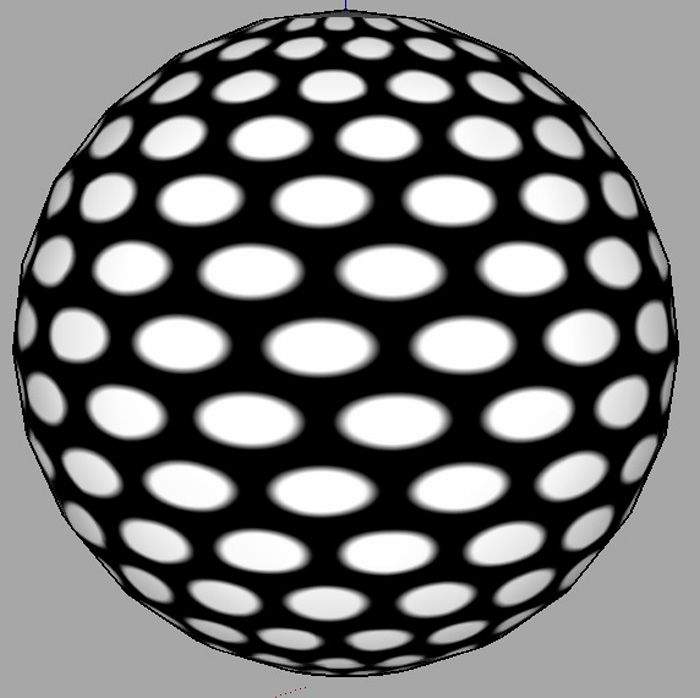

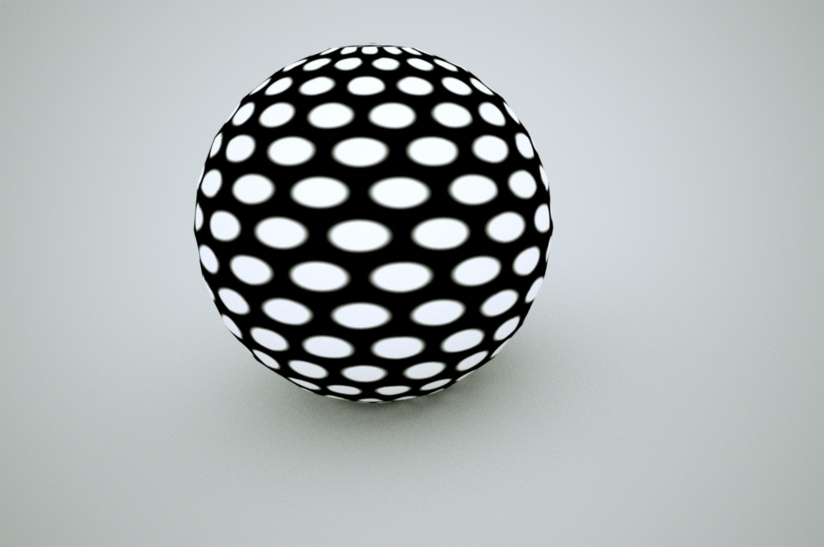

another quick experiment... (indigo)

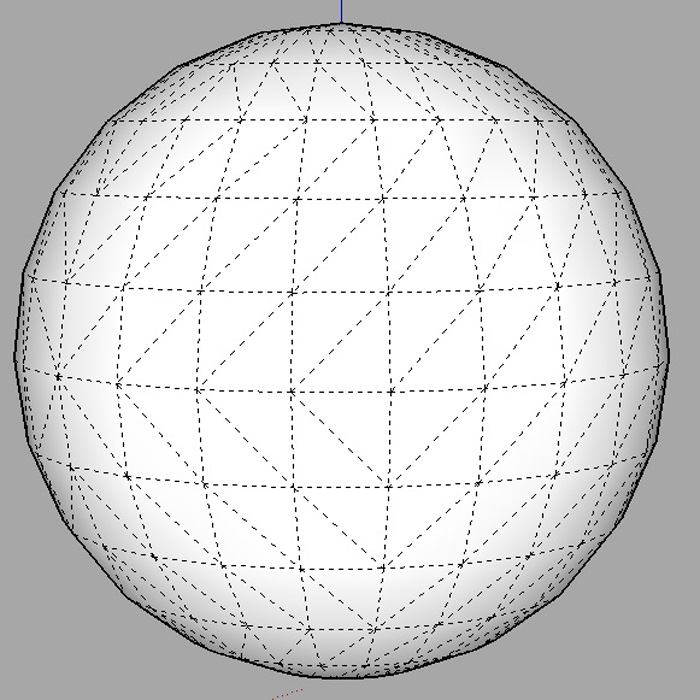

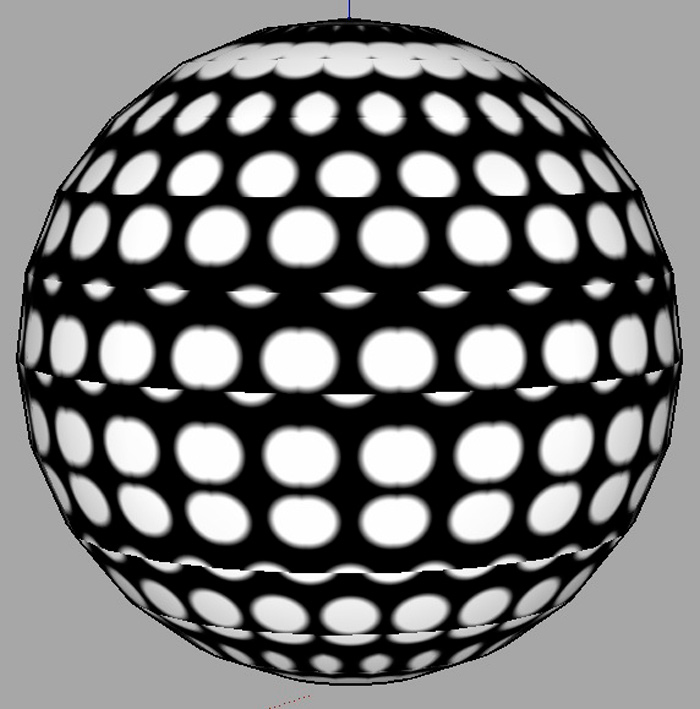

i made a sphere with quads, added a texture and used UVtools spherical mapping...

typical results when trying to render...

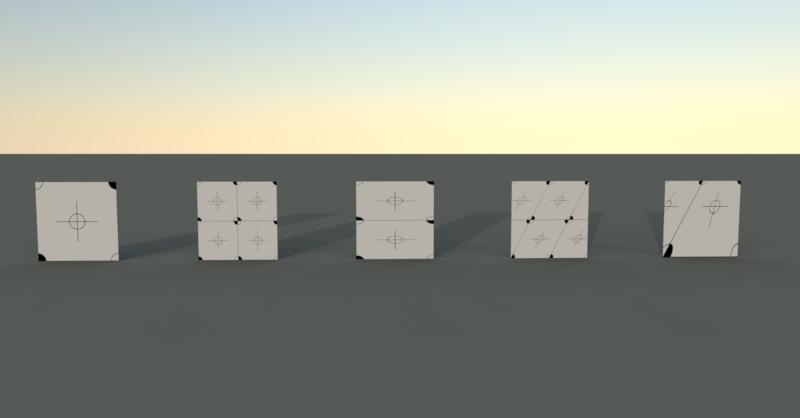

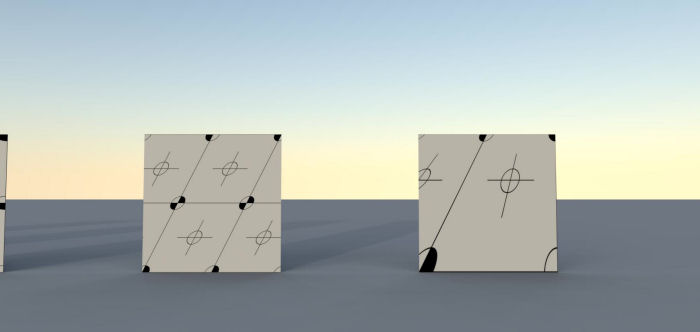

i then exported the sphere as a 3ds file and was wondering why it was taking so long.. well, 1100 separate jpgs were being exported to my desktop

(used two 48 segment circles for the sphere)

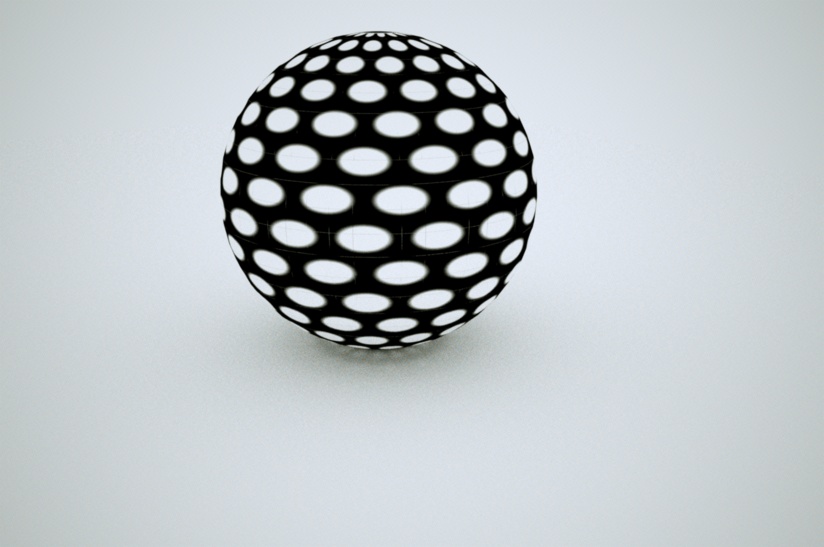

when i brought it back into sketchup, you could see the individual jpgs and you can see it in the indigo render as well.. (but it did keep the mapping correct)

.

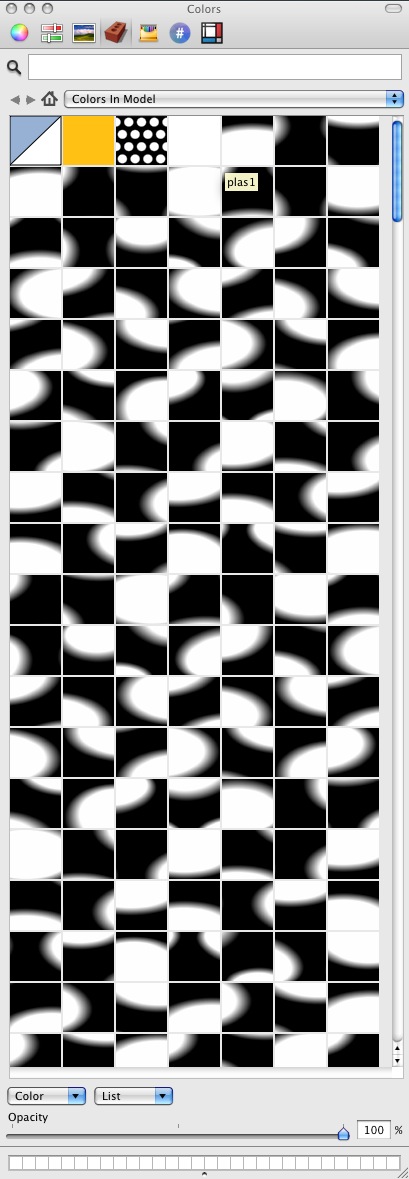

so i guess 3ds exporting is just making everything individual textures.. ?

here's the material browser of the sphere after i brought the 3ds back in (note the scroll bar on the right side.. that's a very long texture list)

-

@thomthom said:

I see. So Vue doesn't read the Su data directly...

What does Hypershot do?

There is a menu entry "Export to HyperShot" to open the scene inside HyperShot. In HyperShot's installation folder there is an executable XXXtoObj.exe for every supported file format, so it converts everything to obj before rendering.

-

@chris_at_twilight said:

I think the difference is that SU uses a kind of 'quad' interpolation for textures, using four anchor points (even when the face is 3 or 5 or whatever sides), whereas Twilight, and probably many other applications, interpolates textures using only 3 anchor points because the math is fast (which makes for faster renders!). If so, this makes it a fairly fundamental difference that will be difficult to correct for.

Yes. This is what it seems to me as well from trying to make UV tools in SU. I found taking UV from vertices failed when it came to triangles and distorted textures.

I had to sample four points on the face's plane in order to get a correct data set.
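To illustrate why four samples work where three are not enough, here is a minimal Python sketch. It assumes (this is not confirmed anywhere in the thread) that SU's distorted mapping behaves like a projective transform, which has eight unknowns and therefore needs four point pairs; a fit through three points can only be affine. All function names and sample coordinates are made up for illustration, and none of this is SketchUp API code.

```python
def solve(a, b):
    """Solve a*x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        for r in range(n):
            if r != col:
                f = m[r][col]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[col])]
    return [row[n] for row in m]


def fit_projective(src, dst):
    """Fit u = (a*x + b*y + c) / w, v = (d*x + e*y + f) / w, w = g*x + h*y + 1,
    from four (x, y) -> (u, v) pairs."""
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rhs.append(u)
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        rhs.append(v)
    return solve(rows, rhs)


def apply_projective(h, x, y):
    w = h[6] * x + h[7] * y + 1.0
    return ((h[0] * x + h[1] * y + h[2]) / w,
            (h[3] * x + h[4] * y + h[5]) / w)


def fit_affine(src, dst):
    """Fit u = a*x + b*y + c, v = d*x + e*y + f from three point pairs."""
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([x, y, 1, 0, 0, 0])
        rhs.append(u)
        rows.append([0, 0, 0, x, y, 1])
        rhs.append(v)
    return solve(rows, rhs)


def apply_affine(a, x, y):
    return (a[0] * x + a[1] * y + a[2], a[3] * x + a[4] * y + a[5])


# Hypothetical data: a unit-square face whose texture is pinned to a trapezoid.
SRC = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
DST = [(0.0, 0.0), (1.0, 0.0), (0.75, 1.0), (0.25, 1.0)]

H = fit_projective(SRC, DST)        # uses all four samples
A = fit_affine(SRC[:3], DST[:3])    # uses only the first three

print("projective at 4th corner:", apply_projective(H, 0.0, 1.0))
print("affine at 4th corner:    ", apply_affine(A, 0.0, 1.0))
```

With this sample data the four-point fit reproduces every corner, while the three-point fit puts the fourth corner at about u = -0.25 instead of 0.25, which is the kind of mismatch you see in the renders.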

In SU, when you set UV mapping using `.position_material`, you don't set UV data per vertex; it only has to be UV data related to points on the face's plane. But that has led to problems when trying to use `PolygonMesh` to sample, as the `PolygonMesh` only returns UV data at each vertex, meaning that I have not found any way to get correct data from it.

So the question is: how can SU's data be converted into a format that most renderers and external programs use?

-

Important question:

If most other renderers use 3 anchor points for UV mapping, how is a distorted texture defined? How can you define a distorted texture using only three points?
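One possible answer, sketched in Python under assumptions: if each of the three vertices carries a homogeneous triple (U, V, Q) instead of a plain (u, v) pair, a renderer can blend the triples linearly across the triangle and divide by Q per sample. SketchUp's Ruby API has a `UVHelper` class that returns UVQ values, which hints at something like this, though that is my reading and not something confirmed in this thread. The mapping `uvq_at` and all numbers below are hypothetical.

```python
def uvq_at(x, y):
    """Hypothetical projective mapping: homogeneous (U, V, Q) at a face point."""
    return (x, y, 1.0 + 0.5 * y)


def mix(weights, values):
    """Barycentric blend of per-vertex tuples."""
    size = len(values[0])
    return tuple(sum(w * val[i] for w, val in zip(weights, values))
                 for i in range(size))


# A triangle in face coordinates and barycentric weights of a sample inside it.
TRI = [(0.0, 0.0), (2.0, 0.0), (0.0, 2.0)]
WEIGHTS = (0.25, 0.25, 0.5)

vertex_uvq = [uvq_at(x, y) for x, y in TRI]
vertex_uv = [(u / q, v / q) for u, v, q in vertex_uvq]

# Ground truth: evaluate the mapping directly at the sample position.
px, py = mix(WEIGHTS, TRI)
U, V, Q = uvq_at(px, py)
exact_uv = (U / Q, V / Q)

# Plain 3-point interpolation of (u, v): fast, but only affine.
affine_uv = mix(WEIGHTS, vertex_uv)

# 3-point interpolation of (U, V, Q), then one divide per sample.
U, V, Q = mix(WEIGHTS, vertex_uvq)
projective_uv = (U / Q, V / Q)

print("exact:     ", exact_uv)
print("affine:    ", affine_uv)
print("projective:", projective_uv)
```

In this toy case the UVQ route matches the exact mapping, while interpolating plain (u, v) does not. A renderer that only accepts two texture coordinates per vertex would not be able to use this, which may be why the exporters bake the distortion into the image instead.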

-

On a side note, Podium will render photomatch (distorted) textures after SU exports to 3ds and imports back.

Tried LightUp and it also does not render distorted textures correctly.

@sepo said:

On a side note, Podium will render photomatch (distorted) textures after SU exports to 3ds and imports back.

Tried LightUp and it also does not render distorted textures correctly.

That would be due to what Jeff described: http://forums.sketchucation.com/viewtopic.php?f=80&t=23947&start=15#p204562

When exported to .3ds, each face gets a unique texture, so when importing back you have lots of new textures whose mapping is no longer distorted.

I exported the model to kmz and reimported it. Interestingly, it created a unique material from the distorted image by itself, and this rendered correctly (Twilight in this case, but I guess the renderer wouldn't matter with a unique texture).

-

It only creates a unique texture for the distorted? Not for the others?

-

Maxwell renders distorted textures just fine.

Francois

-

And how does Maxwell integrate with SU?

-

Hmm.. interesting programming discussion which seems to be about a similar issue: http://old.nabble.com/Texture-map-a-polygon-td26394710.html

-

@thomthom said:

It only creates a unique texture for the distorted? Not for the others?

An export to Collada for the entire scene produces 1 undistorted texture and 1 distorted texture.

@frv said:

Maxwell renders distorted textures just fine.

Francois

Maxwell can render SU files directly? Or does it import .obj, .3ds, .dae? Because if it just imports, it's as was discussed above: SU produces "pre-distorted" images. The renderer is just using the pre-distorted image, which any renderer can.

-

@thomthom said:

So the question is: how can SU's data be converted into a format that most renderers and external programs use?

Given that all of SU's built-in export methods use the "pre-distorted", unique texture method, my guess is that it can't be done (unless the renderer itself supported the same interpolation technique).
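For what it's worth, the "pre-distorted" idea can be sketched in a few lines of Python. This is only a guess at the general technique, not SU's actual exporter: walk over the pixels of a new image laid out in plain face coordinates, push each one through the distorted mapping, and copy the source pixel found there. The mapping `keystone`, the function `bake_texture` and the tiny 4x4 "image" are all hypothetical.

```python
def bake_texture(source, uv_of, width, height):
    """Resample `source` (a list of pixel rows) into a new image whose pixel
    (i, j) holds what the face shows at face coordinates (s, t) in [0, 1]."""
    src_h, src_w = len(source), len(source[0])
    baked = []
    for j in range(height):
        t = (j + 0.5) / height
        row = []
        for i in range(width):
            s = (i + 0.5) / width
            u, v = uv_of(s, t)
            # Nearest-neighbour lookup, clamped to the source image.
            px = min(src_w - 1, max(0, int(u * src_w)))
            py = min(src_h - 1, max(0, int(v * src_h)))
            row.append(source[py][px])
        baked.append(row)
    return baked


def keystone(s, t):
    """Hypothetical projective mapping standing in for a distorted face."""
    q = 1.0 + t
    return (s / q, 2.0 * t / q)


# A 4x4 stand-in "image" where each pixel value encodes its own position.
SOURCE = [[y * 4 + x for x in range(4)] for y in range(4)]
BAKED = bake_texture(SOURCE, keystone, 4, 4)

for row in BAKED:
    print(row)
```

Once the image is baked like this, the face only needs ordinary corner UVs, which any renderer can interpolate with three anchor points. The cost is one unique image per distorted face, which fits the 1100 jpgs Jeff got from the sphere.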

-

That's my fear.

Would be interesting to know what texture technique Google uses. Might try to give some of the Googleheads on this forum a prod.

http://en.wikipedia.org/wiki/Barycentric_coordinates_%28mathematics%29

Maybe some thoughts. Barycentric coordinates on a triangle are a very common application. But check out barycentric coordinates on a tetrahedron (barycentric in 3D). Maybe that's how SU does it?

-

@chris_at_twilight said:

Huh. The .3ds format only supports triangles (I think), so there is a way to represent the texture interpolation used by SU using only 3 UV coordinates (and the .3ds export knows it!)

not sure if this will trigger any thoughts with you guys (and really, i don't understand much of what you're talking about) but..

if everything is triangles in SU prior to messing with the UV mapping then it will render correctly..

-

IRender nXt renders straight in SketchUp; you'll have to ask Al how.

-

@Jeff: Maybe because everything is already triangles, the UV coordinates that SU generates are 'compatible' with the traditional triangular UV techniques employed by other applications. So what you see in SU, is what you get in the render. Of course, it could just be how UVTools does the sphere mapping that makes it more compatible?

@richcat said:

IRendernxt straight render in sketchup, you'll have to ask Al how?

You are more than welcome to ask. I won't be surprised if they use the same "pre-distorted" image technique that the SU exporters use.

-

Yup, can confirm Irender aim and renders it correctly.
