Any SU render engines that render distorted textures?

-

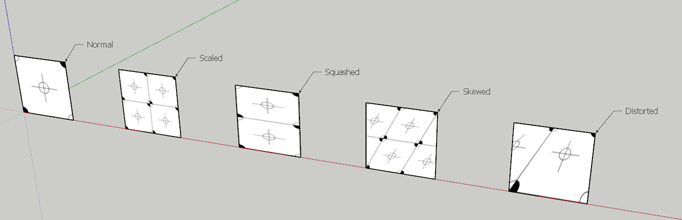

I'm wondering, are there any render engines out there that render distorted textures correctly?

-

Can you give an example SU file? I will try it for you in Podium and LUp.

-

Tried Podium. All of those render, but distorted. However, once the texture is made unique, it renders with no problem.

-

@sepo said:

Tried Podium. All of those render, but distorted. However, once the texture is made unique, it renders with no problem.

Yeah, Make Unique generates a new texture and gives the face new UV coordinates.
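As a rough illustration of what "a new texture with new UV coordinates" means, here is a hypothetical Python sketch of baking a distorted texture into a fresh image. It is not SketchUp's code. It assumes the face is the unit square in its own (s, t) coordinates, that the four pins define a projective (homography) mapping from the face to texture space, and that images are lists of pixel rows sampled nearest-neighbour with tiling. All function and variable names are made up for the example.

```python
def square_to_quad(quad):
    """Coefficients of the projective map sending the unit-square corners
    (0,0), (1,0), (1,1), (0,1) to the four (u, v) points in `quad`."""
    (x0, y0), (x1, y1), (x2, y2), (x3, y3) = quad
    dx1, dx2, dx3 = x1 - x2, x3 - x2, x0 - x1 + x2 - x3
    dy1, dy2, dy3 = y1 - y2, y3 - y2, y0 - y1 + y2 - y3
    den = dx1 * dy2 - dx2 * dy1  # zero only for a degenerate quad
    g = (dx3 * dy2 - dx2 * dy3) / den
    h = (dx1 * dy3 - dx3 * dy1) / den
    a, b, c = x1 - x0 + g * x1, x3 - x0 + h * x3, x0
    d, e, f = y1 - y0 + g * y1, y3 - y0 + h * y3, y0
    return a, b, c, d, e, f, g, h


def project(coeffs, s, t):
    """Map a face point (s, t) to texture (u, v); the divide by w is the distortion."""
    a, b, c, d, e, f, g, h = coeffs
    w = g * s + h * t + 1.0
    return (a * s + b * t + c) / w, (d * s + e * t + f) / w


def sample_nearest(image, u, v):
    """Nearest-neighbour lookup; u and v wrap around 1.0 so the texture tiles."""
    height, width = len(image), len(image[0])
    x = int((u % 1.0) * width) % width
    y = int((v % 1.0) * height) % height
    return image[y][x]


def bake(image, uv_corners, out_w, out_h):
    """Resample `image` through the distorted mapping into an out_w x out_h image.
    The face can then use plain UVs (0,0), (1,0), (1,1), (0,1) with the result."""
    coeffs = square_to_quad(uv_corners)
    baked = []
    for j in range(out_h):
        t = (j + 0.5) / out_h  # sample at pixel centres
        row = []
        for i in range(out_w):
            s = (i + 0.5) / out_w
            u, v = project(coeffs, s, t)
            row.append(sample_nearest(image, u, v))
        baked.append(row)
    return baked


checker = [[0, 1], [1, 0]]
pins = [(0, 0), (1, 0), (0.8, 0.6), (0, 1)]  # hypothetical: far-corner pin dragged inward
unique = bake(checker, pins, 8, 8)
```

Because the distortion now lives in the pixels instead of in the mapping, a renderer that only interpolates plain UVs can show it, which fits sepo's result after making the texture unique.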

What I'm trying to find out, though, is whether there are any renderers that actually handle this. More importantly, I'm interested in how they handle it.

-

hm...

@unknownuser said:

This problem is rooted in the fact that SketchUp does not provide correct UV coordinates for distorted textures. Try rendering that scene in Podium (or another rendering engine) and you will see the same problem. AFAIK, the only renderer that supports rendering of distorted textures is Kerkythea.
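One way to read "SketchUp does not provide correct UV coordinates": for a distorted texture the per-vertex texture coordinate is homogeneous, (u, v, q), and the actual texture position is (u/q, v/q). SketchUp's Ruby API returns such values from `Sketchup::UVHelper#get_front_UVQ`. A renderer that divides once per vertex and then interpolates u/q and v/q linearly gets a different answer from one that interpolates u, v and q and divides per sample. The Python sketch below compares the two inside one triangle; the (u, v, q) numbers are hypothetical, chosen so the visible corner UVs are (0, 0), (1, 0) and (0.8, 0.6).

```python
def interpolate(weights, values):
    """Barycentric blend of per-vertex tuples."""
    return tuple(sum(w * v[k] for w, v in zip(weights, values))
                 for k in range(len(values[0])))


def uv_divide_per_sample(weights, uvq):
    """Interpolate u, v and q linearly, then divide: the homogeneous approach."""
    u, v, q = interpolate(weights, uvq)
    return u / q, v / q


def uv_divide_per_vertex(weights, uvq):
    """Divide at the vertices, then interpolate plain UVs: what a UV-only renderer does."""
    plain = [(u / q, v / q) for u, v, q in uvq]
    return interpolate(weights, plain)


# Hypothetical (u, v, q) values for the three vertices of one triangle.
triangle_uvq = [(0.0, 0.0, 1.0), (2.0, 0.0, 2.0), (2.0, 1.5, 2.5)]
midpoint = (0.5, 0.0, 0.5)  # halfway along the edge from vertex 0 to vertex 2

print(uv_divide_per_sample(midpoint, triangle_uvq))  # about (0.571, 0.429)
print(uv_divide_per_vertex(midpoint, triangle_uvq))  # (0.4, 0.3)
```

At the three vertices both methods agree; only the interior differs, which fits the "anchor points correct, interpolation wrong" description further down the thread.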

-

HyperShot

Twilight

-

OK, so far: Kerkythea and HyperShot.

-

Only HyperShot; the image from Twilight is distorted. HyperShot converts everything to OBJ before rendering.

-

I saw Twilight was wrong. However, Whaat mentioned in the post I quoted that Kerkythea renders correctly.

-

@aerilius said:

This example renders really well in Kerkythea, even with projected textures.

Actually, in that image, the distorted texture is not rendered correctly.

-

Kerkythea does not render it correctly either, just like Podium and Twilight.

I will try LUp later.

-

Vue.

-

How does Vue work with SU?

-

Export to .3ds, open in Vue, hit render.

-

I see. So Vue doesn't read the SU data directly...

What does HyperShot do?

-

I have not used Podium or Twilight yet, but some days ago I read in the forum that they are based on the Kerkythea engine (???).

This example renders really well in Kerkythea, even with projected textures.

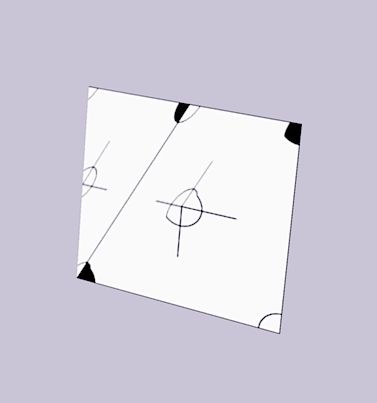

But I have often seen distorted textures look strange, and it's always annoying when everything looks fine in SU and you have no idea what to correct:

Also, some distorted textures can appear resized to about 10 px wide (replacing them with the original texture fixes it).

-

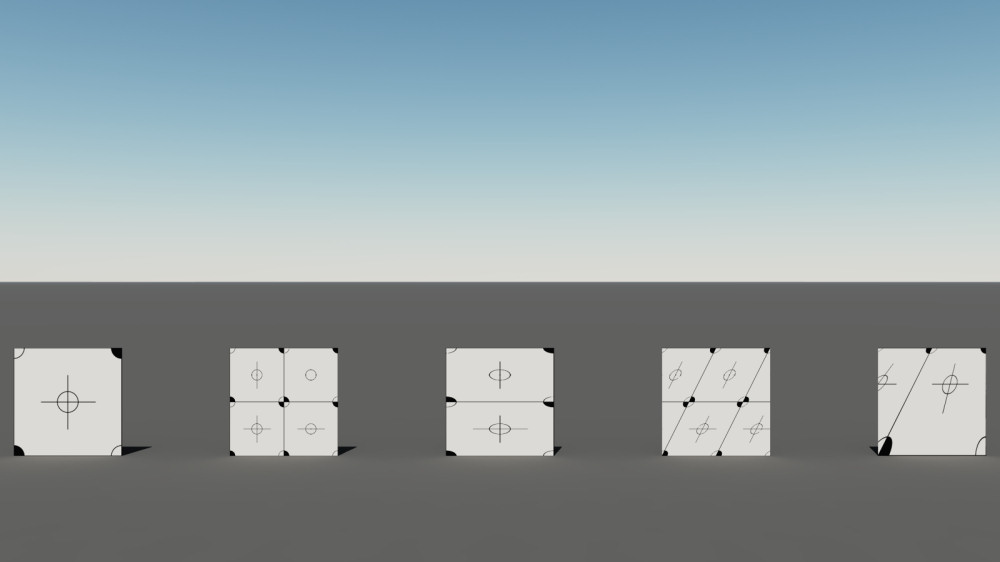

This is definitely a curious problem. Here are some thoughts on it.

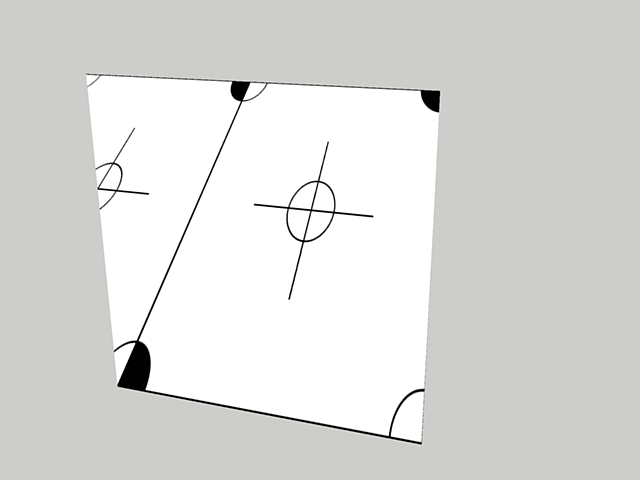

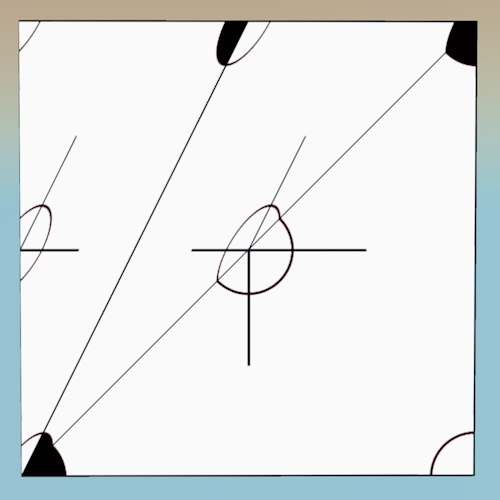

This is an image from Twilight with the edge line drawn where Twilight triangulates the quad. It's quite obvious that the distortion runs in a different direction on either side of the split. But what's important to notice is that the exact corners are not 'wrong'. As I'm sure you know, textures are mapped via UV coordinates, and those coordinates exist only at geometric vertices. They are basically anchor points for the texture, and here the anchor points are correct. What is wrong is how the texture is interpolated between the anchor points.
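What that image shows is consistent with a renderer that splits the quad along one diagonal and interpolates the corner UVs linearly inside each triangle. A minimal Python sketch, assuming a face that is the unit square and hypothetical corner UVs in which only the far-corner pin has been moved: the corners come back exactly, but the UV at the centre depends on which diagonal was used.

```python
# Face corners in order, and a hypothetical distorted set of corner UVs.
POSITIONS = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
UVS = [(0.0, 0.0), (1.0, 0.0), (0.8, 0.6), (0.0, 1.0)]

# The two ways to cut a quad into triangles: along corners 0-2 or corners 1-3.
SPLITS = {"02": ((0, 1, 2), (0, 2, 3)), "13": ((0, 1, 3), (1, 2, 3))}


def barycentric(p, a, b, c):
    """Weights (wa, wb, wc) of point p with respect to triangle abc."""
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    den = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy)
    wa = ((by - cy) * (px - cx) + (cx - bx) * (py - cy)) / den
    wb = ((cy - ay) * (px - cx) + (ax - cx) * (py - cy)) / den
    return wa, wb, 1.0 - wa - wb


def linear_uv(p, diagonal):
    """UV at face point p when each triangle interpolates its three corner UVs linearly."""
    for tri in SPLITS[diagonal]:
        w = barycentric(p, *(POSITIONS[k] for k in tri))
        if min(w) >= -1e-9:  # p lies inside (or on an edge of) this triangle
            u = sum(wk * UVS[k][0] for wk, k in zip(w, tri))
            v = sum(wk * UVS[k][1] for wk, k in zip(w, tri))
            return u, v
    raise ValueError("point is outside the face")


print(linear_uv((0.5, 0.5), "02"))  # (0.4, 0.3)
print(linear_uv((0.5, 0.5), "13"))  # (0.5, 0.5)
```

With only three anchors per triangle, a stretch that needs all four corners gets folded at the diagonal, which would explain the seam along Twilight's split.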

I think the difference is that SU uses a kind of 'quad' interpolation for textures, using four anchor points (even when the face has 3, 5 or any other number of sides), whereas Twilight, and probably many other applications, interpolates textures using only 3 anchor points because the math is fast (which makes for faster renders!). If so, this is a fairly fundamental difference that will be difficult to correct for.
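To make the "four anchors versus three" idea concrete, here is a hedged Python comparison. It assumes, as a working hypothesis rather than anything confirmed in the thread, that SU's four-point mapping is projective (a homography fixed by the four corner UVs); a bilinear blend would be another four-point candidate. For a face that is the unit square, it compares that mapping with two-triangle linear interpolation. For an undistorted (parallelogram) UV layout the two agree everywhere, which would explain why ordinary textures render fine; for a distorted layout they agree only at the corners.

```python
def square_to_quad(quad):
    """Projective map taking unit-square corners (0,0), (1,0), (1,1), (0,1) to `quad`."""
    (x0, y0), (x1, y1), (x2, y2), (x3, y3) = quad
    dx1, dx2, dx3 = x1 - x2, x3 - x2, x0 - x1 + x2 - x3
    dy1, dy2, dy3 = y1 - y2, y3 - y2, y0 - y1 + y2 - y3
    den = dx1 * dy2 - dx2 * dy1
    g = (dx3 * dy2 - dx2 * dy3) / den  # g = h = 0 for a parallelogram (affine case)
    h = (dx1 * dy3 - dx3 * dy1) / den
    a, b, c = x1 - x0 + g * x1, x3 - x0 + h * x3, x0
    d, e, f = y1 - y0 + g * y1, y3 - y0 + h * y3, y0
    return a, b, c, d, e, f, g, h


def project(coeffs, s, t):
    a, b, c, d, e, f, g, h = coeffs
    w = g * s + h * t + 1.0
    return (a * s + b * t + c) / w, (d * s + e * t + f) / w


def split_linear(uvs, s, t):
    """Linear UV interpolation with the unit square cut along corner 0 to corner 2."""
    if t <= s:  # lower-right triangle (0, 1, 2)
        weights = {0: 1 - s, 1: s - t, 2: t}
    else:       # upper-left triangle (0, 2, 3)
        weights = {0: 1 - t, 2: s, 3: t - s}
    u = sum(w * uvs[k][0] for k, w in weights.items())
    v = sum(w * uvs[k][1] for k, w in weights.items())
    return u, v


def max_gap(uvs, steps=20):
    """Largest coordinate difference between the two mappings over a grid of face points."""
    coeffs = square_to_quad(uvs)
    gap = 0.0
    for j in range(steps + 1):
        for i in range(steps + 1):
            s, t = i / steps, j / steps
            (pu, pv), (lu, lv) = project(coeffs, s, t), split_linear(uvs, s, t)
            gap = max(gap, abs(pu - lu), abs(pv - lv))
    return gap


parallelogram = [(0, 0), (2, 0), (2.5, 1), (0.5, 1)]  # plain scaled and skewed texture
distorted = [(0, 0), (1, 0), (0.8, 0.6), (0, 1)]       # hypothetical moved pin
print(max_gap(parallelogram))  # essentially 0
print(max_gap(distorted))      # clearly non-zero
```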

What surprises me the most is that SU exports, at least to .3ds, in such a way that it's interpreted correctly in Vue. Is it Vue, or is it the export process? I'd be curious to see an export to .3ds rendered in another app, like Kerkythea.

Anyway, I could be mistaken about the whole thing, but it seems a possible scenario.

-

Interesting...

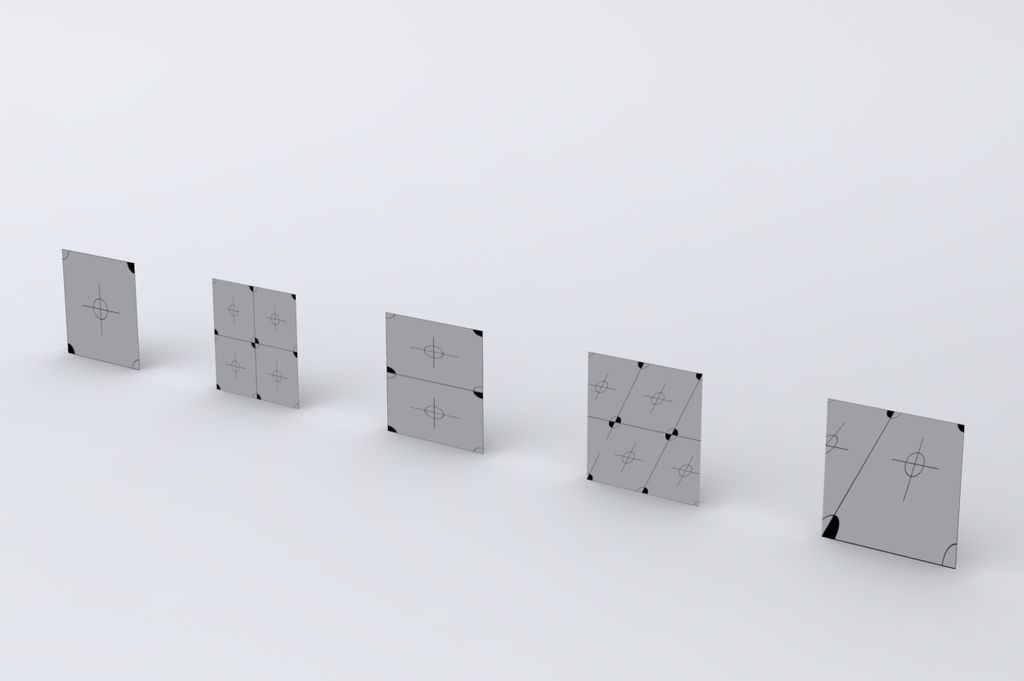

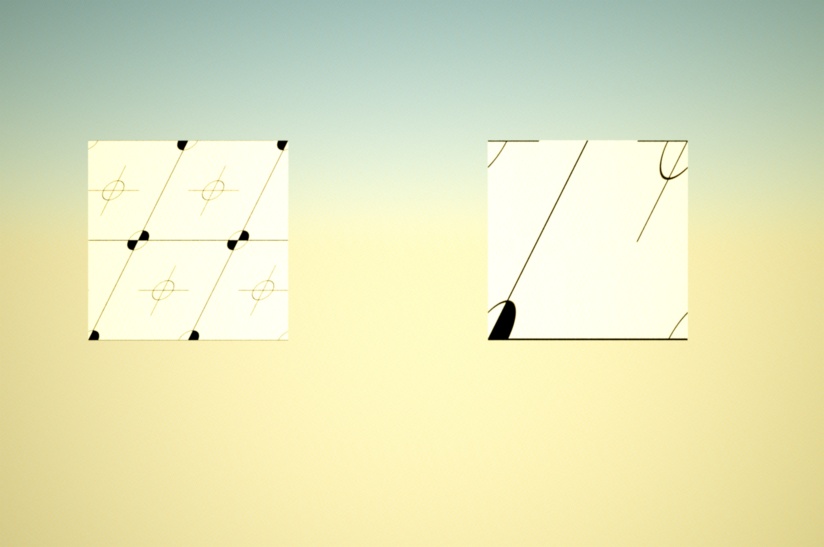

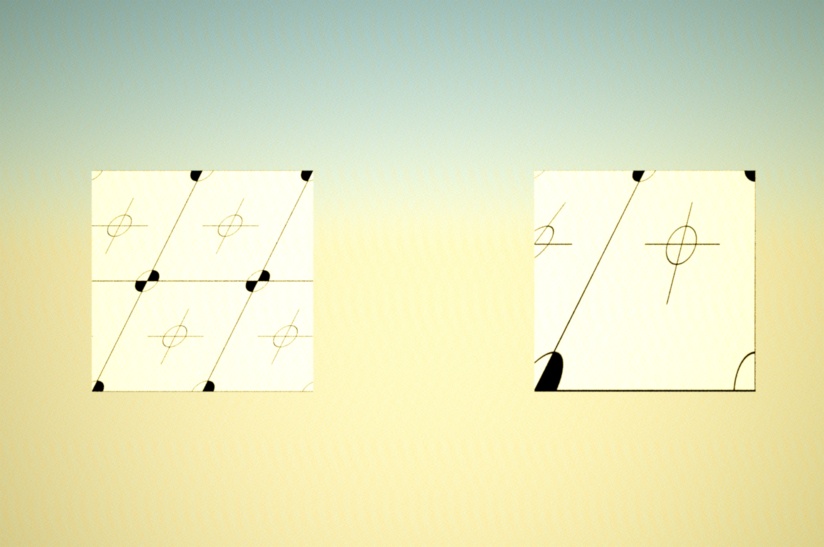

Indigo:

open skp and push render:

export as 3ds.. import 3ds and push render:

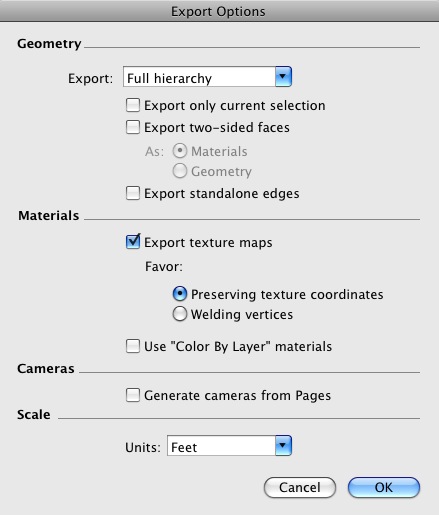

these are the settings i used for the 3ds export (i didn't try any other variations.. i set it like this first try and it worked)

-

Huh. The .3ds format only supports triangles (I think), so there is a way to represent the texture interpolation used by SU using only 3 UV coordinates per face (and the .3ds export knows it!).
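A triangle-only format can still come close to SU's look if the face is cut into many small triangles, each vertex getting the exact distorted UV; the fold at each diagonal then shrinks as the triangles get smaller. Another option is baking a unique texture, as Make Unique does. Which of these (if either) the .3ds exporter actually uses isn't settled in this thread. The Python sketch below assumes a unit-square face, a projective four-point mapping and hypothetical corner UVs, and measures the worst UV error at cell centres for an n by n grid of cells.

```python
import math


def square_to_quad(quad):
    """Projective map taking unit-square corners (0,0), (1,0), (1,1), (0,1) to `quad`."""
    (x0, y0), (x1, y1), (x2, y2), (x3, y3) = quad
    dx1, dx2, dx3 = x1 - x2, x3 - x2, x0 - x1 + x2 - x3
    dy1, dy2, dy3 = y1 - y2, y3 - y2, y0 - y1 + y2 - y3
    den = dx1 * dy2 - dx2 * dy1
    g = (dx3 * dy2 - dx2 * dy3) / den
    h = (dx1 * dy3 - dx3 * dy1) / den
    a, b, c = x1 - x0 + g * x1, x3 - x0 + h * x3, x0
    d, e, f = y1 - y0 + g * y1, y3 - y0 + h * y3, y0
    return a, b, c, d, e, f, g, h


def project(coeffs, s, t):
    a, b, c, d, e, f, g, h = coeffs
    w = g * s + h * t + 1.0
    return (a * s + b * t + c) / w, (d * s + e * t + f) / w


def max_center_error(uv_corners, n):
    """Worst UV error at cell centres when the face is split into n x n cells,
    each cut into two triangles along its lower-left to upper-right diagonal."""
    coeffs = square_to_quad(uv_corners)
    worst = 0.0
    for j in range(n):
        for i in range(n):
            s0, t0, s1, t1 = i / n, j / n, (i + 1) / n, (j + 1) / n
            # Exact UVs at the two ends of the cell's diagonal (the exported vertices).
            ua, va = project(coeffs, s0, t0)
            ub, vb = project(coeffs, s1, t1)
            # The cell centre lies on that diagonal, so per-triangle linear
            # interpolation gives the plain average of the two ends there.
            linear = ((ua + ub) / 2, (va + vb) / 2)
            exact = project(coeffs, (s0 + s1) / 2, (t0 + t1) / 2)
            worst = max(worst, math.dist(linear, exact))
    return worst


corner_uvs = [(0, 0), (1, 0), (0.8, 0.6), (0, 1)]  # hypothetical distorted pins
for n in (1, 4, 16):
    print(n, max_center_error(corner_uvs, n))
```

The error drops quickly as n grows, so a fine enough triangulation with plain UVs can look right even though each triangle only has three anchors.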
