Best GPU for Sketchup + Thea GPU Rendering?

-

@sketch3d.de said:

This is a DirectX benchmark... how should this say anything about sketchup performance?

http://www.passmark.com/products/pt.htm

http://www.passmark.com/products/pt_adv3d.htm

@sketch3d.de said:

Quadros typically do not show any advantage for SU display output speed, i.e. burning money for nothing.

I'm not sure about this... I'm waiting for a clarification for years now. Sketchup is still openGL, so theoretically there should be an advantage. I really would like to see some fundamental tests of this, but a problem is that there is no proper benchmarking method. Maybe by playing an automated sequence of a bigger scene, but then the adaptive degradation would have to be disabled for proper results...

But yes, i think the best way to speed up sketchup is still a high single core CPU performance.

@solo said:

kids think my work machine is their next gaming rig...dream on)

-

@liam887 said:

For example two GTX 680's in SLI will out perform the Titan as a whole but i dont know if Thea supports that? Pete?

Running two cards will help, but forget connecting them in SLI. It won't help and may actually cause issues, as SLI is only for "game" graphics... it does not work with CUDA. Sure, Thea can use both (Darkroom), but for IR rendering it will use one dedicated to it and the other one will be used for running the display, so you get good response when working with the UI.

-

@unknownuser said:

for IR rendering it will use one dedicated to it

How does one decide this, through the Nvidia panel or Thea studio? what about in the Thea4SU plugin?

-

@solo said:

@unknownuser said:

for IR rendering it will use one dedicated to it

How does one decide this, through the Nvidia panel or Thea studio? what about in the Thea4SU plugin?

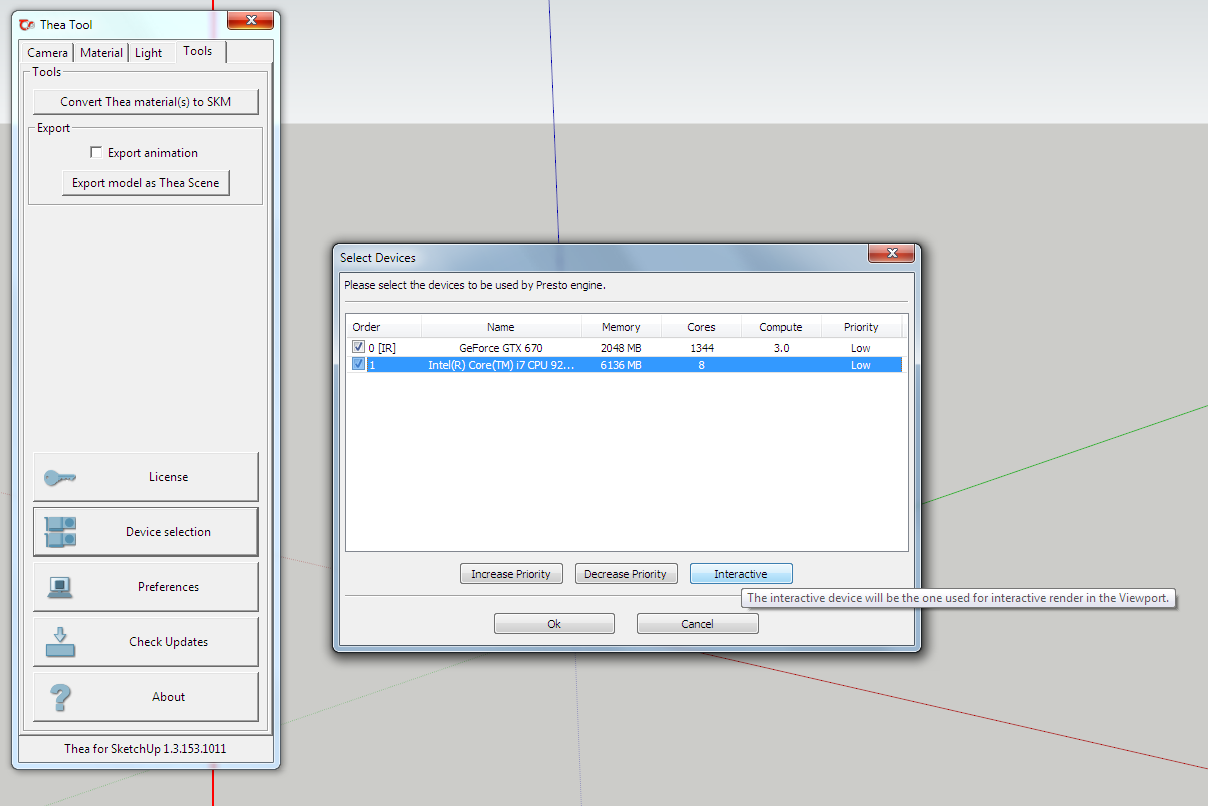

From the Nvidia control panel you can only enable/disable CUDA for a certain program or globally.

Thea and Thea4SU have a devices panel that can be used to set priority, interactive use and so on... that is the place where you select which GPU is the interactive one.

-

@numerobis said:

This is a DirectX benchmark... how should this say anything about sketchup performance?

Benchmarking the triangulation throughput of GPUs by processing faceted 3D meshes surely provides some valuable results from which to infer the display output performance of SU, even if MS Direct3D is used. The relative values between the respective models would probably be very comparable if the OGL stack were used.

@numerobis said:

I'm not sure about this... I'm waiting for a clarification for years now. Sketchup is still openGL, so theoretically there should be an advantage.

SU is currently using the capabilities of the ol' OGL version 1.5, so the advanced capabilities of the Quadro FX drivers, certified for high-end modelers such as Catia, NX or Creo, are simply not required.

@numerobis said:

But yes, i think the best way to speed up sketchup is still a high single core CPU performance.

Definitely, but for a fast system the components combined also need to be on a par; a fast graphics accelerator won't help if the main processor is the bottleneck, and vice versa.

-

I currently have a 680 GTX 4gb and it is really fast. In talking with the guys over at Eon, I will be getting a Titan next.

-

@unknownuser said:

I currently have a 680 GTX 4gb and it is really fast. In talking with the guys over at Eon, I will be getting a Titan next.

Is this because you got some inside info?

We are talking LumenRT or is Vue going GPU?

-

@solo said:

Well, I just did it, placed an order on Amazon for a Titan, expedited to arrive Friday. Base camp may be out of reach as I spent $1000 on a video card (wife cannot understand these things, kids think my work machine is their next gaming rig...dream on)

Would like to see how a GTX 660ti and a Titan work together for rendering.

@Solo: Did the card arrive yet? If so, please let us know about the rendering experience with Thea's GPU render engine

-

@unknownuser said:

@Solo: Did the card arrive yet? If so, please let us know about the rendering experience with Thea's GPU render engine

It did indeed and it's installed.

I decided to take Giannis's advice (Thea developer) and keep my existing GTX 660ti 3GB and add the Titan in next PCI express slot, I kept my dual monitors connected to GTX 660 and left it as main GPU.

Now in Lumion and Thea I set it's priority in the Geforce settings panel for the Titan as top card so now when I work (render) the Titan takes on all the work and my other card is pretty free to still surf net, work in Photoshop etc.

I did a test using a Thea bench mark scene.

Opening file "C;\ProgramData\Thea Render/Scenes/Benchmark/Presto/MeccanoPlane/MeccanoPlane.scn.thea"
Finished in 0 seconds!
Device #0; GeForce GTX TITAN
Building Environment... done. (0.305 seconds)
- Polys; 503556, Objects; 91, Parametric; 0
- Moving; 0, Displaced; 0, Clipped; 0
- Instances; 0, Portals; 0
- Nodes; 158062, Leaves; 158063, Cache Level; Normal
Building Environment... done. (0.315 seconds)
- Polys; 503556, Objects; 91, Parametric; 0
- Moving; 0, Displaced; 0, Clipped; 0
- Instances; 0, Portals; 0
- Nodes; 40851, Leaves; 122554, Cache Level; Normal
Device #1; GeForce GTX 660 Ti
Device #2; Intel(R) Core(TM) i7 CPU 940 @ 2.93GHz
Device #2; memory 4189/12278 Mb
Device #0; memory 503/6144 Mb
Device #1; memory 476/3072 Mb
Device #1; 205 s/p
Device #0; 538 s/p
Device #2; 140 s/p
Finished in 5 minutes and 2 seconds!

That's s/p 883.

When beta testing (without Titan) I got s/p 330.

As you can see Thea used the Titan the 660 and the CPU.

So as you can see the Titan made my rendering almost 3x faster.
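For anyone who wants to check the arithmetic, here is a rough sketch in Python using only the numbers from the log above. It assumes the earlier 330 s/p figure came from the same scene and the same render time, which the post does not actually state, and the device labels are just shorthand for the log entries.

```python
# Per-device samples/pixel (s/p) copied from the benchmark log above.
device_sp = {
    "GeForce GTX TITAN": 538,
    "GeForce GTX 660 Ti": 205,
    "Core i7 940 (CPU)": 140,
}

# s/p reported without the Titan during beta testing.
# Assumption: same scene and same render time as the run above.
baseline_sp = 330

total_sp = sum(device_sp.values())
speedup = total_sp / baseline_sp
titan_share = device_sp["GeForce GTX TITAN"] / total_sp

print(f"total: {total_sp} s/p")
print(f"speedup vs. baseline: {speedup:.2f}x")
print(f"Titan share of total: {titan_share:.0%}")
```

Under those assumptions the total matches the reported 883 s/p, the speedup works out to roughly 2.7x, and the Titan alone accounts for about 61% of the samples.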

-

@solo said:

@unknownuser said:

@Solo: Did the card arrive yet? If so, please let us know about the rendering experience with Thea's GPU render engine

It did indeed and it's installed.

I decided to take Giannis's advice (Thea developer) and keep my existing GTX 660ti 3GB and add the Titan in next PCI express slot, I kept my dual monitors connected to GTX 660 and left it as main GPU.

Now in Lumion and Thea I set it's priority in the Geforce settings panel for the Titan as top card so now when I work (render) the Titan takes on all the work and my other card is pretty free to still surf net, work in Photoshop etc.

I did a test using a Thea bench mark scene.

That's s/p 883.

When beta testing (without Titan) I got s/p 330.

As you can see Thea used the Titan the 660 and the CPU.

So as you can see the Titan made my rendering almost 3x faster.

Thx for the update. Appreciated!

-

Next purchase Pete:

-

@liam887 said:

Next purchase Pete:

Firstly can you imagine the price?

I was looking at a dual Xeon system recently but decided to rather stick with my very tired 1st generation i7 (never did get the funds to take advantage of the deal I arranged early this year for community) I instead gathered up all my sheckles, borrowed a few from wife and bought a Titan card instead.

My thinking...

What do I need a computer for, well it's modeling, rendering and animation, so with Thea now being GPU and as an insider i know it will only get better and support all features very soon, so I really needed GPU power as animation pretty much is all GPU, and now I only render with GPU and with my new 6GB card even heavy scenes are a breeze, so why need more CPU? In fact the Titan card extends my machine another year...at least that is what I hope.

-

Indeed. Rumour is it will be priced around 1k.

-

@liam887 said:

Next purchase Pete:

Just wondering: A second Titan would cost as much and increase render speed to a greater extent, wouldn't it?

-

@liverpudlian82 said:

@liam887 said:

Next purchase Pete:

Just wondering: A second Titan would cost as much and increase render speed to a greater extent, wouldn't it?

A second Titan would increase render speed BUT not add to the Ram as Thea only will use the ram from primary card, so IMO instead of getting a second Titan get a very fast GTX 7XX at less than half the price.

Remember the Titan is king only because it has 6GB ram and high clock speed, still not the best, the best is this: http://www.newegg.com/Product/Product.aspx?Item=N82E16814133494

IF YOU GOT $$$$$

-

@solo said:

I was looking at a dual Xeon system recently but decided to rather stick with my very tired 1st generation i7 (never did get the funds to take advantage of the deal I arranged early this year for community) I instead gathered up all my sheckles, borrowed a few from wife and bought a Titan card instead.

I'm interested to hear this as I'm in the same boat. I have an early i7 2600K and I find it lagging on lots of projects. But in this year alone I bought a license for Rhino, a license for V-Ray for Rhino, upgraded my V-Ray for SketchUp and upgraded my Modo license. I managed to get good prices on all of them (it is worth it to wait for deals) but it adds up to a lot of dough. I figure a new computer is going to set me back at least $2500. If I could get a speed boost for another year by buying a Titan, that would be more realistic considering the carnage that all that software did to my bank account.

-

@arail1 said:

If I could get a speed boost for another year by buying a Titan, that would be more realistic considering the carnage that all that software did to my bank account.

Next generation of nvidia cards (Maxwell) is announced for feb/march which should feature unified memory architecture to use the system RAM. So i would wait at least for this.

For the rest of the system... if you can wait a bit longer, Q3 2014 will bring Haswell-E with 8 cores and DDR4.

-

@matt.gordon320 said:

I can say that I've had a very good experience with a Quadro card. My machine is as follows:

BOXX 4920 Xtreme

Hex-core i7 @ 4.5GHz, 32GB ram, Quadro 4000.

Granted, I know a lot of the performance is due to the processor speed, but the graphics card has always been more than enough for anything I've ever thrown at it. I've worked on a model detailed enough to be used for construction details and in excess of a million faces, and it was faster than most 20 MB models on my work machine. I would heartily recommend the Quadros. They're pricey, but totally worth it.


-

GREETINGS MY FRIENDS:

I just want to share my BAD EXPERIENCE in SKETCHUP with a Geforce GTX 780M...

Unfortunately, I think I made a bad choice in picking a Geforce for my work. Despite the great speed and horsepower of the GTX 780M, this card "couldn't" handle my 3 million edges 3D work in SKETCHUP... I am pissed off about it..

a little bit of my story:

recently I bought a new laptop so I can replace the old one and get my ultimate Architecture JOB done... This JOB is a very complex 3D model, with many, many layers, drawn in STEEL FRAMES and ELLIPTICAL SHAPES, plus TRELLIS and so on...

I checked the GPU market and spent many, many hours researching a nice GPU card that can handle this job and, most of all, can handle 3D application software like PHOTOSHOP CS5, AUTOCAD 2010, SKETCHUP 2013, RHINO 5, ARCHICAD, ARTLANTIS 4, ILLUSTRATOR, OFFICE, and 1 or 2 more. I think I made a BIG BAD choice by picking a GAMING CARD for work. I chose it mostly because of its big specs when comparing GTX and QUADRO, like memory bandwidth, CUDA cores, DirectX, etc, plus its mature drivers that are more COMPATIBLE with numerous software packages... So I picked a GTX 780M... a really BAD BAD CHOICE here.!!!!

my laptop is a:

CLEVO P150SM (SAGER in USA)

15,5" Full HD 95% High Color Gamut

i7 4710MQ 2,5Ghz - 3,5Ghz

GTX 780M

16Gb RAM G-Skills 1600 CL9

SSD Samsung 840 Pro 256Gb

All the hardware components have a really nice configuration, and they are very, very fast at multitasking, transfers, and opening and closing apps... But I was surprised by the BAD SMOOTHNESS of the VIEWPORT WORKFLOW with this card...

As most of us know, all 3D applications require a SMOOTH REAL TIME VIEWPORT, so we can have the SHADERS, SHADOWS, TRANSPARENCY, and ALL LAYERS turned ON to get the work done... But it's getting impossible with this card... And I have to say that I bought this laptop about 5 days ago.

I have to say, I am VERY DISAPPOINTED with the BAD PERFORMANCE of the GTX 780M in that respect. It simply cannot handle the 3D ROTATE, or having the SHADOWS turned on when I need to ROTATE... I am really pissed off and very irritated about this.

I contacted Mr Bob from the RHINO McNeel FORUM to ask him what GPU and hardware he recommends for using RHINO 5 with a smooth viewport workflow... And he said that my configuration is enough... He has a GT750M and it works like a charm...

...So I started to think about WHAT IT COULD BE that keeps my GTX 780M from working properly...?????

I have to say that I already tested the card with 2 benchmarks... SPECviewperf 12 and SPECwpc... I have to admit that the results from SPECviewperf 12 were a disaster... Some parts of the benchmark, like CATIA, ENERGY, MEDICAL are really fine. But SIEMENS and some others have very low values like 2,5 min - 4,3 max (very low results if we compare this GTX 780M with a K1000M or K2000M)..

. Can the QUADRO K3100M handle my job?

. Is a Quadro K3100M card a "must have" for those who just work, and don't want to play games at all ???

. Are QUADRO cards more reliable for any 3D Software that uses OpenGL?

. Can I get a smooth 3D rotate in VIEWPORTS, for example in SKETCHUP, or RHINO? (two apps that use OpenGL)??

. Should I change the GTX 780M for a QUADRO K3100M (the maximum Quadro GPU that my laptop supports)???

. Does the QUADRO K3100M still do the job in other apps? like AUTOCAD 2010(12), PHOTOSHOP, ARTLANTIS, V-RAY, or others?...Or if I choose that QUADRO card, do I only get 100% performance if I work with certified drivers?

because QUADRO cards don't seem to get many DRIVER updates.. It seems they only work in some 3D apps.. Like solidworks, maya etc...

....I don't need speed... I just need to complete my job, with fast and smooth rotate in a real-time viewport...

So if someone could tell me what GPU CARD I should change to, so I can get a nice workflow in 3D apps.. or especially in the software that I mentioned just above.

-

Let me check something with you.

Download some software that measures GPU usage, and look at how much the GPU is being used when orbiting the model lags. It is probably using only a few % and your processor is doing all the work.

Anyway, 3 million edges is a LOT.
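If you'd rather not install anything extra, the Nvidia driver usually ships with the `nvidia-smi` command line tool, which can report GPU load. Below is a minimal Python sketch that polls it once a second while you orbit the model. The helper names (`parse_gpu_csv`, `sample_gpus`, `watch`) are made up for this example, and it assumes `nvidia-smi` is on your PATH. Some mobile GeForce cards report utilization as "[Not Supported]", which the parser turns into `None`.

```python
import subprocess
import time

# nvidia-smi query: one CSV row per GPU, no header, no units.
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,name,utilization.gpu,memory.used",
    "--format=csv,noheader,nounits",
]


def _to_int(field):
    """Return an int, or None for values like '[N/A]' or '[Not Supported]'."""
    field = field.strip()
    return int(field) if field.isdigit() else None


def parse_gpu_csv(text):
    """Parse nvidia-smi CSV rows into a list of dicts (hypothetical helper)."""
    rows = []
    for line in text.strip().splitlines():
        parts = [p.strip() for p in line.split(",")]
        if len(parts) != 4:
            continue  # skip anything that is not a 4-column row
        rows.append({
            "index": _to_int(parts[0]),
            "name": parts[1],
            "util_pct": _to_int(parts[2]),
            "mem_mib": _to_int(parts[3]),
        })
    return rows


def sample_gpus():
    """Run nvidia-smi once and return the parsed rows."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_csv(result.stdout)


def watch(seconds=10, interval=1.0):
    """Print GPU load every `interval` seconds for roughly `seconds` seconds."""
    for _ in range(int(seconds / interval)):
        for gpu in sample_gpus():
            print(f"GPU {gpu['index']} ({gpu['name']}): "
                  f"{gpu['util_pct']}% busy, {gpu['mem_mib']} MiB used")
        time.sleep(interval)
```

Call `watch(30)` from a Python prompt, then orbit the model in SketchUp. If the load stays near zero while the viewport stutters, the GPU is probably not the bottleneck, or not the GPU that is actually being used.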

-

Architex, as far as I know the GTX 780M should be just fine with SU. But there might be some other issues... nvidia uses Optimus technology that switches between the discrete GPU and the integrated GPU. Because of that, it is possible that the GTX 780M is not actually being used with SU.

Get Nvidia tools from

https://www.dell.com/support/troubleshooting/us/en/04/KCS/KcsArticles/ArticleView?c=us&l=en&s=bsd&docid=439417

These should give some idea of which GPU, integrated or discrete, is in use and with what program. NOTE: They seem to work even if you don't have a DELL system. But it would not hurt to check whether your laptop vendor has its own nvidia Optimus test tool set.

Alternatively there may be some GPU malware lurking in your 780M, mining bitcoins and using GPU cycles. These are relatively common.
