Best GPU for Sketchup + Thea GPU Rendering?

-

Hi there,

my high-poly SU models (50.000.000+ edges) won't orbit as smoothly as I'd like them to.

So far I'm using 16GB RAM, an Intel Core i7-3770 CPU, and an Nvidia GeForce GTX570 GPU.

I wonder whether there is a GPU that would significantly improve both my machine's performance in SU and the rendering times in Thea Render.

Thea Render recently posted this on their forums: http://www.thearender.com/forum/viewtopic.php?f=17&t=13779

This seems to suggest that a GeForce GTX Titan would do the trick for rendering, but I'd only consider getting one if the performance in SU also improved massively (rendering time is not that crucial to me).

I'd be happy to hear about your experience with GeForce GTX Titan vs. Quadro cards for SU specifically.

Thanks!

Markus

-

I can say that I've had a very good experience with a Quadro card. My machine is as follows:

BOXX 4920 Xtreme

Hex-core i7 @ 4.5GHz, 32GB RAM, Quadro 4000.

Granted, I know a lot of the performance is due to the processor speed, but the graphics card has always been more than enough for anything I've ever thrown at it. I've worked on a model detailed enough to be used for construction details, in excess of a million faces, and it was faster than most 20 MB models on my work machine. I would heartily recommend the Quadros. They're pricey, but totally worth it.

-

GPU speed shouldn't be overestimated for SU; the (single-core) performance of the CPU is the determining factor.

Quadros typically do not show any advantage for SU display output speed, i.e. burning money for nothing.

Norbert

-

You won't really notice much difference in SU depending on your video card, as the performance comes from the CPU. While the Titan is great for gaming, you can get other cards that do nearly as much damage when rendering for substantially less.

Also, did you mean 50,000 polygons or 50,000,000?!

-

@liam887 said:

You won't really notice much difference in SU depending on your video card, as the performance comes from the CPU. While the Titan is great for gaming, you can get other cards that do nearly as much damage when rendering for substantially less.

Also, did you mean 50,000 polygons or 50,000,000?!

Gotta disagree, nothing beats the Titan in rendering; the 6GB VRAM is the clincher.

-

@unknownuser said:

You have too much money, Pete.

For example, two GTX 680s in SLI will outperform the Titan overall, but I don't know if Thea supports that. Pete?

I wish. I tried selling my kids to get a Titan card, but no takers. I only have a 660 Ti 3GB.

The thing is, you can have as many cards as you want for rendering, and they will combine to speed up your renders, but the render will only use the VRAM of one card, so you are limited there. The Titan is a damn fast card and also has 6GB of VRAM, so for the money it's the best option.
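To put the VRAM point as a quick check: this is only a minimal sketch, assuming (as described in this thread, not verified against Thea's documentation) that every card needs its own full copy of the scene, so memory does not add up across cards. The function name and the scene sizes are made up for illustration.

```python
# Hypothetical illustration: which cards could take part in a GPU render,
# assuming every card must hold the whole scene in its own VRAM.
def usable_cards(scene_gb, cards):
    """Return the names of the cards whose VRAM can hold the scene."""
    return [name for name, vram_gb in cards.items() if vram_gb >= scene_gb]


# VRAM in GB, as quoted in this thread.
cards = {"GTX 660 Ti": 3, "GTX Titan": 6}

print(usable_cards(2.5, cards))  # small scene: both cards can hold it
print(usable_cards(4.0, cards))  # larger scene: only the 6GB card can hold it
```

Under that assumption, adding a second card adds speed but never raises the scene size you can render.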

-

@solo said:

@liam887 said:

You won't really notice much difference in SU depending on your video card, as the performance comes from the CPU. While the Titan is great for gaming, you can get other cards that do nearly as much damage when rendering for substantially less.

Also, did you mean 50,000 polygons or 50,000,000?!

Gotta disagree, nothing beats the Titan in rendering; the 6GB VRAM is the clincher.

You have too much money, Pete.

For example, two GTX 680s in SLI will outperform the Titan overall, but I don't know if Thea supports that. Pete?

EDIT

Having said that, I just googled it and you can buy one for only £815 (probably cheaper if you look harder). I thought they were over £1k.

-

@solo said:

@unknownuser said:

You have too much money, Pete.

For example, two GTX 680s in SLI will outperform the Titan overall, but I don't know if Thea supports that. Pete?

I wish. I tried selling my kids to get a Titan card, but no takers. I only have a 660 Ti 3GB.

The thing is, you can have as many cards as you want for rendering, and they will combine to speed up your renders, but the render will only use the VRAM of one card, so you are limited there. The Titan is a damn fast card and also has 6GB of VRAM, so for the money it's the best option.

Ahh, I see, yes. I'm in the same boat: I would like one, but it's not going to happen any time soon...

-

@liverpudlian82 said:

Thanks for your opinions.

So I understand that the CPU is the bottleneck in SU. Problem is: it does not get much faster than what I already have

@liam: 50.000.000

@solo: Love your avatar

One thing I have done in SU to get around this problem is to build the model in multiple files and then assemble it in the render engine, or to compile the full model only when I want to output it for a render. I have special tags in my geometry so the bits piece together like Lego. I can then delete that tag geometry in Thea, if that makes sense.

And if you can't be bothered to do that, you can use the Layers panel. I have managed some fairly big models, in the multi-million polys, on my laptop.

-

Well, I just did it, placed an order on Amazon for a Titan, expedited to arrive Friday. Base camp may be out of reach as I spent $1000 on a video card (wife cannot understand these things, kids think my work machine is their next gaming rig...dream on)

Would like to see how a GTX 660ti and a Titan work together for rendering.

-

@liverpudlian82 said:

So I understand that the CPU is the bottleneck in SU. Problem is: it does not get much faster than what I already have...

The fastest variant, the i7-3770K @ 3.50GHz, reaches 9,577 Passmark points, while the i7-4930K @ 3.40GHz of the recent 'Ivy Bridge-E' series reaches 13,624 Passmark points, i.e. ~42% better number crunching.

Combined with a nice SSD (e.g. Samsung 840 Pro), this may give a neat boost for SU.

[update]

Oops, the 4930K is a six-core CPU and thus won't help much for SU unless overclocked... but it may be helpful for rendering.
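For reference, the arithmetic behind these numbers as a rough sketch. The per-core figure simply divides the overall Passmark score by the core count, which is a crude assumption of mine (it ignores clock speed, Hyper-Threading and scaling losses), but it shows why the six-core lead mostly disappears for a program that leans on a single core, like SU.

```python
# Overall (multi-threaded) Passmark CPU scores as quoted in this post.
scores = {"i7-3770K": 9577, "i7-4930K": 13624}
cores = {"i7-3770K": 4, "i7-4930K": 6}

# Overall gain of the 4930K over the 3770K, in percent.
gain = (scores["i7-4930K"] / scores["i7-3770K"] - 1) * 100
print(f"overall gain: {gain:.0f}%")

# Crude per-core estimate (assumption): overall score divided by core count.
per_core = {cpu: scores[cpu] / cores[cpu] for cpu in scores}
for cpu, value in per_core.items():
    print(f"{cpu}: about {value:.0f} points per core")
```

By this rough measure the quad-core is at least as fast per core, so the extra 42% only pays off in software that uses all cores, such as a renderer.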

[/update]

-

@sketch3d.de said:

This is a DirectX benchmark... how should this say anything about SketchUp performance?

http://www.passmark.com/products/pt.htm

http://www.passmark.com/products/pt_adv3d.htm

@sketch3d.de said:

Quadros typically do not show any advantage for SU display output speed, i.e. burning money for nothing.

I'm not sure about this... I've been waiting for clarification for years now. SketchUp is still OpenGL, so theoretically there should be an advantage. I really would like to see some fundamental tests of this, but one problem is that there is no proper benchmarking method. Maybe by playing an automated sequence of a bigger scene, but then the adaptive degradation would have to be disabled for proper results...

But yes, I think the best way to speed up SketchUp is still high single-core CPU performance.

@solo said:

kids think my work machine is their next gaming rig...dream on)

-

@liam887 said:

For example, two GTX 680s in SLI will outperform the Titan overall, but I don't know if Thea supports that. Pete?

Running two cards will help, but forget connecting them via SLI; it won't help and may actually cause issues, as SLI is only for "game" graphics... it does not work with CUDA. Sure, Thea can use both (Darkroom), but for IR rendering it will use one card dedicated to it, and the other one will be used for running the display, so you get good response when working with the UI.

-

@unknownuser said:

for IR rendering it will use one dedicated to it

How does one decide this, through the Nvidia panel or Thea Studio? What about in the Thea4SU plugin?

-

@solo said:

@unknownuser said:

for IR rendering it will use one dedicated to it

How does one decide this, through the Nvidia panel or Thea Studio? What about in the Thea4SU plugin?

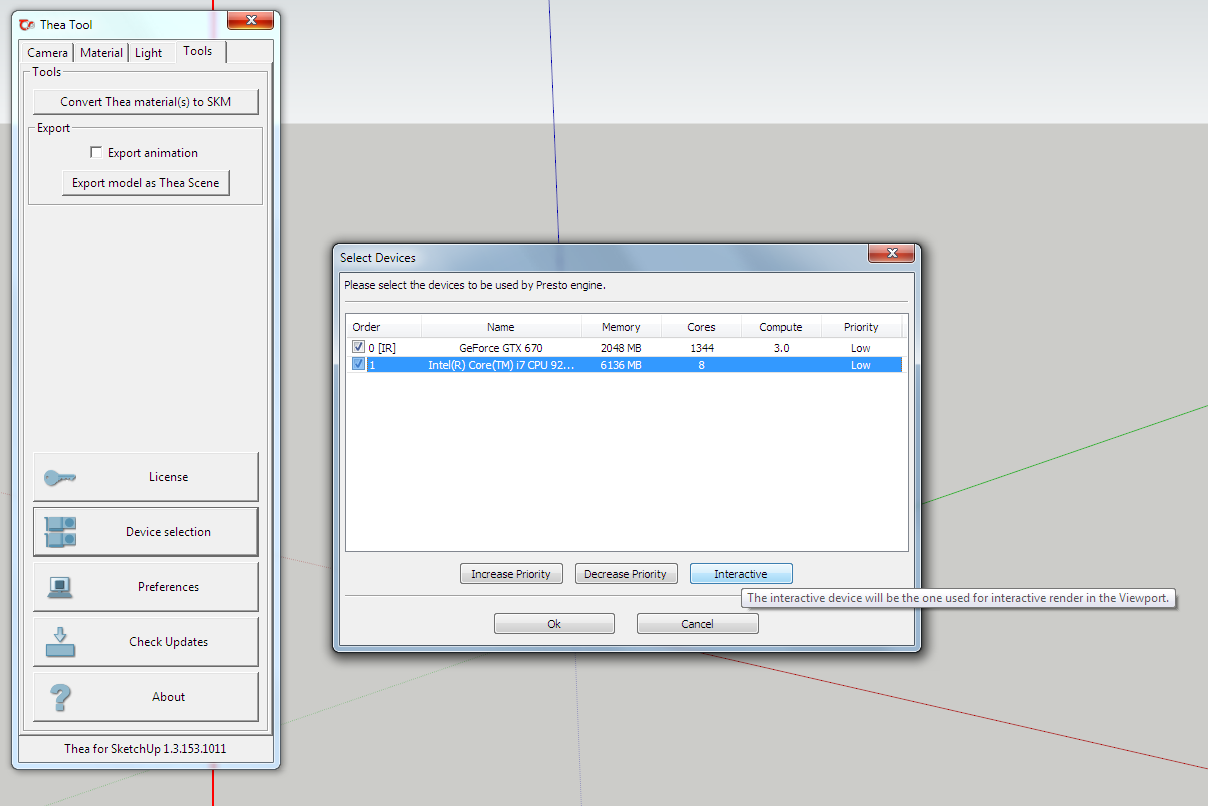

From the Nvidia control panel you can only enable/disable CUDA, either for a certain program or globally.

Thea and Thea4SU have a devices panel that can be used to set priority, interactive use and so on... that is the place where you select which GPU is the interactive one.

-

@numerobis said:

This is a DirectX benchmark... how should this say anything about SketchUp performance?

Benchmarking the triangulation throughput of GPUs by processing faceted 3D meshes surely provides some valuable results from which to derive the display output performance of SU, even if MS Direct3D is used. The ratios between the respective models' values would probably be very comparable if the OGL stack were used.

@numerobis said:

I'm not sure about this... I've been waiting for clarification for years now. SketchUp is still OpenGL, so theoretically there should be an advantage.

SU currently uses only the capabilities of the ol' OGL version 1.5; therefore the advanced capabilities of the Quadro FX drivers, certified for high-end modelers such as Catia, NX or Creo, are simply not required.

@numerobis said:

But yes, I think the best way to speed up SketchUp is still high single-core CPU performance.

Definitely, but for a fast system the combined components also need to be on a par: a fast graphics accelerator won't help if the main processor is the bottleneck, and vice versa.

-

I currently have a 680 GTX 4gb and it is really fast. In talking with the guys over at Eon, I will be getting a Titan next.

-

@unknownuser said:

I currently have a 680 GTX 4gb and it is really fast. In talking with the guys over at Eon, I will be getting a Titan next.

Is this because you got some inside info?

Are we talking LumenRT, or is Vue going GPU?

-

@solo said:

Well, I just did it, placed an order on Amazon for a Titan, expedited to arrive Friday. Base camp may be out of reach as I spent $1000 on a video card (wife cannot understand these things, kids think my work machine is their next gaming rig...dream on)

Would like to see how a GTX 660ti and a Titan work together for rendering.

@Solo: Did the card arrive yet? If so, please let us know about the rendering experience with Thea's GPU render engine.
