Are there any SU render engines that render distorted textures?

-

Aaah!!! That's why it asks for a TW (TextureWriter). Duh!

That fills a big hole in the API docs. Thanks, Tomaz!

-

Here's a simple test I did. It doesn't take nested groups etc. into account, but it's a rough Ruby version of Make Unique.

-

Just a quick word on what you're seeing.

The texture coordinates in SU are mostly 2D, but there is support for projected textures, in which the third element of the texture coordinate is a projection term; i.e., by dividing through by the third element you get a 2D texture coordinate.
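A minimal Python sketch of that divide, assuming the coordinate arrives as a (u, v, q) triple with q as the third element (the value later in this thread is called Q). The function name is hypothetical.

```python
def uvq_to_uv(u, v, q):
    """Turn a projective (u, v, q) texture coordinate into a plain 2D (u, v) one.

    Assumes q is non-zero, which it should be for any point on the face.
    """
    if q == 0:
        raise ValueError("q must be non-zero for a projective texture coordinate")
    return (u / q, v / q)


# An unprojected coordinate has q == 1 and comes through unchanged;
# scaling all three elements by the same factor gives the same 2D result.
print(uvq_to_uv(0.5, 0.25, 1.0))
print(uvq_to_uv(1.0, 0.5, 2.0))
```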

You can get an OK approximation by doing the projection at the vertices and then interpolating across the polygon in 2D (this is what LightUp does), or you can interpolate the 3-element texture coordinate and do the divide at each pixel, which is slower but you'll get the right answer. Not sure there are many renderers that do that.
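To make the difference between the two approaches concrete, here is a hypothetical one-dimensional Python sketch: each vertex carries a (u, q) pair, and t is the interpolation parameter between two vertices. It is not LightUp's or SketchUp's code, only the arithmetic of "divide at the vertices" versus "divide per sample".

```python
def lerp(a, b, t):
    """Linear interpolation between a and b for t in [0, 1]."""
    return a + (b - a) * t


def divide_at_vertices(v0, v1, t):
    """Cheap approach: divide u by q at each vertex, then interpolate the 2D result."""
    u0 = v0[0] / v0[1]
    u1 = v1[0] / v1[1]
    return lerp(u0, u1, t)


def divide_per_sample(v0, v1, t):
    """Correct approach: interpolate u and q separately, then divide at this sample."""
    u = lerp(v0[0], v1[0], t)
    q = lerp(v0[1], v1[1], t)
    return u / q


# Each vertex is a hypothetical (u, q) pair.
a, b = (0.0, 1.0), (2.0, 2.0)
print(divide_at_vertices(a, b, 0.5))
print(divide_per_sample(a, b, 0.5))
```

With these vertices both methods agree at the ends (u = 0 and u = 1), but halfway along, the vertex-divide version gives 0.5 while the per-sample divide gives 2/3. That gap is the visible distortion error.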

Adam

-

Oh, and the corollary of this is that if you subdivide the faces into smaller pieces, you'll get a progressively more accurate rendering. So try cutting the distorted quad into 16 quads.
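A rough Python sketch of why subdividing helps, under the same one-dimensional assumptions: the edge is cut into equal pieces, the divide is done only at the new vertices, the result is interpolated linearly inside each piece, and that is compared against the exact per-sample divide. The names and the sample count are hypothetical.

```python
def exact_u(v0, v1, t):
    """Per-sample divide: interpolate (u, q), then divide."""
    u = v0[0] + (v1[0] - v0[0]) * t
    q = v0[1] + (v1[1] - v0[1]) * t
    return u / q


def max_error(v0, v1, pieces, samples=1000):
    """Largest gap between piecewise 2D interpolation and the exact result.

    The edge is split into `pieces` equal parts; the divide happens only at
    the split points, and values in between are linearly interpolated.
    """
    worst = 0.0
    for i in range(samples + 1):
        t = i / samples
        k = min(int(t * pieces), pieces - 1)  # index of the piece containing t
        t0, t1 = k / pieces, (k + 1) / pieces
        a, b = exact_u(v0, v1, t0), exact_u(v0, v1, t1)
        approx = a + (b - a) * (t - t0) / (t1 - t0)
        worst = max(worst, abs(approx - exact_u(v0, v1, t)))
    return worst


for n in (1, 2, 4):
    print(n, max_error((0.0, 1.0), (2.0, 2.0), n))
```

The maximum error shrinks as the number of pieces grows, which is the "progressively more accurate" effect; cutting a quad into 16 quads corresponds to 4 pieces along each edge.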

Adam

-

@adamb said:

You can get an OK approximation by doing the projection at the vertices and then interpolating across the polygon in 2D (this is what LightUp does), or you can interpolate the 3-element texture coordinate and do the divide at each pixel, which is slower but you'll get the right answer. Not sure there are many renderers that do that.

SU uses OpenGL. Does that mean SU, for its own 'rendering', does the same interpolation and sends the 'unique' texture to OpenGL?

-

@unknownuser said:

SU uses OpenGL. Does that mean SU, for its own 'rendering', does the same interpolation and sends the 'unique' texture to OpenGL?

I suspect so: it is a lot faster to create the distorted image as a bitmap and send it to OpenGL than to start subdividing faces.

Also, that would explain why SketchUp makes the pre-distorted image available to 3DS output and to us.

-

@unknownuser said:

@adamb said:

You can get an OK approximation by doing the projection at the vertices and then interpolating across the polygon in 2D (this is what LightUp does), or you can interpolate the 3-element texture coordinate and do the divide at each pixel, which is slower but you'll get the right answer. Not sure there are many renderers that do that.

SU uses OpenGL. Does that mean SU, for its own 'rendering', does the same interpolation and sends the 'unique' texture to OpenGL?

No, it means SU sends 3-component texture coordinates to OpenGL and allows OpenGL to interpolate and divide at each pixel.

But as Al says, given that you can 'bake' your projection into a unique texture once you're happy with it, it's not a big deal.

Adam

-

@adamb said:

No, it means SU sends 3-component texture coordinates to OpenGL and allows OpenGL to interpolate and divide at each pixel.

As Adam says, OpenGL provides texture-coordinate calls not just for 2 coordinates (UV) but for 3 and even 4: glTexCoord3f, glTexCoord4f.

-

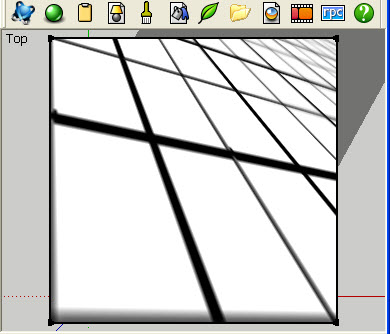

I'm curious: for those render engines that render distorted textures, what's the result if you add a bump map like the one attached? The bump is exaggerated so it's clearly visible.

-

@thomthom said:

I'm curious: for those render engines that render distorted textures, what's the result if you add a bump map like the one attached? The bump is exaggerated so it's clearly visible.

"True" bump maps, where you assign the bump as a second texture, do not work, because for the main texture SketchUp gives you a pre-distorted texture, which is then mapped with UVs running from (0,0) to (1,1). But SketchUp does not give you any information on how to distort the second texture.

Here the carpet texture is distorted properly, but the bump map is not distorted.

"Auto-bump", where a single texture is used for both the color and the bump map, does work, because the bump map is distorted as well.

Here the bump map effect matches the distortion of the image in SketchUp.

(The distorted SketchUp image was used as the bump map.)

Distorted SketchUp Material

This could be solved for bump maps that use a separate texture, but you would have to let SketchUp distort the main texture and then let it distort the bump-map texture as well.

-

@al hart said:

"True" bump maps, where you assign the bump as a second texture, do not work, because for the main texture SketchUp gives you a pre-distorted texture, which is then mapped with UVs running from (0,0) to (1,1). But SketchUp does not give you any information on how to distort the second texture.

That's what I suspected. Which is a problem.

-

@thomthom said:

That's what I suspected. Which is a problem.

If you are an IRender nXt user, we can probably write a routine to distort the bump maps for you.

If not, you can still do it in Ruby. Write a Ruby script that uses TextureWriter: replace the diffuse SketchUp texture with the bump-map texture, use TextureWriter to save the distorted bump map, then restore the original texture. Ditto for any specular texture.

-

But surely that will lead to crazy parsing times if you load and map a texture for each material layer of each distorted face in the model?

-

@thomthom said:

But surely that will lead to crazy parsing times if you load and map a texture for each material layer of each distorted face in the model?

Probably not so bad.

But if it does start to take too long, you could place the "Bump" versions of the faces on a separate layer that is turned off, and extract those faces when needed. This would save the time needed to load the material into SketchUp.

You can also use "Make Unique" on a distorted face. Then SketchUp replaces it with the distorted image mapped 1 to 1. If you did this with both the material and the bump map, you would have the distorted versions of both. We have considered placing the bump map on the reverse side of the face, so it would be easy to find.

-

Here is my guess what the Q value could be used for. Unfortunately I don't have time to test my hypothesis.

-

@mhd said:

Here is my guess what the Q value could be used for. Unfortunately I don't have time to test my hypothesis.

I don't really understand what I'm looking at here.

-

What I'm trying to say is this:

If you add Q*(Face Normal) to each of your points you get a new face.

If you align your texture to this new face but assign it to the old face, it should be distorted.

PS: Sorry for my bad English... I'm not a native speaker.
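A small Python sketch of the first step of this (untested) hypothesis: offsetting each vertex along the face normal by its Q value. It assumes the normal is unit length and that you already have one Q per vertex; the function name and the sample values are hypothetical, and whether aligning the texture to the resulting face reproduces SketchUp's distortion is exactly what remains to be tested.

```python
def offset_points(points, qs, normal):
    """Move each vertex p to p + Q * normal (hypothesis from the post, untested).

    `points` are (x, y, z) tuples, `qs` holds one Q value per point,
    and `normal` is assumed to be the face's unit normal.
    """
    return [
        tuple(p_i + q * n_i for p_i, n_i in zip(p, normal))
        for p, q in zip(points, qs)
    ]


# Hypothetical unit square in the XY plane with made-up Q values.
square = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
qs = [1.0, 1.0, 2.0, 2.0]
normal = (0.0, 0.0, 1.0)
print(offset_points(square, qs, normal))
```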
