Is this guy serious?!

-

@steved said:

@ccbiggs said:

Steve, it does not matter if it was a gun or knife. Supposedly all those countries have 100% gun bans or darn close to it.

It just shows, if the info is any good, that banning/gun control for the most part does not do much good.

No matter how functional or nonfunctional the government.

Once again I am not saying anything is fact or truth. Just something I thought was interesting info when I saw it.

It does matter if it is a gun or a knife! If the school massacre had been done by a knife-wielding murderer, the deaths would have been far fewer and more manageable by the staff, but it was carried out with an assault weapon... What do you not get about that?

What exactly do you not get about that?

'Bump' gotcha Jeff

-

-

@ccbiggs said:

This information is pretty much accurate. However, it is misleading. The UK doesn't have guns and they only had 1.2 murders per 100,000. Australia doesn't have guns and they had 1.0, China probably doesn't have guns and they had 1.0, Canada had 1.6 and Monaco had 0, though Monaco probably doesn't even have 100,000 people. None of these countries are on the list, at least I didn't see them. At any rate, the list was designed to be misleading.

From the World Health Organization:

The latest Murder Statistics for the world:

Murders per 100,000 citizens

Honduras 91.6

El Salvador 69.2

Côte d'Ivoire 56.9

Jamaica 52.2

Venezuela 45.1

Belize 41.4

US Virgin Islands 39.2

Guatemala 38.5

Saint Kitts and Nevis 38.2

Zambia 38.0

Uganda 36.3

Malawi 36.0

Lesotho 35.2

Trinidad and Tobago 35.2

Colombia 33.4

South Africa 31.8

Congo 30.8

Central African Republic 29.3

Bahamas 27.4

Puerto Rico 26.2

Saint Lucia 25.2

Dominican Republic 25.0

Tanzania 24.5

Sudan 24.2

Saint Vincent and the Grenadines 22.9

Ethiopia 22.5

Guinea 22.5

Dominica 22.1

Burundi 21.7

Democratic Republic of the Congo 21.7

Panama 21.6

Brazil 21.0

Equatorial Guinea 20.7

Guinea-Bissau 20.2

Kenya 20.1

Kyrgyzstan 20.1

Cameroon 19.7

Montserrat 19.7

Greenland 19.2

Angola 19.0

Guyana 18.6

Burkina Faso 18.0

Eritrea 17.8

Namibia 17.2

Rwanda 17.1

Mexico 16.9

Chad 15.8

Ghana 15.7

Ecuador 15.2

North Korea 15.2

Benin 15.1

Sierra Leone 14.9

Mauritania 14.7

Botswana 14.5

Zimbabwe 14.3

Gabon 13.8

Nicaragua 13.6

French Guiana 13.3

Papua New Guinea 13.0

Swaziland 12.9

Bermuda 12.3

Comoros 12.2

Nigeria 12.2

Cape Verde 11.6

Grenada 11.5

Paraguay 11.5

Barbados 11.3

Togo 10.9

Gambia 10.8

Peru 10.8

Myanmar 10.2

Russia 10.2

Liberia 10.1

Costa Rica 10.0

Nauru 9.8

Bolivia 8.9

Mozambique 8.8

Kazakhstan 8.8

Senegal 8.7

Turks and Caicos Islands 8.7

Mongolia 8.7

British Virgin Islands 8.6

Cayman Islands 8.4

Seychelles 8.3

Madagascar 8.1

Indonesia 8.1

Mali 8.0

Pakistan 7.8

Moldova 7.5

Kiribati 7.3

Guadeloupe 7.0

Haiti 6.9

Timor-Leste 6.9

Anguilla 6.8

Antigua and Barbuda 6.8

Lithuania 6.6

Uruguay 5.9

Philippines 5.4

Ukraine 5.2

Estonia 5.2

Cuba 5.0

Belarus 4.9

Thailand 4.8

Suriname 4.6

Laos 4.6

Georgia 4.3

Martinique 4.2

And

United States 4.2

NOTE: ALL of the countries above America have 100% gun bans or are close to it.

Most of those countries above are very poor; as far as developed nations go, you can't really compare the US to Haiti, Sudan and Congo! And most of those countries do not have gun bans, not even close. Half of them probably don't even have a functioning government, and at least five are dictatorships. Last year I went to Estonia and a few guys in my group went on a tour organised by the hotel to shoot shotguns, pistols and an AK47 (automatic).

http://www.topguntours.co.uk/shooting-tallinn..html

This is the blurb: "Shoot 9 police type guns including some specially kitted out with all the latest tactical gizmos like laser sights, built in flashlights, and high capacity magazines. You will get to shoot the American M16, the Russian AK47, the Glock police pistol, a genuine "Dirty Harry" .44 Magnum, and the awesomely powerful Pump-action shotgun. Highly recommended!"

I understand the case for rifles (low caliber with a small box magazine, or bolt action) for recreation and hunting, but fully automatic and semi-automatic military-style rifles need to go. There is no logical excuse to own one in any sense.

Getting guns off the streets in the US would be very difficult, but the first logical step is to start banning high-powered weapons. Then massive investment would be needed for some sort of collection enforcement; you would probably also have to reimburse owners, etc. The list goes on.

I don't know how the problem could be solved easily, but I know it's stupid to keep going the way it is. This isn't aimed at you btw, biggs, just in general.

-

Steve, do you not read? Or do you just have trouble retaining what you do read?

When speaking in the context of that list, which is what we/I was discussing, there is no difference between a gun or a knife. When guns are banned in these countries and a list shows that homicides are not zero, then my take on it is that the gun ban/control did nothing, except make it safer for the criminals to be criminals.

When or where do you see that I said a single thing about the school?

If you want to follow up on what I post, read what I posted.

And do not put words in my mouth!

There is nothing "I do not get about" what could have been, or about the difference between that psycho having a rifle or a knife that day in that school. Yes, it more than likely would have been better for most everyone in the school that day if he only had a knife.

But on the other hand, he could have had nothing but the clothes on his back, stolen a bus, driven the damn thing straight through the place and done even more damage.

-

@ccbiggs said:

Steve, do you not read? Or do you just have trouble retaining what you do read?

When speaking in the context of that list, which is what we/I was discussing, there is no difference between a gun or a knife. When guns are banned in these countries and a list shows that homicides are not zero, then my take on it is that the gun ban/control did nothing, except make it safer for the criminals to be criminals.

When or where do you see that I said a single thing about the school?

If you want to follow up on what I post, read what I posted.

And do not put words in my mouth!

There is nothing "I do not get about" what could have been, or about the difference between that psycho having a rifle or a knife that day in that school. Yes, it more than likely would have been better for most everyone in the school that day if he only had a knife.

But on the other hand, he could have had nothing but the clothes on his back, stolen a bus, driven the damn thing straight through the place and done even more damage.

Well, this whole thread was created as a response to the school massacre, or did I miss something?

-

What words did I put in your mouth?

-

Ok, maybe not putting words in my mouth. But apparently you think you are cute, though, using what I posted, taking it totally out of context and attaching it to something else. You were talking about that list, I was talking about that list. Then the next post from you: "I do not get something about the school".

Just to bust my chops or to try and get a rise out of me.

Play dumb if you like.

But I am done with it for a while.

Like I said I was just trying to get people to look at the big picture of "gun control/banning"

But I suppose there is no point trying to get you, or others who live where their gun rights have already been taken, to look at anything any differently.

Have a good day fellas.

Talk at you all later.

-

@unknownuser said:

gun rights

This discussion is really hard to understand from an outside perspective.

How can citizens have a right to a tool for killing? (I would have expected the opposite: people would need to obtain a license for guns, like a driver's license, and prove their skills and responsibility.)

How can you compare politically unstable (and some anarchic) countries with your own? The US is not a banana republic! In Europe we have been living well for a long time with a very low amount of weapons (I don't know anyone who has one). I don't miss anything (that's what makes this all hard to comprehend).

Whether there is official gun control, gun confiscation, legally "encouraged" gun disposal or voluntary gun disposal... none of it would matter if people hadn't bought the guns in the first place. If it works voluntarily, that's nice!

-

ccbiggs/cory, let me show you how your post reads, I'll chop a section out of the middle just to make it shorter.

@ccbiggs said:

Just part of an email that ended up in my inbox.

EMAIL

**A LITTLE GUN HISTORY

In 1929, the Soviet Union established gun control. From 1929 to 1953, about 20 million dissidents, unable to defend themselves, were rounded up and exterminated.

.

.

.

.

.

Defenseless people rounded up and exterminated in the 20th Century because of gun control: 56 million.

------------------------------**

Your conclusion: %(#4040FF)[Guns in the hands of honest citizens save lives and property and, yes, gun-control laws adversely affect only the law-abiding citizens.

Take note my fellow Americans, before it's too late!

The next time someone talks in favor of gun control, please remind them of this history lesson.

With guns, we are 'citizens'. Without them, we are 'subjects'.

During WWII the Japanese decided not to invade America because they knew most Americans were ARMED!]

I'm aware now that this isn't what you meant, but that is how it comes across at first reading, so you might be able to see how it could lead to some strong reactions and some confusion over the next posts.

-

@box said:

ccbiggs/cory, let me show you how your post reads, I'll chop a section out of the middle just to make it shorter.

@ccbiggs said:

Just part of an email that ended up in my inbox.

EMAIL

**A LITTLE GUN HISTORY

In 1929, the Soviet Union established gun control. From 1929 to 1953, about 20 million dissidents, unable to defend themselves, were rounded up and exterminated.

.

.

.

.

.

Defenseless people rounded up and exterminated in the 20th Century because of gun control: 56 million.

------------------------------**

Your conclusion: %(#4040FF)[Guns in the hands of honest citizens save lives and property and, yes, gun-control laws adversely affect only the law-abiding citizens.

Take note my fellow Americans, before it's too late!

The next time someone talks in favor of gun control, please remind them of this history lesson.

With guns, we are 'citizens'. Without them, we are 'subjects'.

During WWII the Japanese decided not to invade America because they knew most Americans were ARMED!]

I'm aware now that this isn't what you meant, but that is how it comes across at first reading, so you might be able to see how it could lead to some strong reactions and some confusion over the next posts.

I think he was just quoting an email, not actually writing that himself. Just to clarify.

-

Yes I got that, was just pointing out that it could be confused.

-

Here is a very interesting article, one I have read many times, and still I find myself captive to my own views on many issues.

I know it's long, but worth the read.

How Facts Backfire

It’s one of the great assumptions underlying modern democracy that an informed citizenry is preferable to an uninformed one. “Whenever the people are well-informed, they can be trusted with their own government,” Thomas Jefferson wrote in 1789. This notion, carried down through the years, underlies everything from humble political pamphlets to presidential debates to the very notion of a free press. Mankind may be crooked timber, as Kant put it, uniquely susceptible to ignorance and misinformation, but it’s an article of faith that knowledge is the best remedy. If people are furnished with the facts, they will be clearer thinkers and better citizens. If they are ignorant, facts will enlighten them. If they are mistaken, facts will set them straight.

In the end, truth will out. Won’t it?

Maybe not. Recently, a few political scientists have begun to discover a human tendency deeply discouraging to anyone with faith in the power of information. It’s this: Facts don’t necessarily have the power to change our minds. In fact, quite the opposite. In a series of studies in 2005 and 2006, researchers at the University of Michigan found that when misinformed people, particularly political partisans, were exposed to corrected facts in news stories, they rarely changed their minds. In fact, they often became even more strongly set in their beliefs. Facts, they found, were not curing misinformation. Like an underpowered antibiotic, facts could actually make misinformation even stronger.

This bodes ill for a democracy, because most voters — the people making decisions about how the country runs — aren’t blank slates. They already have beliefs, and a set of facts lodged in their minds. The problem is that sometimes the things they think they know are objectively, provably false. And in the presence of the correct information, such people react very, very differently than the merely uninformed. Instead of changing their minds to reflect the correct information, they can entrench themselves even deeper.

“The general idea is that it’s absolutely threatening to admit you’re wrong,” says political scientist Brendan Nyhan, the lead researcher on the Michigan study. The phenomenon — known as “backfire” — is “a natural defense mechanism to avoid that cognitive dissonance.”

These findings open a long-running argument about the political ignorance of American citizens to broader questions about the interplay between the nature of human intelligence and our democratic ideals. Most of us like to believe that our opinions have been formed over time by careful, rational consideration of facts and ideas, and that the decisions based on those opinions, therefore, have the ring of soundness and intelligence. In reality, we often base our opinions on our beliefs, which can have an uneasy relationship with facts. And rather than facts driving beliefs, our beliefs can dictate the facts we choose to accept. They can cause us to twist facts so they fit better with our preconceived notions. Worst of all, they can lead us to uncritically accept bad information just because it reinforces our beliefs. This reinforcement makes us more confident we’re right, and even less likely to listen to any new information. And then we vote.

This effect is only heightened by the information glut, which offers — alongside an unprecedented amount of good information — endless rumors, misinformation, and questionable variations on the truth. In other words, it’s never been easier for people to be wrong, and at the same time feel more certain that they’re right.

“Area Man Passionate Defender Of What He Imagines Constitution To Be,” read a recent Onion headline. Like the best satire, this nasty little gem elicits a laugh, which is then promptly muffled by the queasy feeling of recognition. The last five decades of political science have definitively established that most modern-day Americans lack even a basic understanding of how their country works. In 1996, Princeton University’s Larry M. Bartels argued, “the political ignorance of the American voter is one of the best documented data in political science.”

On its own, this might not be a problem: People ignorant of the facts could simply choose not to vote. But instead, it appears that misinformed people often have some of the strongest political opinions. A striking recent example was a study done in the year 2000, led by James Kuklinski of the University of Illinois at Urbana-Champaign. He led an influential experiment in which more than 1,000 Illinois residents were asked questions about welfare — the percentage of the federal budget spent on welfare, the number of people enrolled in the program, the percentage of enrollees who are black, and the average payout. More than half indicated that they were confident that their answers were correct — but in fact only 3 percent of the people got more than half of the questions right. Perhaps more disturbingly, the ones who were the most confident they were right were by and large the ones who knew the least about the topic. (Most of these participants expressed views that suggested a strong antiwelfare bias.)

Studies by other researchers have observed similar phenomena when addressing education, health care reform, immigration, affirmative action, gun control, and other issues that tend to attract strong partisan opinion. Kuklinski calls this sort of response the “I know I’m right” syndrome, and considers it a “potentially formidable problem” in a democratic system. “It implies not only that most people will resist correcting their factual beliefs,” he wrote, “but also that the very people who most need to correct them will be least likely to do so.”

What’s going on? How can we have things so wrong, and be so sure that we’re right? Part of the answer lies in the way our brains are wired. Generally, people tend to seek consistency. There is a substantial body of psychological research showing that people tend to interpret information with an eye toward reinforcing their preexisting views. If we believe something about the world, we are more likely to passively accept as truth any information that confirms our beliefs, and actively dismiss information that doesn’t. This is known as “motivated reasoning.” Whether or not the consistent information is accurate, we might accept it as fact, as confirmation of our beliefs. This makes us more confident in said beliefs, and even less likely to entertain facts that contradict them.

New research, published in the journal Political Behavior last month, suggests that once those facts — or “facts” — are internalized, they are very difficult to budge. In 2005, amid the strident calls for better media fact-checking in the wake of the Iraq war, Michigan’s Nyhan and a colleague devised an experiment in which participants were given mock news stories, each of which contained a provably false, though nonetheless widespread, claim made by a political figure: that there were WMDs found in Iraq (there weren’t), that the Bush tax cuts increased government revenues (revenues actually fell), and that the Bush administration imposed a total ban on stem cell research (only certain federal funding was restricted). Nyhan inserted a clear, direct correction after each piece of misinformation, and then measured the study participants to see if the correction took.

For the most part, it didn’t. The participants who self-identified as conservative believed the misinformation on WMD and taxes even more strongly after being given the correction. With those two issues, the more strongly the participant cared about the topic — a factor known as salience — the stronger the backfire. The effect was slightly different on self-identified liberals: When they read corrected stories about stem cells, the corrections didn’t backfire, but the readers did still ignore the inconvenient fact that the Bush administration’s restrictions weren’t total.

It’s unclear what is driving the behavior — it could range from simple defensiveness, to people working harder to defend their initial beliefs — but as Nyhan dryly put it, “It’s hard to be optimistic about the effectiveness of fact-checking.”

It would be reassuring to think that political scientists and psychologists have come up with a way to counter this problem, but that would be getting ahead of ourselves. The persistence of political misperceptions remains a young field of inquiry. “It’s very much up in the air,” says Nyhan.

But researchers are working on it. One avenue may involve self-esteem. Nyhan worked on one study in which he showed that people who were given a self-affirmation exercise were more likely to consider new information than people who had not. In other words, if you feel good about yourself, you’ll listen — and if you feel insecure or threatened, you won’t. This would also explain why demagogues benefit from keeping people agitated. The more threatened people feel, the less likely they are to listen to dissenting opinions, and the more easily controlled they are.

There are also some cases where directness works. Kuklinski’s welfare study suggested that people will actually update their beliefs if you hit them “between the eyes” with bluntly presented, objective facts that contradict their preconceived ideas. He asked one group of participants what percentage of its budget they believed the federal government spent on welfare, and what percentage they believed the government should spend. Another group was given the same questions, but the second group was immediately told the correct percentage the government spends on welfare (1 percent). They were then asked, with that in mind, what the government should spend. Regardless of how wrong they had been before receiving the information, the second group indeed adjusted their answer to reflect the correct fact.

Kuklinski’s study, however, involved people getting information directly from researchers in a highly interactive way. When Nyhan attempted to deliver the correction in a more real-world fashion, via a news article, it backfired. Even if people do accept the new information, it might not stick over the long term, or it may just have no effect on their opinions. In 2007 John Sides of George Washington University and Jack Citrin of the University of California at Berkeley studied whether providing misled people with correct information about the proportion of immigrants in the US population would affect their views on immigration. It did not.

And if you harbor the notion — popular on both sides of the aisle — that the solution is more education and a higher level of political sophistication in voters overall, well, that’s a start, but not the solution. A 2006 study by Charles Taber and Milton Lodge at Stony Brook University showed that politically sophisticated thinkers were even less open to new information than less sophisticated types. These people may be factually right about 90 percent of things, but their confidence makes it nearly impossible to correct the 10 percent on which they’re totally wrong. Taber and Lodge found this alarming, because engaged, sophisticated thinkers are “the very folks on whom democratic theory relies most heavily.”

In an ideal world, citizens would be able to maintain constant vigilance, monitoring both the information they receive and the way their brains are processing it. But keeping atop the news takes time and effort. And relentless self-questioning, as centuries of philosophers have shown, can be exhausting. Our brains are designed to create cognitive shortcuts — inference, intuition, and so forth — to avoid precisely that sort of discomfort while coping with the rush of information we receive on a daily basis. Without those shortcuts, few things would ever get done. Unfortunately, with them, we’re easily suckered by political falsehoods.

Nyhan ultimately recommends a supply-side approach. Instead of focusing on citizens and consumers of misinformation, he suggests looking at the sources. If you increase the “reputational costs” of peddling bad info, he suggests, you might discourage people from doing it so often. “So if you go on ‘Meet the Press’ and you get hammered for saying something misleading,” he says, “you’d think twice before you go and do it again.”

Unfortunately, this shame-based solution may be as implausible as it is sensible. Fast-talking political pundits have ascended to the realm of highly lucrative popular entertainment, while professional fact-checking operations languish in the dungeons of wonkery. Getting a politician or pundit to argue straight-faced that George W. Bush ordered 9/11, or that Barack Obama is the culmination of a five-decade plot by the government of Kenya to destroy the United States — that’s easy. Getting him to register shame? That isn’t.

Joe Keohane is a writer in New York.

-

Thanks Pete... that's really depressing.

-

@ccbiggs said:

There is no difference between a gun or a knife.

Then why buy a gun, given there's likely knives around the kitchen?

-

@pbacot said:

Thanks Pete... that's really depressing.

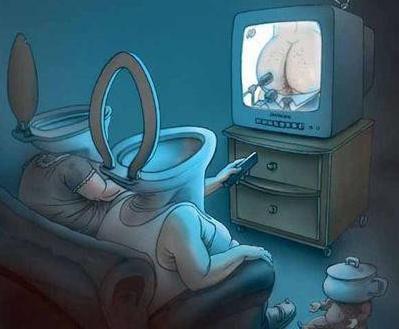

yeah.. at least the pic he posted at the end was funny

(i like the little baby with the bedpan head)

-

More batshit crazy stuff from Alex the douche Jones:

-

@solo said:

More batshit crazy stuff from Alex the douche Jones:

How the hell did he pass the mental checks when buying his 50 guns?

-

@rodentpete said:

@solo said:

More batshit crazy stuff from Alex the douche Jones:

How the hell did he pass the mental checks when buying his 50 guns?

Simple answer is he never had to take any mental exams to own a gun, they are not required in Texas.

-

What can I say? What can anyone say? It seems this thread came to a grinding halt after that video. The lunacy in this unbalanced, evidently psychotic individual runs deep. The scary thing is it stands to "reason" (a word the concept of which Loony Jones would have no familiarity with) that he is not alone. Very scary, very scary indeed.

-

@solo said:

More batshit crazy stuff from Alex the douche Jones:

maybe batshit crazy in the way he lets it out (he's an entertainer after all..)

some of the stuff he's talking (or whatever that is he's doing) about though, might have some truth in there...

[edit] the thing i personally find batshit crazy about him (and i've mentioned this earlier itt), is that he's seriously contemplating/imagining/(fantasizing about?) being in some sort of gunfight with the people he's ripping on.. straight up suicide at this point in the game..
