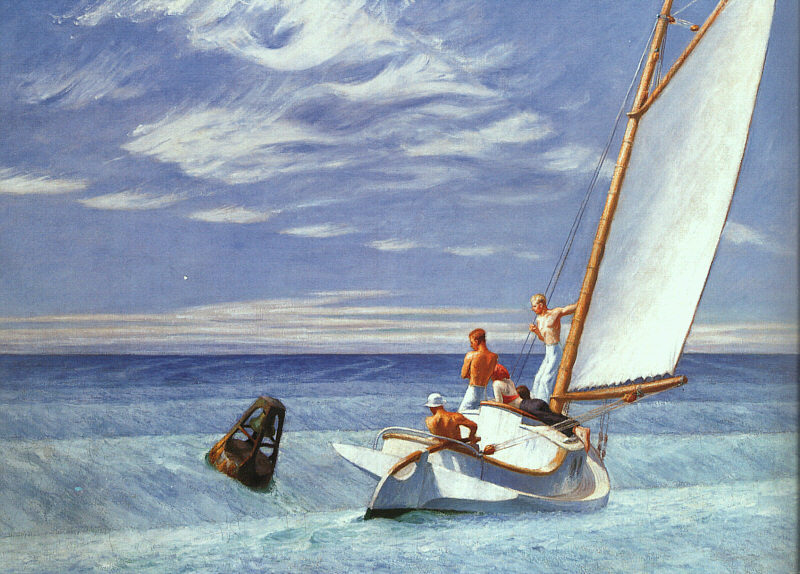

Turning a 123D Catch file set into an Edward Hopper painting

-

Roger, is it possible to post the 123d catch file you made of the boat? I'd be curious to play with it, if you don't mind sharing it.

-

I would like to know the size of the file, Roger, if you don't mind. Great job.

-

@chris fullmer said:

Roger, is it possible to post the 123d catch file you made of the boat? I'd be curious to play with it, if you don't mind sharing it.

The boat and the water are all one. I attached call out tags to the corners of the sails and then destroyed the sails and replaced them with flat planes. If I had not been lazy, I could have then used "soap bubble" to re-inflate the sails. The problem was my lighting. On one side of the sail I was seeing reflected lighting and on the other I was seeing transmitted light. This is one of those things 123D can't interpret properly and the result was sails that looked like "croissants." As good as they taste, croissants do not work well as sails in practice.

Chris, the file is over 4 MB and I can't post it. Send me your email address and I will send the file to you.

-

Paul Debevec, PhD, wrote the following paper:

http://people.ict.usc.edu/~debevec/Thesis/debevec-phdthesis-1996.pdf

If this subject interests you, you may find some gems in it. I do plan to read it and see if anything sticks.

-

This is a really cool technique, Roger and I LOVE Edward Hopper!

-

Nice Roger.

Have you done any more?

-

@jpalm32 said:

Nice Roger.

Have you done any more?

This was captured with just three photos, but the more ambient occlusion you have, the more photos you need, up to 70. The finer the detail you need, the bigger your mesh file grows. This technique has potential if you are comfortable with workarounds to manage exceptions. The elephant came out quite well. You can't see it here, but the ivy was a failure in terms of 3D: seen from the side, it is a long, photo-textured funnel shape. For rectilinear buildings, I think it is perhaps best to capture the mesh, convert it to a locked component, and then use SketchUp to do a 3D, untriangulated "traceover." When the 3D traceover is complete, go back and erase the complex mesh except where you might want to keep irregular or hard-to-define surfaces.

Let's say I was doing the original design for the Bangkok hotel where this photo was taken. I would do the main body of the hotel in SU and then do a 3D photo session with an elephant. Then I would plug the elephant mesh into the hotel, with a notice to the artisans sculpting the elephant to do the best interpretation of MY elephant that THEY can.

-

What is the purpose of the reference line tool? How can it be used to build a better model in SU?

-

Amazing and cool technique

-

A problem I am having is that the OBJ files I export are often too big for SketchUp to load when they are done at high resolution, and sometimes I even have the problem at standard resolution. Cell phone resolution always seems to load. However, the lower the resolution of the original 123D Catch file, the more likely the file is to have those nasty tornado-shaped funnels connecting the 3D object to the background. This surprises me, because the native high-res 123D Catch files load even though they are carrying texture as well as shape information. Even though the 123D Catch file is created in the cloud, I view it and twist it in space in an app that is resident on my computer.

The high-res files created are also too big to open in MeshLab. So I guess this is just a SU capacity problem. What are the chances that SU9 will be able to manipulate larger files?
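For anyone wanting to compare the high, standard, and cell phone exports before trying an import, here is a minimal Python sketch that counts the vertices and faces in an OBJ file. It assumes the export is a plain-text Wavefront OBJ in which vertex lines start with `v` and face lines start with `f`. The function name `count_obj_elements` is made up for this example, and the sketch says nothing about where SketchUp's actual limit lies.

```python
from typing import Iterable, Tuple


def count_obj_elements(lines: Iterable[str]) -> Tuple[int, int, int]:
    """Return (vertices, faces, triangles) for the lines of an OBJ file.

    Hypothetical helper for illustration only; it ignores everything
    except "v" (vertex) and "f" (face) records.
    """
    vertices = faces = triangles = 0
    for line in lines:
        parts = line.split()
        if not parts:
            continue  # blank line
        if parts[0] == "v":
            vertices += 1
        elif parts[0] == "f":
            faces += 1
            # A face with n corners splits into n - 2 triangles.
            corners = len(parts) - 1
            triangles += max(corners - 2, 0)
    return vertices, faces, triangles
```

To try it on a real export, open the file and pass the file object in, for example `with open("boat.obj") as fh: print(count_obj_elements(fh))`, where `boat.obj` is a placeholder file name.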

-

@roger said:

What are the chances that SU9 will be able to manipulate larger files?

We've been asking since SU6.

I give up on some OBJ imports, from wings3d for example, there's a certain poly limit then I just can't import (or I get strange behaviour, missing geometry etc)

-

@olishea said:

@roger said:

What are the chances that SU9 will be able to manipulate larger files?

We've been asking since SU6.

I give up on some OBJ imports, from wings3d for example, there's a certain poly limit then I just can't import (or I get strange behaviour, missing geometry etc)

Do you think the poly limit is specific to your machine, or does it have to do with the SU code? Any idea how such a bottleneck works?

-

@unknownuser said:

Seems a lot of Hooper paintings will be soon in France, next week !

(in English!

We have a copy of that one hanging in the bathroom. I just looked at your post and realized for the first time that the painting is called "The Long Leg."

Oh, it's Hopper, not Hooper.

-

Seems a lot of Hopper paintings will soon be in France, next week!

(in English!

-

Oops!

Corrected

-

Hopper is to SketchUp as Winslow Homer is to your favorite render engine.

-

Yes, and another great one!

-

I had the luck to see the Hopper exhibition today!

Very cool! Color, light, composition, mysterious mood!

The only famous boat painting was this one
