HDRI's

-

Wow Roger! You are clearly a pro! That is one awesome pano!

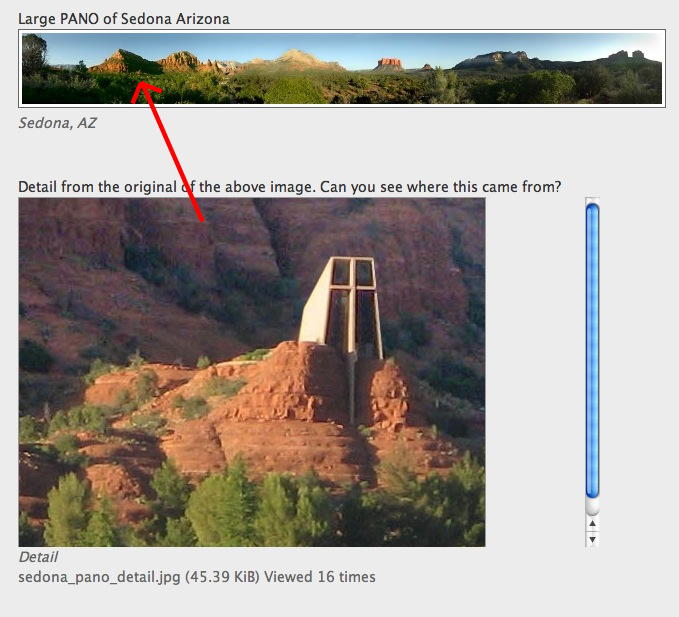

Uh... So I'm guessing the detail is from the left of the original, right between that big tree and that little one?

-

Yeah great info Roger!

Is the detail from the left (maybe 25% in) just under the shadow of the mountain?

-

My guess

-

Wow FireyMoon, close, but not quite. The mountain on the left has a sunny side and a shadow side. At the bottom of the shadow side and just above the tree line are a couple of reddish pixels. The inset detail comes from the area those pixels represent.

I actually was working on the design of a pano head and was using SketchUp as my design tool. I was going to order extrusions from the 80/20 company to build some of the components. They offered a custom design service for special components not in the catalog. I designed a special rotating part and sent the drawing to their design department for custom manufacturing, but they said they would not take SketchUp as an input even though I had, in fact, made conventional plans and elevations as well as 3D views. They go to great lengths to create comprehensive catalogs and operate a custom manufacturing department, but it is all for naught when you have to talk to someone with a lot of wax in their ears.

-

Ah Mike et al, you got there before me. Thank you.

-

They don't call me old eagle eye for nothing... trouble is, I sometimes see too much, and that can get you into trouble.

-

On the subject of panos, I came across AutoStitch for the iPhone: http://www.cloudburstresearch.com/autostitch/autostitch.html I tend to use the iPhone for many of the pics I take and find AutoStitch very useful. It's good value at $2.99!

-

Thanks Roger for that detailed explanation. A question... to create a hemispherical global, is it a matter of lens, or do you have to rotate the camera on the z axis as well?

-

honolulu, I posted this to learn as well, but I will try to answer your question, and maybe someone with more knowledge than me can jump in.

Dynamic range can be expressed as a ratio. In a scene it is the ratio between two luminance values, in essence the lightest and the darkest. By photographing the same scene at several different exposures and then combining them in software, you can extract greater detail than any single exposure holds, right down to the smallest unit a computer can store, the bit. A quote I read and kept around: "Therefore, an HDR image is encoded in a format that allows the largest range of values, e.g. floating-point values stored with 32 bits per color channel.

Another characteristics of an HDR image is that it stores linear values. This means that the value of a pixel from an HDR image is proportional to the amount of light measured by the camera. In this sense, HDR images are scene-referred, representing the original light values captured for the scene.

Whether an image may be considered High or Low Dynamic Range depends on several factors. Most often, the distinction is made depending on the number of bits per color channel that the digitized image can hold." Here is a link to that discussion: http://www.hdrsoft.com/resources/dri.html#dr
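To put a number on the "ratio" idea, here is a minimal Python sketch that turns two luminance readings into a contrast ratio and a count of stops (doublings of light). The function names and the example readings are made up for illustration; they do not come from the quoted page.

```python
import math


def contrast_ratio(brightest, darkest):
    """Dynamic range expressed as a plain ratio, e.g. 8000.0 means 8000:1."""
    if brightest <= 0 or darkest <= 0:
        raise ValueError("luminance values must be positive")
    return brightest / darkest


def dynamic_range_stops(brightest, darkest):
    """The same ratio expressed in stops: each stop is a doubling of light."""
    return math.log2(contrast_ratio(brightest, darkest))


if __name__ == "__main__":
    # Hypothetical readings (arbitrary linear units), not measured values.
    sunlit_wall = 16000.0
    deep_shadow = 2.0
    print("ratio:", contrast_ratio(sunlit_wall, deep_shadow))
    print("stops:", round(dynamic_range_stops(sunlit_wall, deep_shadow), 2))
```

Thinking in stops is handy because bracketed exposures are usually spaced in stops, so the figure hints at how many brackets a scene might need.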

I am interested in them more for rendering than strictly for photography, because when I use them as a source of lighting in rendered images, the results always seem much better to me.

-

I came across a very beautiful HDRI photo on the website of CCY Architects: http://www.ccyarchitects.com/ Since the site contained no copyright notice, I will put it up here and gladly remove it if it offends them. Perhaps the photographer will come forward and take credit.

-

@dale said:

Thanks Roger for that detailed explanation. A question... to create a hemispherical global, is it a matter of lens, or do you have to rotate the camera on the z axis as well?

The answer is, it depends.

You can use a 180 degree fisheye lens, lie on your back looking up, and get a hemisphere in one shot. Or you can go to the other extreme and shoot hundreds of shots with an extreme telephoto (in the x, y and z axes) and stitch them together. The difference between the two is the amount of work and the resolution. The fisheye will be in the 5 to 10 megabyte range. The stitched image could be 40, 100 or even more gigabytes.
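As a rough way to see the trade-off between one fisheye shot and a big telephoto mosaic, here is a back-of-envelope Python sketch. It divides the solid angle of the hemisphere by the solid angle one frame covers, shrunk to allow for overlap between neighbours. The function names, the 25% default overlap and the sample lens angles are all assumptions for illustration, and since real shooting grids never pack perfectly, treat the result as an optimistic minimum.

```python
import math


def frame_solid_angle(hfov_deg, vfov_deg):
    """Solid angle (steradians) of a frame spanning hfov x vfov degrees."""
    half_h = math.radians(hfov_deg) / 2
    half_v = math.radians(vfov_deg) / 2
    return 4 * math.asin(math.sin(half_h) * math.sin(half_v))


def min_shots_for_hemisphere(hfov_deg, vfov_deg, overlap=0.25):
    """Optimistic count of frames needed to cover a full hemisphere.

    overlap is the fraction of each frame edge shared with its neighbour
    (an assumed value; stitching software needs some shared area).
    """
    if not (0 < hfov_deg <= 180 and 0 < vfov_deg <= 180):
        raise ValueError("field of view must be in (0, 180] degrees")
    if not 0 <= overlap < 1:
        raise ValueError("overlap must be in [0, 1)")
    hemisphere = 2 * math.pi
    usable = frame_solid_angle(hfov_deg, vfov_deg) * (1 - overlap) ** 2
    # Small tolerance so exact fits are not bumped up by rounding error.
    return max(1, math.ceil(hemisphere / usable - 1e-9))


if __name__ == "__main__":
    print("180 degree fisheye:", min_shots_for_hemisphere(180, 180, overlap=0))
    print("5 x 3.3 degree telephoto:", min_shots_for_hemisphere(5, 3.3))
```

The shot count rises roughly with the inverse square of the field of view, which is why the file sizes balloon so quickly with long lenses.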

This is MAC (The Mesa Arts Center) in Mesa, Arizona. If I remember right, this is composed of a matrix of 12 photos (4 across by 3 high). I guess the FOV might be 120 degrees. I had to do a fair amount of PS to get rid of the worst distortion. For comparison, SketchUp's default FOV is 35 degrees, and I estimate our sharp field of view to be about 12 degrees.

As humans we stitch the images in front of us all the time, but we do it in our brain and in our memory. At 12 degrees we are really looking through a keyhole, but as the eye scans a scene it gathers many views and stitches them together.

In fact this is also HDRI, as I took a normal and an underexposed view of every position so I could keep the sails from overexposing. We are looking at two layers of 12 shots each.
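For anyone wondering what combining a normal and an underexposed frame amounts to numerically, here is a minimal Python sketch. It is not the Photoshop layer workflow used for the picture above, just one simple way to express the idea: wherever the normal frame is blown out, take the darker frame and scale it back up by the exposure difference. It assumes linear pixel values between 0.0 and 1.0 (real JPEGs are gamma encoded and would need linearizing first), and the function name, the clip threshold and the sample pixels are all hypothetical.

```python
def merge_two_exposures(normal, under, stops_under, clip=0.95):
    """Merge two linear exposures of the same scene, pixel by pixel.

    normal      -- pixel values from the normally exposed frame (0.0 to 1.0)
    under       -- pixel values from the underexposed frame (0.0 to 1.0)
    stops_under -- how many stops darker the second frame was shot
    clip        -- assumed level above which the normal frame counts as blown out
    """
    if len(normal) != len(under):
        raise ValueError("both frames must have the same number of pixels")
    gain = 2.0 ** stops_under  # each stop halves the light, so scale back up
    merged = []
    for n, u in zip(normal, under):
        if n >= clip:
            # Highlight is clipped in the normal frame: recover it from the
            # darker frame, which can push the result above 1.0.
            merged.append(u * gain)
        else:
            # Normal frame is fine here and has less noise in the shadows.
            merged.append(n)
    return merged


if __name__ == "__main__":
    # Made-up pixels: shadow, midtone, and a blown-out highlight.
    normal_frame = [0.10, 0.50, 1.00]
    under_frame = [0.025, 0.125, 0.60]  # shot two stops darker
    print(merge_two_exposures(normal_frame, under_frame, stops_under=2))
```

Values above 1.0 in the result are the whole point: they need a floating-point format to store, and they have to be tone mapped before they can be shown on a normal screen.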
