Drawing images to the SketchUp viewport

-

The SketchUp API lacks a method to draw images to the viewport. It is still an open question whether such a method should also support sizing (variable width/height) or even matrix transformations (to draw textures). Either way, native OpenGL image drawing would certainly perform better than drawing pixel by pixel.

So that is what this experiment is about: how far can we get with the current API methods? The method

draw2d(GL_POINTS, Array<Geom::Point3d>)

allows us to draw individual pixels. Has anyone used it on a larger scale?
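For readers who have not used it, here is a minimal sketch of such a call. The helper name `draw_pixel_block` is made up for illustration, the `GL_POINTS` fallback is only there so the snippet loads outside SketchUp, and it assumes that plain `[x, y, z]` arrays are accepted in place of `Geom::Point3d` objects.

```ruby
# GL_POINTS is predefined inside SketchUp. This fallback (the OpenGL enum
# value) only exists so the sketch can be loaded in plain Ruby.
GL_POINTS = 0x0000 unless defined?(GL_POINTS)

# Hypothetical helper: draws a filled rectangle out of single pixels.
# `view` is expected to behave like Sketchup::View, i.e. to respond to
# drawing_color= and draw2d.
def draw_pixel_block(view, left, top, width, height, color)
  points = []
  height.times do |dy|
    width.times do |dx|
      # Assumption: [x, y, z] arrays are accepted instead of Geom::Point3d.
      points << [left + dx, top + dy, 0]
    end
  end
  view.drawing_color = color
  # One draw2d call for all pixels that share this color.
  view.draw2d(GL_POINTS, points)
  points.length
end
```

Inside a tool, this would be called from the tool's `draw(view)` method, for example `draw_pixel_block(view, 10, 20, 64, 64, "red")`.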

I used it here to draw PNG images to test whether it makes sense to use it in a plugin, or rather to wait until an API method is added.

Version: 1.0.2

Date: 28.06.2014

Usage:

Install the attached plugin in SketchUp.

Menu Plugins → [Test] PNG Loader Tool

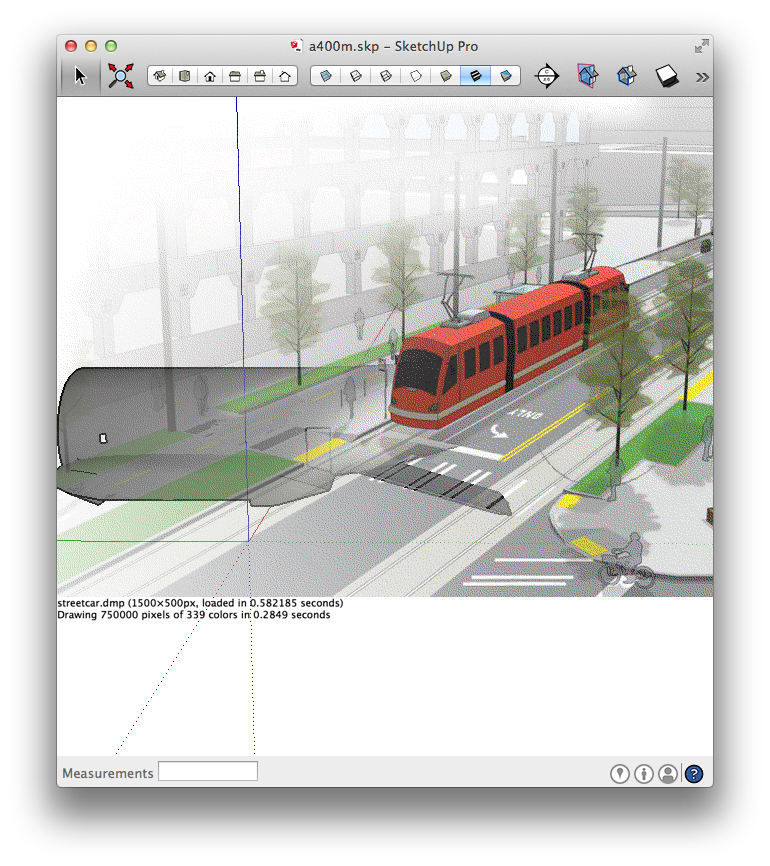

It will draw a PNG image. By left-clicking you can switch to bigger images. By double-clicking you can try to load your own PNG image (SU 2014).

For loading new PNG images I use ChunkyPNG, a pure-Ruby library. However, it requires some of the standard libraries that ship with SketchUp 2014. It also appears unable to read every PNG file, only those with a particular encoding (255 indexed web-safe colors). But that is enough to show the principle. The biggest obstacle seems to be reading image files. (Another API request: expose the internal image-reading methods?)

The idea is to change the structure of the image data by sorting pixels by color. Each color is assigned a list of pixel coordinates, which can be drawn efficiently with the API method. This data is then marshalled and stored in a file.
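The restructuring described above could look roughly like this. It is a sketch, not the plugin's actual code: all method names are hypothetical, colors are assumed to be `[r, g, b]` arrays, and it assumes `view.drawing_color=` accepts such arrays.

```ruby
# GL_POINTS is predefined inside SketchUp; fallback for plain Ruby only.
GL_POINTS = 0x0000 unless defined?(GL_POINTS)

# Hypothetical helper: turns rows of pixel colors into
# { color => [[x, y, 0], ...] }. `rows` is an array of rows, each row an
# array of [r, g, b] colors.
def group_pixels_by_color(rows)
  groups = {}
  rows.each_with_index do |row, y|
    row.each_with_index do |color, x|
      (groups[color] ||= []) << [x, y, 0]
    end
  end
  groups
end

# Store the grouped data so the image does not have to be decoded again.
def save_pixel_groups(groups, path)
  File.open(path, "wb") { |file| Marshal.dump(groups, file) }
end

def load_pixel_groups(path)
  File.open(path, "rb") { |file| Marshal.load(file) }
end

# One draw2d call per color instead of one call per pixel.
# `view` is expected to behave like Sketchup::View.
def draw_pixel_groups(view, groups)
  groups.each do |color, points|
    view.drawing_color = color
    view.draw2d(GL_POINTS, points)
  end
end
```

The number of `draw2d` calls then depends on the number of distinct colors rather than on the number of pixels, which is why images with few colors are the favourable case.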

-

Very interesting.

However, your test doesn't work for me. Nothing is drawn to the screen, and left-clicking doesn't do anything. When double-clicking and loading "Plugins\ae_PNGLoader\png\sketchup100.png", I got the following error (Win7, SU14):

As a side note, I wonder how LightUp and the latest Thea overlay work.

The LightUp overlay happens in a tool, so it might use a similar technique to yours. But Thea overlays the render no matter which tool is active.

-

I tried this a while back, but I found that different graphics cards drew pixels differently. I had nVidia and ATI cards, and one of the brands drew the pixels blurred. The only way I found to draw crisp images on all the graphics cards I tried was to use GL_LINES to draw each pixel as a line - offsetting by half a pixel to ensure things were drawn properly.

This is what the current version of Vertex Tools does. But it's terribly slow - especially if you have many different colours. I was doing what you did, sorting pixels by color to draw similar ones in bulk. Though I used BMP files only, as it was easy to write a BMP reader in Ruby.

@tt_su said:

I tried this a while back, but I found that different graphics cards drew pixels differently. I had nVidia and ATI cards, and one of the brands drew the pixels blurred. The only way I found to draw crisp images on all the graphics cards I tried was to use GL_LINES to draw each pixel as a line - offsetting by half a pixel to ensure things were drawn properly.

This is what the current version of Vertex Tools does. But it's terribly slow - especially if you have many different colours. I was doing what you did, sorting pixels by color to draw similar ones in bulk. Though I used BMP files only, as it was easy to write a BMP reader in Ruby.

The reason they were blurred is sample positions / fill rules.

For many years DirectX had an odd convention of sampling the top-left corner of a pixel but the center of a texel. They did finally correct this in DX9 (or perhaps DX10). It meant you had to offset everything by 0.5 pixels to ensure that it was filtered correctly, i.e. if you wanted 1 texel to map precisely to 1 pixel, you'd need to offset.

I guess ATI used the same fill-rule for OpenGL too (which has always been consistent and correct on this).

Adam

-

Yeah, it only happens when anti-aliasing is enabled. When it is off, it looks fine regardless.

-

Found my notes on GL_POINTS - why I had to resort to GL_LINES:

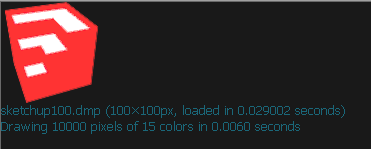


    # ATI cards appear to have issues when AA is on and you pass
    # view.draw2d(GL_POINTS, p) integer points. The points do not appear on
    # screen. This can be remedied by ensuring the X and Y co-ordinates are
    # offset .5 to the centre of the pixel. This appears to work in all cases on
    # all cards and drivers.
    #
    # nVidia on the other hand has another problem where GL_POINTS appear to
    # always be blurred when AA is on - offset or not.
    #
    # To work around this, draw each point as GL_LINES - this appears to draw
    # aliased pixels without colour loss on both systems. There is a slight
    # performance hit to this, about 1/3 or 1/2 times slower.
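A rough sketch of the GL_LINES workaround from these notes follows. The exact offsets used in Vertex Tools are not given in this thread, so the choice here (a one-pixel-long horizontal segment through the pixel centre) is an assumption, and the helper names are made up for illustration.

```ruby
# GL_LINES is predefined inside SketchUp; fallback for plain Ruby only.
GL_LINES = 0x0001 unless defined?(GL_LINES)

# Hypothetical helper: expands integer pixel coordinates into GL_LINES
# vertex pairs. Each pixel becomes a horizontal segment one pixel long,
# shifted down by half a pixel so that it runs through the pixel centre.
# Assumption: this is one possible choice of offsets, not necessarily the
# one Vertex Tools uses.
def pixels_to_line_segments(pixels)
  pixels.flat_map do |x, y|
    [[x, y + 0.5, 0], [x + 1, y + 0.5, 0]]
  end
end

# Draws all pixels of one color as short lines instead of points.
# `view` is expected to behave like Sketchup::View.
def draw_crisp_pixels(view, pixels, color)
  view.drawing_color = color
  view.line_width = 1
  view.draw2d(GL_LINES, pixels_to_line_segments(pixels))
end
```

Each pixel now costs two vertices instead of one, which fits the slowdown mentioned in the notes.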

And this is what it looks like on nVidia with AA enabled:
